Using Tables to Build Better Knowledge Graphs

April 24, 2020

In the quest to teach software to understand language, scientists have mainly focused on text as a source of data to help train their algorithms. Among other things, text is used to populate a knowledge graph, which maps the relationships between phrases, referred to as entities. The knowledge graph serves as a specialty dictionary for the proposed algorithm.

“We sometimes assume knowledge graphs are perfect, but they have never been perfect,” says research scientist Shuo Zhang, who first came to Bloomberg’s AI Group as an intern while studying for his Ph.D. at the University of Stavanger in Norway, and recently joined the company full-time. His research interests focus on attempting to find new resources that can improve and add to knowledge graphs.

Now Zhang, working with Bloomberg AI researchers Edgar Meij and Ridho Reinanda during his internship, as well as University of Stavanger professor Krisztian Balog, has made important progress in showing how the entries in tables on the Web — as opposed to sentences or paragraphs of text — can be used to help build out a knowledge graph. On Friday, April 24, 2020, during The Web Conference 2020, Zhang presented the team’s work in a paper entitled “Novel Entity Discovery from Web Tables.”

“The Web as a whole is relatively unexplored territory for us,” says Meij. “This is part of our efforts to make better sense of the Web as a resource for Bloomberg and our clients.”

Zhang believes tables can be a rich resource. Before he came to Bloomberg, he’d been working extensively with tables as part of his Ph.D. work. Not only can tables provide a wealth of information, he says, but that information is also already somewhat structured.

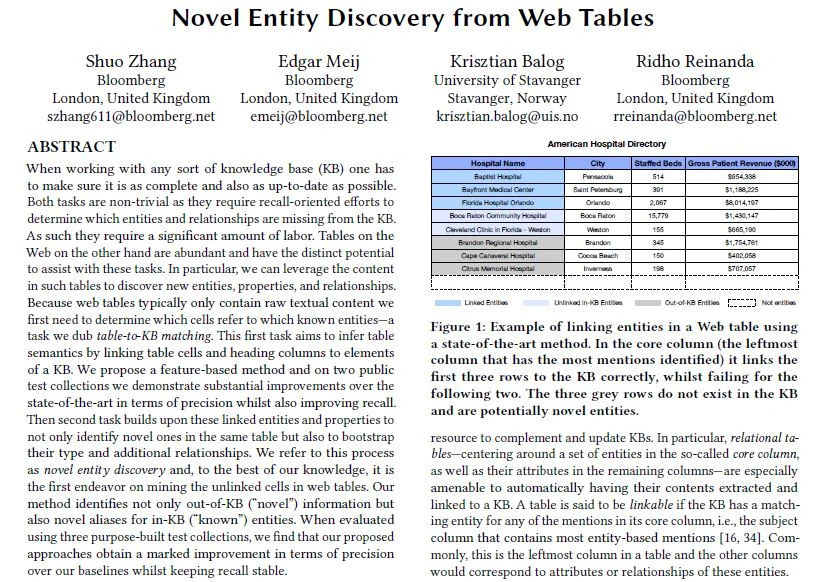

For example, in a relational table, the column on the left (the core column) often has a heading and a list of related entries. These relationships should give researchers a head start at classifying the entries and deciding if they should be treated as entities that belong in a knowledge graph, says Zhang. The title of the table should also contain helpful information.

Yet, Zhang found that many of the entries in tables are not actually contained in large knowledge graphs. Of course, some of those entries are noise – they might be graphics or strings of characters that don’t make any sense. However, many entries should be included, and Zhang’s team set out to develop a process for discovering them and linking them to an existing knowledge graph.

Often, says Zhang, an entity won’t appear in a knowledge graph simply because there is a time lag. Sometimes, less-prominent entries don’t make it in, either. A third class of entries may actually appear in the knowledge graph, but aren’t typed or linked to other entities properly.

Zhang’s team started with 50.8 million relational tables from Web Data Commons, and a knowledge graph based on Wikipedia.

The first task was to find the entities that were in the knowledge graph, but weren’t linked properly. For each mention in the core column of a table, the researchers found the top matches in Wikipedia. They then used a number of lexical and semantic measures to determine how similar those matches were to the entity in question. They used the table type as a filter to make sure there weren’t multiple similar entities that had different meanings.

Here, the team found the Wikipedia search worked very well for selecting candidates. Column values weren’t found to be terribly important. Determining the table type was more helpful later on, when researchers were trying to distinguish between two seemingly similar entities.
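
To make the candidate-ranking idea concrete, here is a minimal sketch of scoring knowledge-graph candidates against a table mention using purely lexical measures. It is an illustration, not the team's method: the function names, the equal 0.5/0.5 weighting, and the sample candidate list are all assumptions, and the semantic measures and table-type filter described above are left out.

```python
from difflib import SequenceMatcher


def token_jaccard(a: str, b: str) -> float:
    """Jaccard overlap between the lowercased word sets of two strings."""
    ta, tb = set(a.lower().split()), set(b.lower().split())
    if not ta or not tb:
        return 0.0
    return len(ta & tb) / len(ta | tb)


def char_similarity(a: str, b: str) -> float:
    """Character-level similarity ratio in [0, 1]."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def rank_candidates(mention: str, candidates: list[str]) -> list[tuple[str, float]]:
    """Score each candidate title against the mention, best match first.

    The equal weighting of the two measures is an arbitrary choice
    made for this sketch.
    """
    scored = [
        (c, 0.5 * token_jaccard(mention, c) + 0.5 * char_similarity(mention, c))
        for c in candidates
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical candidates, as if returned by a search over Wikipedia titles.
ranking = rank_candidates("Cisco Systems", ["Sysco", "Cisco Systems", "San Francisco"])
```

In a fuller pipeline, a score like this would be one feature among several rather than the final decision.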

The second step was to try to match the column headings to properties already found in the knowledge graph. The most common column headings include phrases such as elevation, location, and industry—all relationships that should already be contained in the knowledge graph. Here, the team found that properly matching the headings relied heavily on matching the values in the column.
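
The reliance on column values can be sketched as follows: for each row whose core-column entity is already linked, check which knowledge-graph property holds the same value as the table cell, then pick the property that agrees most often. This is a simplified illustration under stated assumptions; the toy `kg_facts` dictionary, the exact-match comparison, and the function names are hypothetical and not taken from the paper.

```python
def normalize(value: str) -> str:
    """Lowercase and trim a value so trivially different strings compare equal."""
    return value.strip().lower()


def match_heading(rows, kg_facts):
    """Guess which knowledge-graph property a table column corresponds to.

    rows:     list of (linked_entity, cell_value) pairs for one column
    kg_facts: dict mapping entity -> {property: value} (a toy stand-in
              for a real knowledge graph)
    Returns (best_property, fraction_of_rows_supporting_it).
    """
    counts = {}
    for entity, cell in rows:
        for prop, value in kg_facts.get(entity, {}).items():
            if normalize(value) == normalize(cell):
                counts[prop] = counts.get(prop, 0) + 1
    if not counts:
        return None, 0.0
    best = max(counts, key=counts.get)
    return best, counts[best] / len(rows)


# Toy data: the column's heading is unknown, but its values line up with "elevation".
kg_facts = {
    "Mont Blanc": {"elevation": "4808", "location": "Alps"},
    "Denali": {"elevation": "6190", "location": "Alaska"},
}
rows = [("Mont Blanc", "4808"), ("Denali", "6190"), ("K2", "8611")]
prop, support = match_heading(rows, kg_facts)
```

Real table values rarely match exactly (units, rounding, formatting), so a practical version would need a softer comparison than string equality.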

Even after completing this analysis, some entries still weren’t accounted for. The third step was to decide if those table entries should become entities within the knowledge graph. One determinant: Does the candidate come from a table that generally contains a lot of linkable entities, or does it come from one that doesn’t appear to be that useful? The researchers also looked at semantic characteristics of the mention, and how prominent the mention appeared to be. All of these criteria worked well together.
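
One of these signals, how linkable a table's core column is overall, is simple enough to sketch. The code below treats unlinked mentions as candidate new entities only when enough of their neighbors in the same column are already in the knowledge graph. The 0.5 threshold and the function names are assumptions made for illustration; the article describes this signal being combined with semantic and prominence features rather than used as a hard cutoff.

```python
def table_linkability(core_column, linked):
    """Fraction of core-column mentions that already link to the knowledge graph."""
    if not core_column:
        return 0.0
    return sum(1 for m in core_column if m in linked) / len(core_column)


def novel_candidates(core_column, linked, min_linkability=0.5):
    """Return unlinked mentions from tables that look mostly linkable.

    min_linkability is a hypothetical cutoff chosen for this sketch.
    """
    if table_linkability(core_column, linked) < min_linkability:
        return []  # table looks noisy, so its leftovers are not trusted
    return [m for m in core_column if m not in linked]


linked = {"Apple", "Microsoft", "Google"}
good_table = ["Apple", "Microsoft", "Acme Robotics", "Google"]
noisy_table = ["xx1", "###", "Apple"]

candidates = novel_candidates(good_table, linked)
rejected = novel_candidates(noisy_table, linked)
```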

The final step was to make sure that any new entry was properly positioned and classified within the knowledge graph. That required grouping similar mentions and assigning them the proper type. A string of text such as DSP can refer to the chipset company or the phrase “digital signal processing,” and it has to go in the right place. Similarly, the phrases “Cisco Systems Inc” and “Cisco,” while different, actually refer to the same company.
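
A rough sketch of this grouping step is shown below. It merges mentions of the same type when one mention's words are contained in the other's, after dropping common legal suffixes, and keeps mentions of different types apart. The suffix list, the containment heuristic, and the type labels are all assumptions for illustration; a heuristic this crude would over-merge in practice (any two same-type names sharing a single distinctive word), so it only shows the shape of the problem.

```python
import re

# Hypothetical list of legal suffixes to ignore when comparing company names.
LEGAL_SUFFIXES = {"inc", "corp", "ltd", "llc", "co"}


def tokens(mention: str) -> frozenset:
    """Lowercased alphanumeric words of a mention, minus legal suffixes."""
    words = re.findall(r"[a-z0-9]+", mention.lower())
    return frozenset(w for w in words if w not in LEGAL_SUFFIXES)


def group_mentions(typed_mentions):
    """Greedily group (mention, type) pairs that appear to name the same entity.

    Two mentions are merged when they share a type and one token set
    contains the other. Returns a list of dicts with the keys
    'type', 'tokens', and 'mentions'.
    """
    groups = []
    for mention, mtype in typed_mentions:
        toks = tokens(mention)
        for g in groups:
            same_type = g["type"] == mtype
            nested = toks and g["tokens"] and (toks <= g["tokens"] or g["tokens"] <= toks)
            if same_type and nested:
                g["mentions"].append(mention)
                g["tokens"] = g["tokens"] | toks
                break
        else:
            groups.append({"type": mtype, "tokens": toks, "mentions": [mention]})
    return groups


groups = group_mentions([
    ("Cisco Systems Inc", "company"),
    ("Cisco", "company"),
    ("DSP", "company"),
    ("DSP", "technology"),
])
```

Here the two Cisco mentions end up in one group, while the two DSP mentions stay separate because their assigned types differ.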

To test the results, the team used crowdsourcing to examine 20,000 unlinked mentions to determine if they were indeed entities, and if they were contained in the knowledge graph. Some 2,000 of these were manually examined to determine where in a knowledge graph they should appear.

Overall, the team found tables to be a rich source of novel entities that should be contained in a knowledge graph. Compared to other sources of new entities, such as news stories and social media, “Tables are much easier,” says Zhang.

Other researchers seem to share his enthusiasm: scientists looking to build upon this work have already contacted him. The Bloomberg team is assisting those efforts by making many of the resources used in this work available to the public. The presentation of the paper itself, which Zhang conducted online via Zoom, should only add to that excitement.