The journey to build Bloomberg’s ML Inference Platform Using KServe (formerly KFServing)

October 12, 2021

by Dan Sun, a Tech Lead for Serverless Infrastructure on Bloomberg’s Data Science & Compute Infrastructure team, and Yuzhui Liu, Team Lead of Bloomberg’s Data Science Runtimes team

This post details the beginning of Bloomberg’s journey to build a machine learning inference platform. For those readers who are less familiar with the technical concepts involved in machine learning model serving, check out “Kubeflow for Machine Learning” (O’Reilly, 2020).

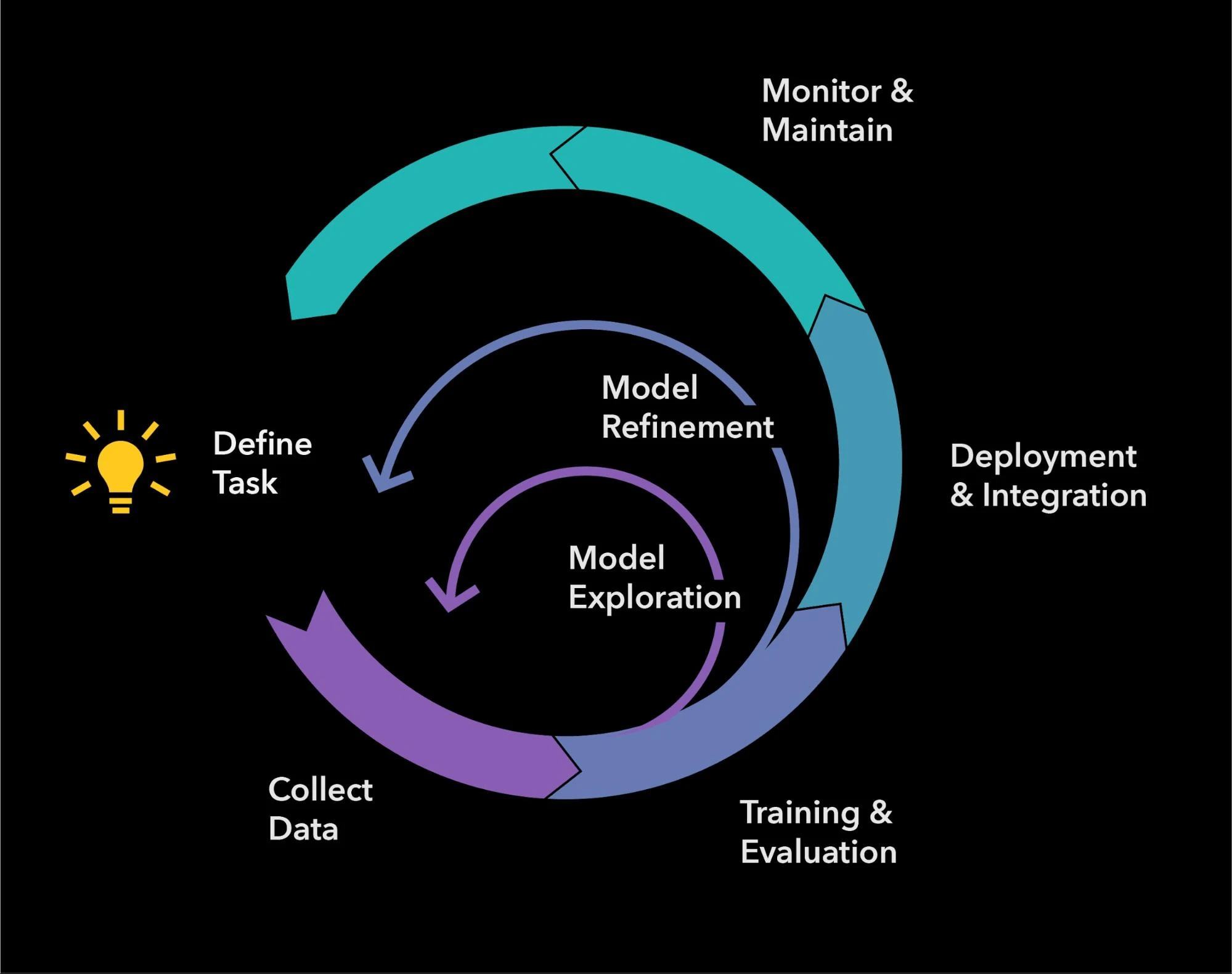

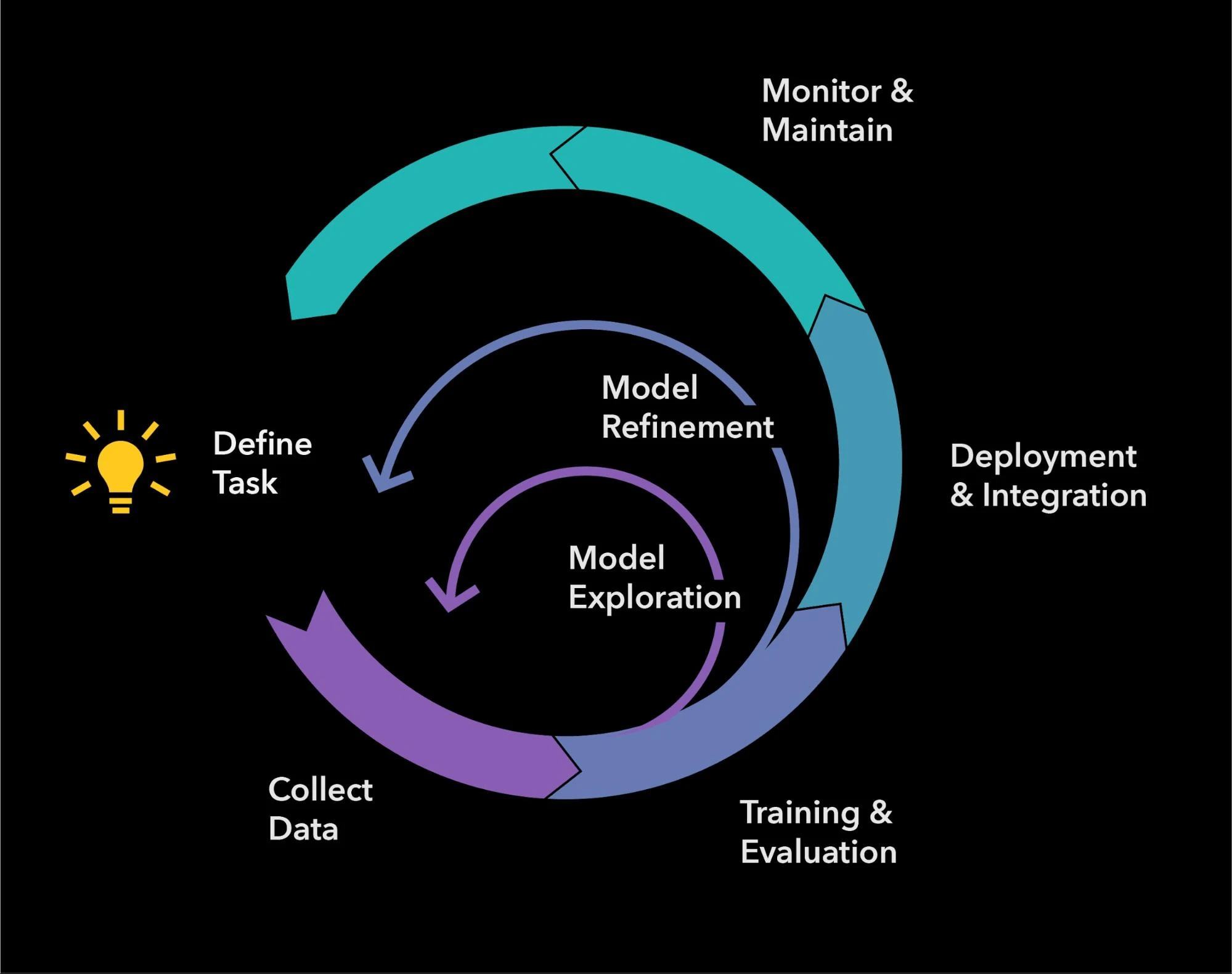

The Machine Learning (ML) Model Development Life Cycle (MDLC) can be divided broadly into two phases: a training phase, in which an ML model is fit to a specific dataset, and an inference phase, in which the trained model is applied to new data to produce predictions. Through a collaboration with our CTO Office’s Data Science team and AI Engineering Group, we built an internal Data Science Platform on top of Kubernetes to enable our AI engineers to train, deploy, and actively update their models in production.

Why Bloomberg needed an ML Inference Platform

The power of the Bloomberg Terminal comes from our ability to analyze and structure a huge amount of textual and financial data. Dealing with this volume of data requires increasingly sophisticated artificial intelligence techniques. Over the past decade, Bloomberg has increased its investment in machine learning and natural language processing (NLP) for document understanding, recommendation, question answering, information extraction, information retrieval, sentiment analysis, anomaly detection, financial modeling, and more. We have a large number of researchers and engineers working on these problems and their teams need to serve trained models in production. Production inference is a critical step for the success of the company’s ML projects.

Deploying and scaling these ML-driven applications in production was rarely a simple task. In addition to their own domain expertise, AI engineers needed to become experts in the following technical areas:

- Model serialization

- Model servers

- Micro-services

- HTTP/gRPC

- Container builds and deployments

- GPU scheduling

- Health checks

- Scalability

- Low latency/high throughput

- Disaster recovery

- Metrics and distributed tracing

This added significant overhead for the AI engineers who use ML inference services to enable new product offerings, and it took away time that could have been better spent building new models. In addition, this effort became fragmented over time, as different teams used different ML frameworks, inference protocols, and compute resources. With every team doing this its own way, the costs (e.g., time and power) were significant, and it often took a long time to get an inference service ready for production usage.

As a result, there was a clear need for a standard managed service that could help unify the model-deployment process across ML frameworks, shorten the time-to-market for serving ML models in production, safely roll out the models when they needed updating, and reduce the complexity of implementation.

An inference platform provides a set of tools designed to simplify and accelerate the deployment and serving of ML models. The platform manages CPU, GPU, and memory scheduling and utilization, as well as the complex technology stack underneath, removing barriers to deployment. Because fewer specialist skills are required, teams are now able to deploy their models more quickly, which increases their productivity.

Working with the community

ML model serving is not a problem unique to Bloomberg, so leveraging our expertise with Kubernetes, we collaborated with an open source community of AI experts from IBM, Microsoft, Google, NVIDIA, and Seldon.

IBM initially presented the idea of serving ML models in a serverless way using Knative at KubeCon + CloudNativeCon North America 2018. At the same time, Bloomberg was also exploring and experimenting with Knative for serving ML models with lambdas. We met up at the Kubeflow Contributor Summit 2019 in Sunnyvale, CA and compared notes. At the time, Kubeflow did not have a model serving component, so we decided to start a sub-project to provide a standardized, yet simple to use, ML model serving deployment solution for arbitrary ML frameworks.

Together, we developed KFServing, a cloud-native multi-framework ML model serving tool for serverless inference on Kubernetes. We truly believe that a community composed of diverse perspectives can build high-quality, production-ready open source software. KFServing debuted at KubeCon + CloudNativeCon North America 2019, where it attracted much interest from the end-user community.

KFServing is now KServe

KFServing has grown tremendously over the past two years, with many adopters running it in production. In order to further grow the KFServing project and broaden the contributor base, the Kubeflow Serving Working Group decided to move the KFServing GitHub repository out of the Kubeflow organization into an independent organization, KServe. This transition required significant effort to change code, update documentation, and avoid test regressions, and Bloomberg took the lead in ensuring that all of this work was completed.

Don’t miss Yuzhui Liu’s talk at 2:05 PM PDT on Tuesday, October 12, 2021, about “Serving Machine Learning Models at Scale Using KServe” during Kubernetes AI Day North America 2021 (co-located with KubeCon + CloudNativeCon North America 2021).

Bloomberg’s AI use cases and what we need from an ML Inference Platform

Our inference platform is designed to serve many teams across Bloomberg.

From an infrastructure perspective, our most important requirement is to support multi-tenancy. With dozens of teams making use of our inference platform, we need to ensure that teams do not impact each other’s services and that secrets are accessible only to the teams that own them. Furthermore, we also need to be able to enforce quota limits on compute resources based on each team’s budget.
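
Quota enforcement of this kind is conceptually similar to a Kubernetes ResourceQuota attached to each team's namespace. The sketch below only illustrates the admission logic and is not Bloomberg's or KServe's implementation: the `TeamQuota` class, the resource names (`cpu`, `memory_gib`, `gpu`), and the budget numbers are all hypothetical, and the sketch makes the simplifying choice of rejecting any resource a team has no budget entry for.

```python
from dataclasses import dataclass, field


@dataclass
class TeamQuota:
    """Hypothetical per-team compute budget, loosely modeled on a namespace quota."""

    hard: dict[str, float]  # maximum allowed amount per resource
    used: dict[str, float] = field(default_factory=dict)  # currently allocated amount

    def admit(self, request: dict[str, float]) -> bool:
        """Accept a deployment only if every requested resource stays within budget."""
        for resource, amount in request.items():
            limit = self.hard.get(resource, 0.0)  # no budget entry means no allowance (sketch choice)
            if self.used.get(resource, 0.0) + amount > limit:
                return False
        # Every check passed, so record the allocation.
        for resource, amount in request.items():
            self.used[resource] = self.used.get(resource, 0.0) + amount
        return True

    def release(self, request: dict[str, float]) -> None:
        """Return resources to the budget when a deployment is deleted."""
        for resource, amount in request.items():
            self.used[resource] = max(0.0, self.used.get(resource, 0.0) - amount)


# Hypothetical budget for one team.
team = TeamQuota(hard={"cpu": 16, "memory_gib": 64, "gpu": 2})
print(team.admit({"cpu": 4, "memory_gib": 16, "gpu": 1}))  # True
print(team.admit({"cpu": 4, "memory_gib": 16, "gpu": 2}))  # False: would need 3 GPUs
```

Checking every resource before recording any allocation keeps a rejected request from partially consuming the budget.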

To standardize model deployment across teams, we need a single inference protocol and deployment API. This way, teams can deploy models in the same way, regardless of which ML framework they are using (e.g., TensorFlow, PyTorch, or even a custom, in-house model framework). To accelerate the inference for large deep learning models, we also need to support the ability to serve models on GPUs, while managing these limited resources.
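
As an illustration of what a single inference protocol looks like on the wire, here is a minimal sketch that builds a request in the style of the V2 inference protocol supported by KServe: a `POST` to `/v2/models/<model_name>/infer` whose JSON body lists named input tensors, each with a shape, a datatype, and row-major data. Because the request format is defined by the protocol rather than by the ML framework, a client does not need to know whether the model behind the endpoint is TensorFlow, PyTorch, or an in-house framework. The helper function, model name, and input name are hypothetical, and only the `FP32` datatype is handled.

```python
import json


def build_v2_infer_request(model_name: str, input_name: str,
                           rows: list[list[float]]) -> tuple[str, str]:
    """Return (URL path, JSON body) for a V2-style inference call on a 2-D FP32 tensor."""
    # Shape is [batch_size, num_features]; every row must have the same width.
    width = len(rows[0]) if rows else 0
    if any(len(row) != width for row in rows):
        raise ValueError("all rows must have the same number of features")
    body = {
        "inputs": [
            {
                "name": input_name,
                "shape": [len(rows), width],
                "datatype": "FP32",
                # Tensor data is sent flattened in row-major order.
                "data": [value for row in rows for value in row],
            }
        ]
    }
    return f"/v2/models/{model_name}/infer", json.dumps(body)


# Hypothetical model and input names.
path, payload = build_v2_infer_request("sentiment-classifier", "input-0",
                                       [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]])
print(path)  # /v2/models/sentiment-classifier/infer
```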

After models are deployed to production, users need to be able to update the model or code. Canary rollouts are therefore important: they make a rollout safer by releasing a change to a small share of traffic first, rather than to all clients at once. We also need a consistent and reliable way to monitor models in production, with metrics and traces produced out of the box. Teams should also be able to use the same set of dashboards and tools to monitor for and investigate any performance bottlenecks.
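
KServe exposes canary rollouts through a `canaryTrafficPercent` setting on an InferenceService, with Knative splitting traffic between the old and new revisions. The sketch below shows the idea of a percentage split in isolation: hash a request key into one of 100 buckets and send the lowest `canary_percent` buckets to the new revision. The per-key stickiness (a given client always lands on the same revision) is an illustrative choice of this sketch, not a description of how KServe or Knative route requests, and the `route` function and the `stable`/`canary` labels are hypothetical.

```python
import hashlib


def route(request_key: str, canary_percent: int) -> str:
    """Send roughly `canary_percent`% of request keys to the canary revision."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    # Hash the key into one of 100 buckets; the same key always lands in the same bucket.
    digest = hashlib.sha256(request_key.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100
    return "canary" if bucket < canary_percent else "stable"


# Route about 10% of hypothetical client IDs to the new model version.
hits = sum(route(f"client-{i}", 10) == "canary" for i in range(10_000))
print(hits)  # a count near 1,000 (about 10%)
```

Raising `canary_percent` step by step while watching the new revision's metrics is what makes the rollout gradual; setting it back to 0 sends all traffic to the stable revision again.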

With teams deploying more and more models, the traditional “single model/single inference service” deployment pattern was no longer scalable or cost-effective. Some teams want to deploy hundreds or thousands of small models, and it is cost-prohibitive to load them all at once or to create a container to serve every trained model. Yet another use case is sharing costly GPUs among multiple models.
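
One common way to address this is multi-model serving: many models share one server process, models are loaded on demand, and the least recently used ones are evicted when memory runs out. The class below is a minimal sketch of that caching idea under a fixed memory budget; it is not KServe's multi-model serving implementation, and `ModelCache`, the loader callback, and the model sizes are hypothetical.

```python
from collections import OrderedDict
from typing import Any, Callable


class ModelCache:
    """Keep at most `capacity_mb` of models loaded, evicting the least recently used."""

    def __init__(self, capacity_mb: int, loader: Callable[[str], tuple[Any, int]]):
        self.capacity_mb = capacity_mb
        self.loader = loader  # hypothetical: returns (model_object, size_mb)
        self._models: "OrderedDict[str, tuple[Any, int]]" = OrderedDict()
        self.used_mb = 0

    def get(self, name: str) -> Any:
        if name in self._models:
            self._models.move_to_end(name)  # mark as most recently used
            return self._models[name][0]
        model, size_mb = self.loader(name)  # cache miss: load on demand
        if size_mb > self.capacity_mb:
            raise MemoryError(f"{name} ({size_mb} MB) exceeds cache capacity")
        # Evict least recently used models until the new one fits.
        while self.used_mb + size_mb > self.capacity_mb:
            _, (_, evicted_size) = self._models.popitem(last=False)
            self.used_mb -= evicted_size
        self._models[name] = (model, size_mb)
        self.used_mb += size_mb
        return model

    def loaded(self) -> list[str]:
        """Model names from least to most recently used."""
        return list(self._models)


# Hypothetical models of 400 MB each, served from a 1,000 MB budget.
sizes = {"model-a": 400, "model-b": 400, "model-c": 400}
cache = ModelCache(capacity_mb=1000, loader=lambda n: (f"<{n}>", sizes[n]))
cache.get("model-a")
cache.get("model-b")
cache.get("model-a")  # model-a is now the most recently used
cache.get("model-c")  # does not fit, so model-b is evicted
print(cache.loaded())  # ['model-a', 'model-c']
```

Evicting by recency keeps frequently requested models warm while rarely used ones fall out of memory; a production system would also need to handle concurrent requests and the latency of reloading an evicted model.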

In a future blog post, we will share how KServe fulfills our requirements and more details about the work that went into integrating KServe internally to address many security, monitoring, and internal deployment process requirements.

Takeaways

Taking AI applications to production creates multiple challenges that do not exist during the training phase. We started our production journey by working with the open source community to build a highly scalable, standards-based model inference platform on Kubernetes for Trusted AI.

We are glad to share the journey of building our inference platform with everyone, and we thank all the awesome KServe contributors who helped make it a robust ML inference platform for everyone.

If you want to join us, check out open roles with Bloomberg’s Data Science & Compute Infrastructure team or AI Engineering group.

Join us to build a Trusted and Scalable Model Inference Platform on Kubernetes

Please join us on the KServe GitHub repository, try it out, give us feedback, and raise issues.

Special Thanks…

Special thanks to Yao Weng, Sukumar Gaonkar, Qi Chu, Xin Fu, Milan Goyal, Ania Musial, Keith Laban, David Eis, Shefaet Rahman, Anju Kambadur, Kevin P. Fleming, and Alyssa Wright for their contributions, support, and input to this article.

About our Authors

Dan Sun is a Tech Lead for Serverless Infrastructure on the Data Science & Compute Infrastructure team at Bloomberg, where he is focused on designing and building the company’s managed ML inference solution. He loves contributing to open source and is one of the co-creators of and core contributors to the KServe project.

Yuzhui Liu is the Team Lead of the Data Science Runtimes team at Bloomberg. She is passionate about building infrastructure for machine learning applications, and is one of the core contributors to the KServe project.