SemEval-2018: We Know What the Tweet Said. But What Does It Mean?

June 05, 2018

Each year, the International Workshop on Semantic Evaluation (SemEval) is held alongside a major computational linguistics conference; this year, it is co-located with the Annual Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL HLT). And each year, the workshop organizers pose challenges to the computational linguistics community that are meant to help advance the understanding of text. This year, one of those tasks asked teams to evaluate the intensity of emotion, the intensity of sentiment, and the valence of tweets.

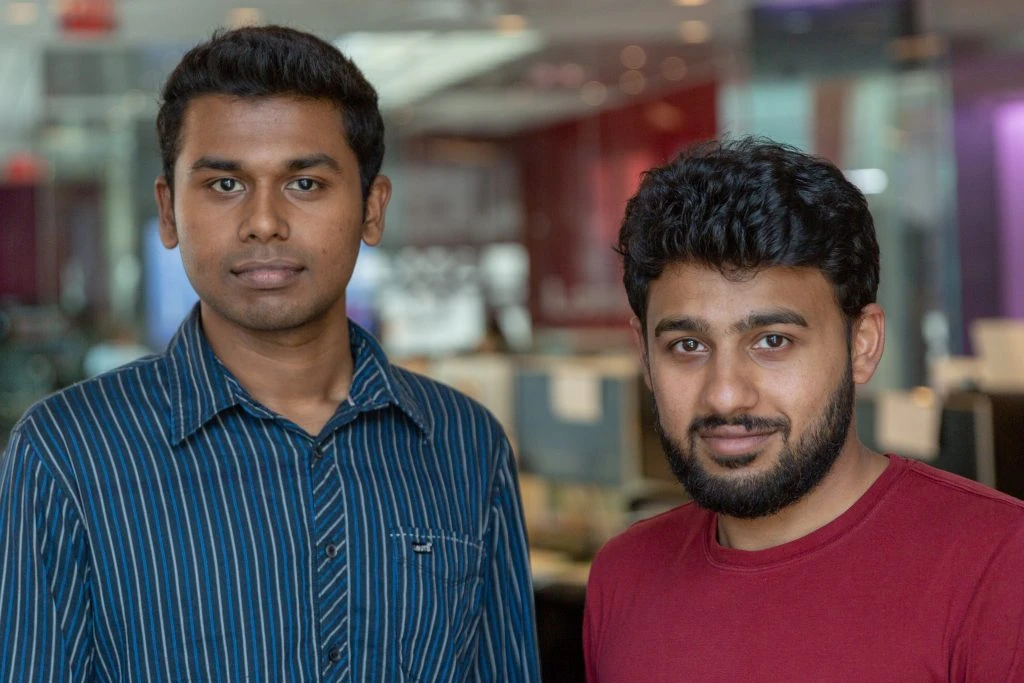

When Bloomberg software engineers and research scientists Venkatesh Elango and Karan Uppal saw the task, they knew they had to give it a shot. “It just was so very close to what we do,” says Uppal. “We could make some modifications to our existing systems and apply it to the task at hand. It was an excellent opportunity to showcase some of our work.”

The task even used a data set similar in size to the ones they often work with: between 2,000 and 3,000 tweets. Of the roughly 60 teams that attempted the task, Uppal and Elango placed in the top 15 and were asked to write up their methodology and results. Their paper, “RIDDL at SemEval-2018 Task 1: Rage Intensity Detection with Deep Learning,” will be presented this afternoon at the SemEval-2018 workshop at NAACL HLT 2018 in New Orleans.

Both Uppal and Elango work in the engineering group that does research and development for Bloomberg’s News products. Their overarching task is to analyze unstructured data and turn it into signals that might be useful to the company’s clients across the financial industry. They’ve spent a considerable amount of time analyzing the sentiment of tweets, which clients may incorporate into their trading strategies.

Uppal notes that there is always room to improve the methods for determining the sentiment of tweets about equities. He says that the very nature of the models ensures that they degrade over time. The things people talk about change, the language they use changes, and new concepts are introduced to the market and to the world. Just a few years ago, for example, “Brexit” would have meant little to the model. And while “GDPR” is not a new acronym, it wasn’t widely used until recently.
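One simple way to watch for this kind of drift is to measure how many tokens in recent tweets a model's training vocabulary has never seen. The sketch below is an illustration under stated assumptions, not the team's monitoring setup: the regex tokenizer, the helper names, and the example tweets are all invented, and a rising out-of-vocabulary rate is only a rough hint that a model may need retraining.

```python
import re
from collections import Counter

# Hypothetical tokenizer: words plus optional #hashtag, @mention or $cashtag prefix.
TOKEN_RE = re.compile(r"[#@$]?\w+")


def tokenize(text):
    """Lowercase a tweet and split it into tokens."""
    return TOKEN_RE.findall(text.lower())


def build_vocab(tweets, min_count=1):
    """Tokens seen at least `min_count` times in the training tweets."""
    counts = Counter(tok for t in tweets for tok in tokenize(t))
    return {tok for tok, c in counts.items() if c >= min_count}


def oov_rate(tweets, vocab):
    """Fraction of tokens in `tweets` that the vocabulary has never seen."""
    tokens = [tok for t in tweets for tok in tokenize(t)]
    if not tokens:
        return 0.0
    return sum(1 for tok in tokens if tok not in vocab) / len(tokens)


def new_terms(tweets, vocab, top_n=5):
    """Most frequent unseen tokens: candidates for emerging concepts."""
    counts = Counter(tok for t in tweets for tok in tokenize(t) if tok not in vocab)
    return [tok for tok, _ in counts.most_common(top_n)]


# Invented example tweets: an "older" training period and a "newer" one.
old_tweets = ["stocks rally as the pound gains", "the pound falls on rate fears"]
new_tweets = ["brexit vote sinks the pound", "gdpr fines loom for tech stocks"]

vocab = build_vocab(old_tweets)
print(f"OOV rate: {oov_rate(new_tweets, vocab):.2f}")
print("New terms:", new_terms(new_tweets, vocab))
```

In practice the same check would be run on a rolling window of incoming tweets, so a model's blind spots show up as a trend rather than a single number.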

Then there are changes to Twitter itself. Tweets used to be limited to 140 characters, so researchers could safely assume that if they identified a company name within a tweet, the rest of the tweet would be about the company. Now that the maximum length of a tweet has doubled to 280 characters, “We’re seeing multiple companies, each with different sentiments in the same tweet,” says Uppal. “We want to be able to use a model that wouldn’t take everything in a tweet, but would understand which words are relevant to a particular company.”
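To make the problem concrete, here is a deliberately naive, hypothetical baseline, not the model described in the paper: a toy sentiment lexicon with invented weights, in which each sentiment word is credited to the nearest company mention rather than to the tweet as a whole. The tickers `$ACME` and `$INITECH` are made up.

```python
import re

# Hypothetical toy lexicon; a real system would learn such weights from labeled data.
LEXICON = {"beats": 1.0, "soars": 1.0, "strong": 0.5,
           "misses": -1.0, "plunges": -1.0, "weak": -0.5}


def tokenize(text):
    """Lowercase and split into words, keeping a leading $ on cashtags."""
    return re.findall(r"\$?\w+", text.lower())


def whole_tweet_score(text):
    """Naive approach: one sentiment score for the entire tweet."""
    return sum(LEXICON.get(tok, 0.0) for tok in tokenize(text))


def per_target_scores(text, targets):
    """Credit each sentiment word to the nearest mention of a target."""
    tokens = tokenize(text)
    targets = [t.lower() for t in targets]
    positions = {t: [i for i, tok in enumerate(tokens) if tok == t] for t in targets}
    scores = {t: 0.0 for t in targets}
    for i, tok in enumerate(tokens):
        weight = LEXICON.get(tok)
        if weight is None:
            continue
        # Distance from this sentiment word to each target's closest mention.
        candidates = [(min(abs(i - p) for p in pos), t)
                      for t, pos in positions.items() if pos]
        if candidates:
            _, nearest = min(candidates)
            scores[nearest] += weight
    return scores


tweet = "$ACME beats estimates while $INITECH plunges after weak guidance"
print(whole_tweet_score(tweet))
print(per_target_scores(tweet, ["$ACME", "$INITECH"]))
```

Scoring the whole tweet blends a positive story and a negative one into a single, mildly negative number, while the per-company split keeps them apart. A nearest-mention rule breaks down quickly on real text (negation, pronouns, long-range references), which is why a learned model that picks out the words relevant to each company is attractive.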

The SemEval task required the researchers to build a complex model with limited data, which is a common problem. “With more complicated models, you often don’t have enough data to run them,” says Uppal. “Getting data hand-labeled – so that it can be used to train a model – is expensive both in time and money. Our challenge is to figure out how to leverage relatively small amounts of data, yet apply more complicated models.”

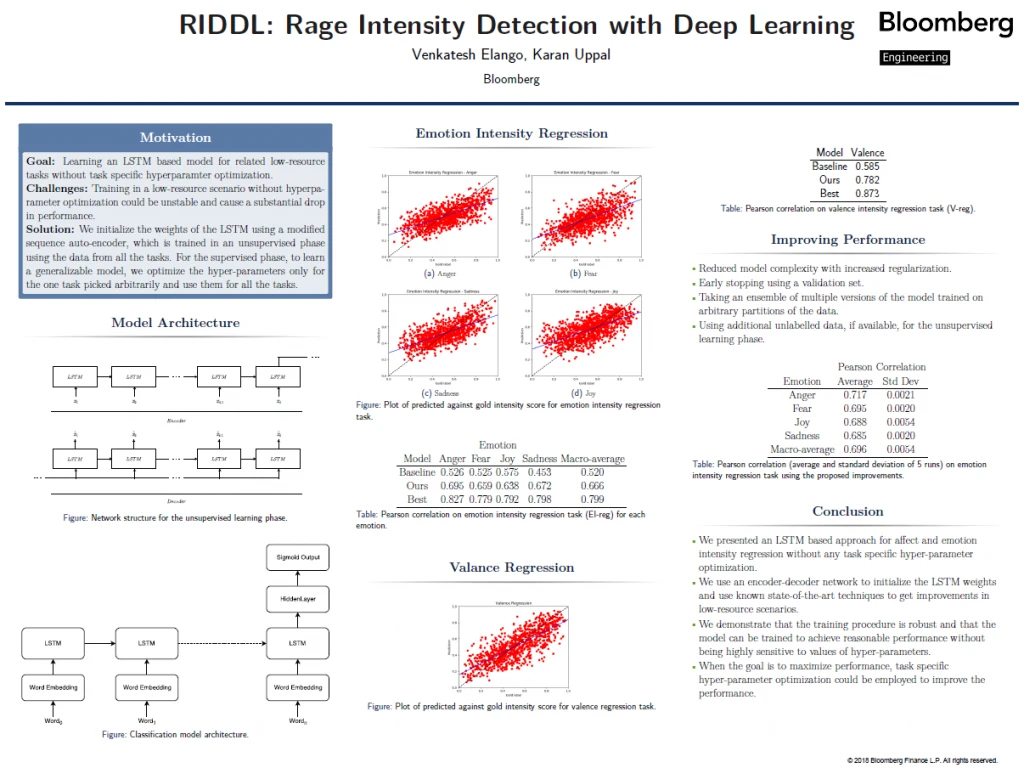

The team began by having the algorithm break apart unlabeled tweets and attempt to reconstruct them. The algorithm would start with a random representation of each word, combine those word representations into a representation of the whole sentence, and then decode that back into words and, eventually, into a tweet. “By repeating this over and over, the model can learn which words go together based on co-location,” says Uppal. The goal: for the reconstructed tweet to match the original one.
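The reconstruction idea can be sketched as a small autoencoder. To keep the example short and dependency-free, it assumes a much simpler architecture than the one the team used: a tweet is encoded as the average of its word vectors, and the decoder tries to recover the tweet's words from that single vector (a bag-of-words reconstruction that ignores word order). The class name, example tweets, vector size, and learning rate are all illustrative choices.

```python
import math
import random


def softmax(z):
    """Numerically stable softmax over a list of logits."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


class BagOfWordsAutoencoder:
    """Encode a tweet as the mean of its word vectors, then decode that
    vector into a probability distribution over the vocabulary."""

    def __init__(self, vocab, dim=8, seed=0):
        rng = random.Random(seed)
        self.index = {w: i for i, w in enumerate(vocab)}
        self.dim = dim
        # Word vectors start out random and are refined by reconstruction.
        self.E = [[rng.gauss(0, 0.1) for _ in range(dim)] for _ in vocab]
        # Decoder weights and biases: one row per vocabulary word.
        self.W = [[rng.gauss(0, 0.1) for _ in range(dim)] for _ in vocab]
        self.b = [0.0] * len(vocab)

    def encode(self, ids):
        """Tweet vector = average of its word vectors."""
        return [sum(self.E[i][k] for i in ids) / len(ids) for k in range(self.dim)]

    def decode(self, h):
        """Probability of each vocabulary word given the tweet vector."""
        logits = [sum(w * x for w, x in zip(row, h)) + bias
                  for row, bias in zip(self.W, self.b)]
        return softmax(logits)

    def loss(self, ids):
        """Average negative log-likelihood of the tweet's own words."""
        p = self.decode(self.encode(ids))
        return -sum(math.log(p[i]) for i in ids) / len(ids)

    def step(self, ids, lr=0.5):
        """One stochastic gradient descent update on a single tweet."""
        n = len(ids)
        h = self.encode(ids)
        p = self.decode(h)
        # Target distribution: each word's share of the tweet.
        y = [0.0] * len(p)
        for i in ids:
            y[i] += 1.0 / n
        g = [pv - yv for pv, yv in zip(p, y)]  # gradient w.r.t. the logits
        # Gradient w.r.t. the tweet vector, computed before W is updated.
        gh = [sum(g[v] * self.W[v][k] for v in range(len(g))) for k in range(self.dim)]
        for v, gv in enumerate(g):
            self.b[v] -= lr * gv
            for k in range(self.dim):
                self.W[v][k] -= lr * gv * h[k]
        # Each occurrence of a word contributed E[i] / n to the mean.
        for i in ids:
            for k in range(self.dim):
                self.E[i][k] -= lr * gh[k] / n


# Invented unlabeled tweets; no sentiment labels are used at this stage.
tweets = [
    "stocks rally on strong earnings",
    "strong earnings lift stocks",
    "oil prices slump on weak demand",
    "weak demand hits oil prices",
]
vocab = sorted({w for t in tweets for w in t.split()})
model = BagOfWordsAutoencoder(vocab)
data = [[model.index[w] for w in t.split()] for t in tweets]

before = sum(model.loss(ids) for ids in data) / len(data)
for _ in range(200):
    for ids in data:
        model.step(ids)
after = sum(model.loss(ids) for ids in data) / len(data)
print(f"reconstruction loss: {before:.3f} -> {after:.3f}")
```

Because every word in a tweet has to be recoverable from the same averaged vector, training tends to give words that appear together compatible representations. This is the unlabeled pretraining step; a small labeled set would then be used to map the learned representations to sentiment scores.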

This process gives the model a general understanding of how tweets are written before it sees any labels. Only when it’s time to actually assign sentiment does the model pull in labeled data. On one task, says Elango, the baseline system scored 58.5. He and Uppal achieved 78.2, and the best submitted method scored 87.3.
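These scores are easiest to read as Pearson correlations between predicted and gold intensity scores, multiplied by 100; that reading is an assumption here, based on Pearson correlation being the official metric for the task's regression subtasks. Under that assumption, the comparison works out as follows. The toy gold and predicted scores are invented for illustration.

```python
import math


def pearson(xs, ys):
    """Pearson correlation between gold and predicted intensity scores."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


# Scores from the article, assumed to be Pearson r x 100.
baseline, riddl, best = 58.5, 78.2, 87.3
print(f"gain over baseline: {riddl - baseline:.1f} points")
print(f"gap to best system: {best - riddl:.1f} points")

# Invented gold and predicted valence scores in [0, 1].
gold = [0.10, 0.35, 0.50, 0.80, 0.95]
pred = [0.20, 0.30, 0.55, 0.70, 0.90]
print(f"toy system score: {100 * pearson(gold, pred):.1f}")
```

Pearson correlation rewards getting the relative ordering and spacing of intensities right rather than the exact values, so a system can score well even if its predictions are shifted or scaled relative to the gold ratings.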

Because the task is so similar to the work Uppal and Elango are already doing, they were eager to see the techniques used by the other teams. “We’ve reviewed most of them,” says Uppal. “We’re trying to come up with a way that we can better leverage the model with the unlabeled data or with news.” The aim is an approach that could have even more impact in helping their clients refine their trading strategies.