NLP Researchers & Engineers from Bloomberg’s AI Group & CTO Office Publish 8 Papers at ACL 2019

July 29, 2019

At the 57th Annual Meeting of the Association for Computational Linguistics (ACL 2019) this week in Florence, Italy, researchers from Bloomberg’s AI Group and Office of the CTO are showcasing their expertise in natural language processing (NLP) and computational linguistics by publishing 8 papers at the conference. Through these papers, the authors and their collaborators highlight a variety of NLP applications in finance, novel approaches and improved models used in key tasks, and other advances to the state of the art in the field of computational linguistics.

We asked some of the authors to summarize their research and explain why the results were notable:

Monday, July 29, 2019

Poster Session 1B: Sentiment Analysis and Argument Mining (10:30-12:10 CEST)

Modeling financial analysts’ decision making via the pragmatics and semantics of earnings calls

Katie Keith (UMass) and Amanda Stent (Bloomberg)

Please summarize your research.

Amanda: We are interested in how different stakeholders in finance reveal their decisions or decision leanings through the language they use. In this paper, we examined how the language that financial analysts use during earnings calls can indicate their pre-call bullishness or bearishness about a company, and their post-call decisions about company price targets. Earnings call transcripts marked up by our models could help finance professionals better understand the Q&A portions of earnings calls.

Why are these results notable? How does it advance the state-of-the-art in the field of computational linguistics?

Amanda: Earnings calls are a very understudied, but rich, type of discourse. By analyzing them, NLP researchers can better model dialog that is only semi-cooperative, and how this affects the interaction, the language and the speech.

Poster Session 3E: Social Media (16:00-17:40 CEST)

Multi-task Pairwise Neural Ranking for Hashtag Segmentation

Mounica Maddela and Wei Xu (Ohio State University) and Daniel Preoţiuc-Pietro (Bloomberg)

Please summarize your research.

Daniel: Hashtags are increasingly used in social media to encode entire phrases that aim to tag and index specific content (e.g., #songsongaddafisitunes -> songs on gaddafi’s itunes). However, running words together in this way makes it harder to parse hashtags automatically so that they can aid search or classification. We introduced a new data set for the task of hashtag segmentation and built a new state-of-the-art method for splitting hashtags into words. The method rests on the intuition that exploiting the partial ordering between all possible segmentations provides more information than only knowing the correct segmentation.
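To make the idea of a partial ordering over segmentations concrete, here is a minimal sketch that enumerates the candidate splits of a hashtag and builds (better, worse) training pairs. It is an illustration only, not the authors' model: the hashtag `nowplaying`, the function names, and the choice to order candidates by how many word boundaries disagree with the correct segmentation are all assumptions made for this example.

```python
from itertools import combinations

def segmentations(tag, max_words=4):
    """Yield every split of `tag` into 1..max_words contiguous pieces."""
    n = len(tag)
    for k in range(max_words):  # k = number of cut points
        for cuts in combinations(range(1, n), k):
            bounds = (0, *cuts, n)
            yield tuple(tag[i:j] for i, j in zip(bounds, bounds[1:]))

def cut_points(seg):
    """Character offsets at which a segmentation places a word boundary."""
    offsets, pos = set(), 0
    for word in seg[:-1]:
        pos += len(word)
        offsets.add(pos)
    return offsets

def ranked_pairs(tag, gold, max_words=4):
    """Build (better, worse) pairs; 'better' disagrees with gold on fewer cuts.

    Assumption: boundary disagreement is used as the ordering criterion
    purely for illustration.
    """
    gold_cuts = cut_points(gold)
    scored = [(len(cut_points(s) ^ gold_cuts), s)
              for s in segmentations(tag, max_words)]
    return [(a, b) for da, a in scored for db, b in scored if da < db]

# Hypothetical example hashtag and its correct segmentation.
pairs = ranked_pairs("nowplaying", ("now", "playing"), max_words=3)
```

A single labeled hashtag yields many ordered pairs rather than one positive example, which is the extra training signal a pairwise ranker can use.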

Why are these results notable? How does it advance the state-of-the-art in the field of computational linguistics?

Daniel: This state-of-the-art algorithm, which is publicly available, is a very useful pre-processing step in several key NLP tasks, including named entity recognition, sentiment analysis, and topic classification of tweets – all of which are also products currently supported on the Bloomberg Terminal.

Tuesday, July 30, 2019

Oral Presentations Session 4D: Social Media 1 (11:10-11:30 CEST)

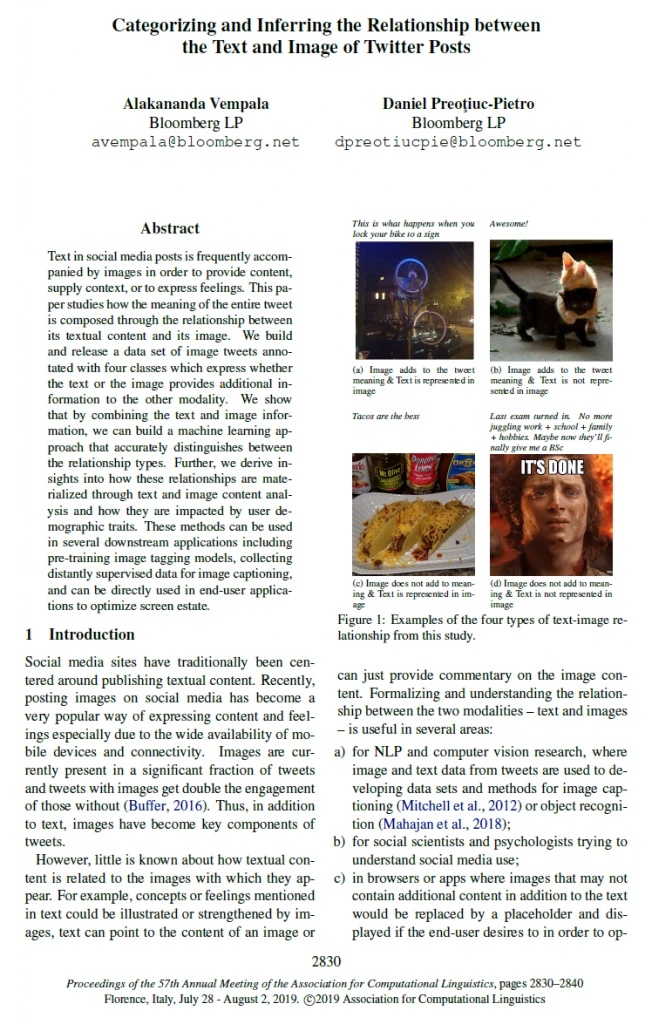

Categorizing and Inferring the Relationship between the Text and Image of Twitter Posts

Alakananda Vempala and Daniel Preoţiuc-Pietro (Bloomberg)

Please summarize your research.

Daniel: The use of images in social media is widespread. Yet, few studies have attempted to uncover how the text and images in a tweet are related and how they complement each other to form a unified meaning. This paper, inspired by a real Bloomberg Terminal use case, aims to study whether an image contributes additional information to the text of a tweet or if it overlaps with the text content. We present a corpus of 4,471 tweets annotated with their text-image relationship, along with new models that automatically infer this relationship.

Why are these results notable? How does it advance the state-of-the-art in the field of computational linguistics?

Daniel: This paper aims to offer a stronger foundation for several applications, including systems that use large amounts of social media data to train models for image captioning, object recognition, and creative text generation. It can also be used directly to optimize screen real estate by hiding image content that does not provide any additional contextual information.

Oral Presentations Session 4D: Social Media 1 (11:43-11:56 CEST)

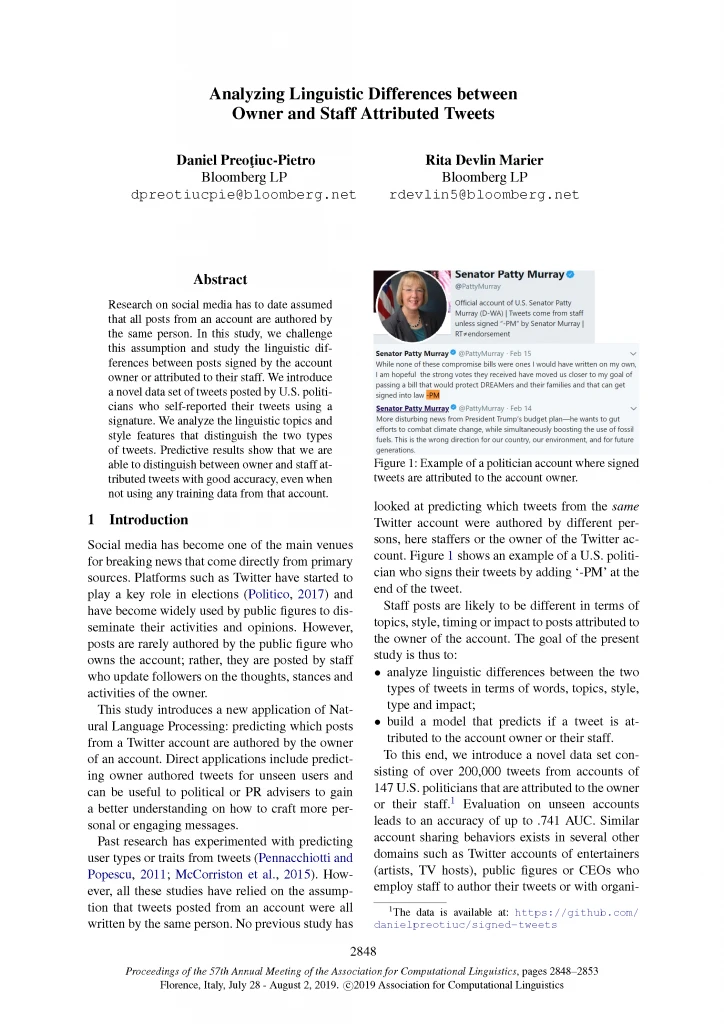

Analyzing Linguistic Differences between Owner and Staff Attributed Tweets

Daniel Preoţiuc-Pietro and Rita Devlin Marier (Bloomberg)

Please summarize your research.

Daniel: We studied differences in the textual content of tweets written by politicians and staffers who share the same Twitter account. We assembled a data set for this that was inspired by former President Barack Obama’s use of the ‘-bo’ signature to indicate his personal tweets, a practice which has been adopted by many politicians. We found that politicians tend to add a signature when their tweets include more personal pronouns, possessives, or affect (both positive and negative), or when they express congratulations, gratitude or sorrow. Our algorithm can predict signed tweets with good accuracy even for users it has never seen in training.

Why are these results notable? How does it advance the state-of-the-art in the field of computational linguistics?

Daniel: Our study aimed to challenge a tacit assumption made in a number of papers, namely that all tweets from a single account are authored by the same person. We hope this insight will be useful to other researchers in the tasks they are trying to solve, and that it could help identify when accounts are hacked, when politicians change staff, or when they update their stance on policy.

Wednesday, July 31, 2019

Oral Presentations Session 7D: Social Media 2 (14:10-14:30 CEST)

Automatically Identifying Complaints in Social Media

Daniel Preoţiuc-Pietro (Bloomberg), Mihaela Găman (Politehnica Univ. Bucharest), and Nikolaos Aletras (University of Sheffield)

Please summarize your research.

Daniel: Complaints are a means to signal a breach in our expectations about an event, company or person. On Twitter, complaints are usually addressed to the company or brand responsible for the breach, in order to damage its reputation and/or to demand reparation. We created a new data set for the task of complaint prediction by focusing on Twitter utterances addressed to customer support handles. We built machine learning models for this task that achieve high accuracy and are transferable across domains.

Why are these results notable? How does it advance the state-of-the-art in the field of computational linguistics?

Daniel: Complaints in social media had not yet been studied using statistical NLP approaches. We hope that this paper will encourage others to study this task, which is related to, yet distinct from, the more popular sentiment analysis problem. Plus, it has direct applications in aiding customer support on social media.

Oral Presentations Session 8E: Information Extraction and Text Mining 4 (17:00-17:13 CEST)

A Semi-Markov Structured Support Vector Machine Model for High-Precision Named Entity Recognition

Ravneet Arora, Chen-Tse Tsai, Ketevan Tsereteli, and Anju Kambadur (Bloomberg), and Yi Yang (ASAPP)

Please summarize your research.

Ravneet: This paper introduces a new Neural Semi-Markov structured SVM model for Named Entity Recognition (NER) that controls the precision-recall trade-off by assigning different weights to different types of errors during training. We compared our approach with other strong baselines and achieved a better precision-recall trade-off at various precision levels.
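The idea of weighting error types differently during training can be sketched with a cost-augmented structured hinge loss. This is a simplified illustration, not the paper's model: the `span_cost` and `structured_hinge` names, the default weights, and the explicit candidate list (standing in for semi-Markov inference over neural scores) are all assumptions.

```python
def span_cost(gold, pred, fp_weight=2.0, fn_weight=1.0):
    """Weighted count of span errors; spans are (start, end, label) tuples.

    Assumption: a false positive (spurious span) costs `fp_weight` and a
    false negative (missed span) costs `fn_weight`. Setting fp_weight
    above fn_weight pushes training toward higher precision.
    """
    gold, pred = set(gold), set(pred)
    return fp_weight * len(pred - gold) + fn_weight * len(gold - pred)

def structured_hinge(score, gold, candidates, **weights):
    """Cost-augmented hinge loss over an explicit list of candidate outputs."""
    worst = max(score(c) + span_cost(gold, c, **weights) for c in candidates)
    return max(0.0, worst - score(gold))

# Hypothetical toy example: one gold entity and three candidate outputs.
gold = ((0, 2, "ORG"),)
missed = ()                                   # one false negative
spurious = ((0, 2, "ORG"), (3, 4, "PER"))     # one false positive
flat_score = lambda spans: 0.0                # untrained model: all scores equal

loss = structured_hinge(flat_score, gold, [gold, missed, spurious])
```

With equal model scores, the spurious output incurs the larger penalty, so gradient updates would push the model away from false positives more strongly than from misses.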

Why are these results notable? How does it advance the state-of-the-art in the field of computational linguistics?

Ravneet: The precision-recall trade-off for NER is not very well studied, even though controlling it is critical in many applications. Our work introduces a novel approach to tune precision for NER at training time, which outperforms other competitive inference-time baselines. The proposed model also offers promising future extensions in terms of directly optimizing other metrics such as Recall and F-beta.

Friday, August 2, 2019

ACL 2019 Workshop – Deep Learning and Formal Languages: Building Bridges

Poster Spotlight (9:45-9:51 CEST)

Grammatical Sequence Prediction for Real-Time Neural Semantic Parsing

Chunyang Xiao, Christoph Teichmann, and Konstantine Arkoudas

Please summarize your research.

Chunyang: A lot of current research is focused on using the sequence-to-sequence (seq2seq) approach from machine translation to enable machines to understand natural language by “translating” natural language into machine-readable representations, a technique known as semantic parsing. For the parser to be sufficiently fast, we need to limit the number of choices that the model has at every step of the translation. This paper shows how to exploit the fact that we know which semantic representations “make sense,” i.e., which ones follow the required patterns of utterance semantics. This enables us to restrict the prediction choices, which in turn greatly speeds up semantic parsing.
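Restricting the prediction choices at each step can be illustrated with a toy grammar-constrained greedy decoder. This is a rough sketch under stated assumptions, not the paper's method: the successor table `ALLOWED_NEXT`, the token names, and the `toy_scores` function (standing in for a neural decoder) are all hypothetical.

```python
import math

# Hypothetical toy grammar: which tokens may follow each token.
ALLOWED_NEXT = {
    "<s>": {"price(", "news("},
    "price(": {"TICKER"},
    "news(": {"TICKER", "TOPIC"},
    "TICKER": {")"},
    "TOPIC": {")"},
    ")": {"</s>"},
}

def constrained_greedy_decode(step_scores, max_len=10):
    """Greedy decoding that only scores tokens the grammar permits."""
    output, prev = [], "<s>"
    for _ in range(max_len):
        allowed = ALLOWED_NEXT[prev]
        # The scorer is asked only about allowed tokens, not the full vocabulary.
        scores = step_scores(output, allowed)
        prev = max(allowed, key=lambda t: scores.get(t, -math.inf))
        if prev == "</s>":
            break
        output.append(prev)
    return output

def toy_scores(prefix, allowed):
    """Stand-in for a neural decoder step; fixed preferences for illustration."""
    preference = {"news(": 0.9, "price(": 0.4, "TOPIC": 0.8,
                  "TICKER": 0.3, ")": 1.0, "</s>": 1.0}
    return {tok: preference[tok] for tok in allowed}

result = constrained_greedy_decode(toy_scores)
```

Because each step considers at most two tokens here instead of the whole output vocabulary, every decoded sequence is well formed by construction and each step does less work.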

Why are these results notable? How does it advance the state-of-the-art in the field of computational linguistics?

Chunyang: The paper develops a generally applicable technique for real-time neural semantic parsing. We were able to speed up a seq2seq model by more than 70% with our technique, making it feasible for real-time question answering within a complex data pipeline.