Using Phrases, Rather than Words, to Help Algorithms Derive Keywords & Summaries

June 04, 2018

It’s difficult enough for a computer to understand a simple sentence. So how do you train it to recognize even the most representative keywords of a document that might run 10 or 20 pages in length?

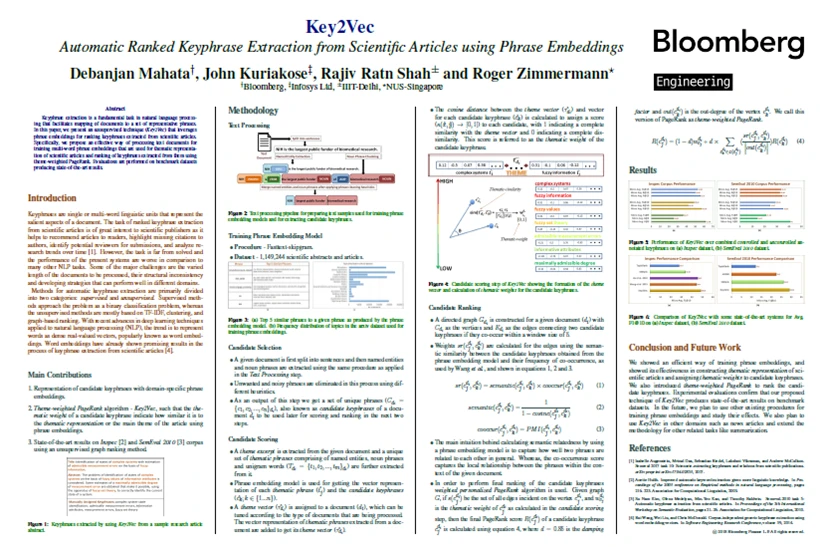

Debanjan Mahata, a senior machine learning engineer at Bloomberg, wrestles with just this problem in his research paper titled “Key2Vec: Automated Ranked Keyphrase Extraction from Scientific Articles Using Phrase Embeddings,” which is being presented later today at the 16th Annual Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL HLT), being held in New Orleans this week.

Mahata’s is the second of three Bloomberg-authored papers to be presented at the conference this year.

The initial goal of his work was to extract keywords from a corpus of more than 5 million scientific articles, 30% of which had no keywords. The extracted keywords could then be shown for the articles that lacked them and used for search indexing and content-based recommendations.

By modifying the popular TextRank model with a technique he calls Key2Vec, Mahata and his co-authors were able to improve the accuracy of keywords attributed to longer documents. His work shows that even without human-annotated data, it’s possible to train domain-specific keyphrase embeddings and use them to filter and rank phrases in a document. Keyword extraction, says Mahata, is one of the fundamental challenges in natural language processing (NLP), but one where the field still struggles to match the performance achieved on other fundamental tasks, such as part-of-speech tagging and named entity recognition. It’s also an important step toward eventually being able to summarize a longer document and add meaningful metadata that helps in search and information retrieval.

Mahata has never attended NAACL before, but he’s excited to participate this year. Says Mahata: “This is one of the top conferences in natural language processing.”

In order to train an algorithm to come up with good keywords without human intervention, says Mahata, the general approach has been to create a graph of all the candidate words in the document based on how closely they co-occur with each other. Unsupervised graph ranking methods that are mostly variants of the PageRank algorithm are generally used for scoring these words. The top-ranked words are then considered to be the representative keywords for the given document. The recent integration of word embeddings with this framework has been shown to produce promising results.
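The general approach described above can be illustrated with a minimal sketch. It assumes a toy token list, a co-occurrence window of two words, and a plain PageRank with a damping factor of 0.85; the function names and parameter values are illustrative choices, not details taken from Mahata's paper.

```python
from collections import defaultdict


def cooccurrence_graph(tokens, window=2):
    """Link two words if they appear within `window` positions of each other."""
    graph = defaultdict(set)
    for i, word in enumerate(tokens):
        for other in tokens[i + 1 : i + 1 + window]:
            if other != word:
                graph[word].add(other)
                graph[other].add(word)
    return graph


def pagerank(graph, damping=0.85, iterations=50):
    """Unweighted PageRank computed by simple power iteration."""
    nodes = list(graph)
    scores = {n: 1.0 / len(nodes) for n in nodes}
    for _ in range(iterations):
        scores = {
            n: (1 - damping) / len(nodes)
            + damping * sum(scores[m] / len(graph[m]) for m in graph[n])
            for n in nodes
        }
    return scores


# Toy "document": a real system would first filter candidate words.
tokens = (
    "keyphrase extraction ranks candidate words "
    "graph ranking scores candidate words"
).split()

graph = cooccurrence_graph(tokens)
scores = pagerank(graph)

# The highest-scoring words are treated as the document's keywords.
top_words = sorted(scores, key=scores.get, reverse=True)[:3]
print(top_words)
```

Words that co-occur with many other words collect more score, which is why well-connected terms rise to the top of the ranking.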

Mahata’s innovation was to use phrase embeddings rather than word embeddings, which led to better accuracy. “You’d love to understand that ‘Barack Obama’ is one phrase, instead of ‘Barack’ as one word and ‘Obama’ as another,” explains Mahata. He proposed a change to how sentences are parsed, so that each sentence is represented as a combination of meaningful phrases and words, which are then used to train phrase embeddings. In his graph, each node is a phrase connected to other phrases, with each connection weighted by how frequently the two phrases co-occur and by their semantic relatedness. “This turned out to be very useful,” he says.
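One way to picture such edge weights is the sketch below. It assumes that semantic relatedness is measured as cosine similarity between phrase embeddings and that it is combined with the co-occurrence count by simple multiplication; both choices, along with the toy three-dimensional vectors, are assumptions made for illustration and may differ from the formula used in the paper.

```python
import math
from collections import Counter
from itertools import combinations


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def edge_weights(sentences, embeddings):
    """Weight each phrase pair by co-occurrence count times cosine similarity."""
    cooccur = Counter()
    for phrases in sentences:
        # Sorting gives every pair a single canonical key.
        for a, b in combinations(sorted(set(phrases)), 2):
            cooccur[(a, b)] += 1
    return {
        (a, b): count * cosine(embeddings[a], embeddings[b])
        for (a, b), count in cooccur.items()
    }


# Hypothetical phrase embeddings; real ones would be trained on a corpus.
embeddings = {
    "barack obama": [0.9, 0.1, 0.0],
    "white house": [0.8, 0.3, 0.1],
    "keyphrase extraction": [0.0, 0.2, 0.9],
}

# Each sentence is already parsed into phrases rather than single words.
sentences = [
    ["barack obama", "white house"],
    ["barack obama", "white house", "keyphrase extraction"],
]

weights = edge_weights(sentences, embeddings)
for pair, weight in sorted(weights.items()):
    print(pair, round(weight, 3))
```

Because “barack obama” is kept as a single node, it receives one embedding and one set of edges, rather than being split across two unrelated word nodes.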

Mahata also assigned a theme-specific score to each node of the graph, representing that node’s association with the overall theme of the document. The modified PageRank algorithm, which he refers to as “theme-weighted PageRank,” showed incremental improvement over previous ranking schemes built on the PageRank framework. By combining these techniques, he achieved results with his unsupervised method that are on par with those of methods requiring human supervision, which have historically shown superior performance.
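A rough way to see how theme scores can steer the ranking is to treat them as the bias (or “teleport”) distribution in a personalized PageRank over a weighted graph. The sketch below takes that interpretation as an assumption; the function name, the toy edge weights, and the theme scores are all hypothetical, and the paper's exact formulation may differ.

```python
def theme_weighted_pagerank(edges, theme_scores, damping=0.85, iterations=50):
    """Personalized PageRank on an undirected weighted graph.

    `edges` maps (phrase_a, phrase_b) to a weight; `theme_scores` maps each
    phrase to its (assumed, precomputed) association with the document theme.
    """
    neighbors = {node: {} for node in theme_scores}
    for (a, b), weight in edges.items():
        neighbors[a][b] = weight
        neighbors[b][a] = weight

    # Normalize theme scores so they form a probability distribution.
    total_theme = sum(theme_scores.values())
    bias = {n: s / total_theme for n, s in theme_scores.items()}
    out_weight = {n: sum(neighbors[n].values()) for n in neighbors}

    scores = dict(bias)
    for _ in range(iterations):
        scores = {
            n: (1 - damping) * bias[n]
            + damping
            * sum(scores[m] * w / out_weight[m] for m, w in neighbors[n].items())
            for n in neighbors
        }
    return scores


# A fully symmetric toy graph, so any ranking difference comes from the theme.
edges = {
    ("phrase embeddings", "keyphrase extraction"): 1.0,
    ("keyphrase extraction", "new orleans"): 1.0,
    ("phrase embeddings", "new orleans"): 1.0,
}
theme_scores = {
    "phrase embeddings": 0.9,
    "keyphrase extraction": 0.7,
    "new orleans": 0.1,
}

scores = theme_weighted_pagerank(edges, theme_scores)
ranked = sorted(scores, key=scores.get, reverse=True)
print(ranked)
```

In this toy graph every phrase has identical connections, so the final order is driven entirely by how strongly each phrase is associated with the theme.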

“There’s a need to push the envelope in unsupervised approaches to keyphrase extraction,” says Mahata. “We believe the answer lies in better representation of candidate keyphrases extracted from a document using word and phrase embeddings that can capture the main themes of the document and meaningful relationships between candidate keyphrases.”

Mahata is already applying the lessons from this research in his work on products that help companies screen the people and organizations they do business with, under a set of regulatory requirements referred to as Know Your Customer (or KYC). To comply with these rules, financial institutions may have to digest a large number of documents, from news articles to court cases, to carry out the due diligence required to determine whether a particular client is suitable for them.

“There is a need for summarization and keyword extraction with these documents in order to enable someone to understand what is going on with the person or organization of interest to them, especially when they may be associated with a crime, such as money laundering or fraud,” says Mahata. “We’re also looking at extending this strategy for summarization to other domains, such as news, in addition to studying the effects of training the phrase embeddings using other popular methods.”