Configuring uWSGI for Production Deployment

July 12, 2019

Engineering Manager Peter Sperl and Software Engineer Ben Green of Bloomberg Engineering’s Structured Products Applications group wrote the following article to offer some tips to other developers about avoiding known gotchas when configuring uWSGI to host services at scale — while still providing a base level of defensiveness and high reliability. It is a more detailed version of Peter’s talk “Configuring uWSGI for Production: The defaults are all wrong” delivered at EuroPython 2019.

Bloomberg’s Structured Products team is responsible for providing analytics, data, and other software tools to support the structured products industry, which includes financial securities backed by mortgages, student loans, auto loans, credit cards, and more. Two years ago, we began migrating our microservices from a proprietary service framework to a WSGI-compliant one.

We chose uWSGI as our host because of its performance and feature set. But, while powerful, uWSGI’s defaults are driven by backward compatibility and are not ideal for new deployments. Powerful features can be overlooked due to the sheer magnitude of its feature set and spotty documentation. As we’ve scaled up the number of services hosted by uWSGI over the last year, we’ve had to tweak our standard configuration. New services use this standard config to avoid any known gotchas and provide a base level of defensiveness and high reliability.

We strongly recommend that all uWSGI users read the official Things to Know doc.

Note: Unbit, the developer of uWSGI, has “decided to fix all of the bad defaults (especially for the Python plugin) in the 2.1 branch.” The 2.1 branch is not released yet (as of June 2019), but it may provide some respite from the issues presented in this article.

The basics

[uwsgi]
strict = true
master = true
enable-threads = true
vacuum = true                        ; Delete sockets during shutdown
single-interpreter = true
die-on-term = true                   ; Shutdown when receiving SIGTERM (default is respawn)
need-app = true
disable-logging = true
log-4xx = true
log-5xx = true

These options should be set by default — but aren’t. One or two of them might not suit your deployment, but it is best to start with them ‘on’. You can then remove them later should your particular situation benefit from their removal.

Let’s look at each one, what it does, and our argument for setting it ‘on’ by default.

strict Mode

strict = true

This option tells uWSGI to fail to start if any parameter in the configuration file isn’t explicitly understood by uWSGI. This feature is not enabled by default because of some other features described in the Things to Know doc:

You can easily add non-existent options to your config files (as placeholders, custom options, or app-related configuration items). This is a really handy feature, but can lead to headaches on typos. The strict mode (--strict) will disable this feature, and only valid uWSGI options are tolerated.

We feel it is best to enable this option by default, as the cost of a mistyped uWSGI configuration parameter in production outweighs the utility of placeholders, custom options, or app-related configuration items (which can be trivially supplied via other configuration files).
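To illustrate the failure mode strict mode guards against, here is a minimal sketch of a pre-deploy lint. It assumes a hand-maintained KNOWN_OPTIONS set, a hypothetical and deliberately incomplete subset of uWSGI's real option names, and uses Python's difflib to suggest the option that was probably intended. uWSGI's strict mode itself simply refuses to start; the suggestion step is our own addition.

```python
import difflib

# Hypothetical, incomplete subset of valid uWSGI option names (illustration only).
KNOWN_OPTIONS = {
    "strict", "master", "enable-threads", "vacuum", "single-interpreter",
    "die-on-term", "need-app", "disable-logging", "log-4xx", "log-5xx",
    "harakiri", "py-call-osafterfork", "max-requests",
}


def find_unknown_options(config_keys):
    """Map each key not in KNOWN_OPTIONS to its closest known option (or None)."""
    unknown = {}
    for key in config_keys:
        if key not in KNOWN_OPTIONS:
            matches = difflib.get_close_matches(key, KNOWN_OPTIONS, n=1)
            unknown[key] = matches[0] if matches else None
    return unknown


# Without strict mode, this transposed-letter typo would silently leave threads disabled.
print(find_unknown_options(["master", "enable-thraeds", "vacuum"]))
# → {'enable-thraeds': 'enable-threads'}
```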

master

master = true

The master uWSGI process is necessary to gracefully re-spawn and pre-fork workers, consolidate logs, and manage many other features (shared memory, cron jobs, worker timeouts…). Without this feature on, uWSGI is a mere shadow of its true self.

This option should always be set to ‘on’ unless you are using the more complex “emperor” system for multi-app deployments or are debugging specific behavior for which you want uWSGI to be limited.

enable-threads

enable-threads = true

uWSGI disables Python threads by default, as described in the Things to Know doc.

By default the Python plugin does not initialize the GIL. This means your app-generated threads will not run. If you need threads, remember to enable them with enable-threads. Running uWSGI in multithreading mode (with the threads options) will automatically enable threading support. This “strange” default behaviour is for performance reasons, no shame in that.

Leaving threads disabled might be the right choice for you. If it is, great! You’ll see a minor speed-up. But chances are high that you’ll be using a background thread for something, and without this parameter set, those threads won’t execute; some developer will be stuck in a weird place until they “discover” this option. It’s best to leave it ‘on’ by default and remove it on a case-by-case basis.
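For example, a service that refreshes reference data on a background thread, like the minimal sketch below, depends on this option: per the Things to Know doc, the thread will not run under uWSGI unless enable-threads (or the threads option) is set. The RefreshingCache class and its loader callable are hypothetical; under a plain Python interpreter the thread runs normally.

```python
import threading


class RefreshingCache:
    """Hypothetical cache that reloads its data on a daemon thread every `interval` seconds."""

    def __init__(self, loader, interval):
        self._loader = loader
        self._interval = interval
        self._lock = threading.Lock()
        self._data = loader()  # initial load happens in the calling thread
        self._stop = threading.Event()
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def _run(self):
        # Under uWSGI without enable-threads, this loop would never execute.
        while not self._stop.wait(self._interval):  # wait() returns False on timeout
            fresh = self._loader()
            with self._lock:
                self._data = fresh

    def get(self):
        with self._lock:
            return self._data

    def close(self):
        self._stop.set()
        self._thread.join()
```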

uWSGI also has plugins that integrate with other asynchronous solutions, such as eventlet, gevent, and asyncio (though these integrations are not ASGI-compliant).

vacuum

vacuum = true

This option will instruct uWSGI to clean up any temporary files or UNIX sockets it created, such as HTTP sockets, pidfiles, or admin FIFOs.

Leaving these files around can cause problems. For example, if a developer runs uWSGI as their own user, the files it leaves behind are owned by that user; if the production user doesn’t have permission to delete them, uWSGI may fail to function properly.

single-interpreter

single-interpreter = true

By default, uWSGI starts in multiple interpreter mode, which allows multiple services to be hosted in each worker process. Here is an old mailing list post regarding this feature:

Multiple interpreters are cool, but there are reports on some c extensions that do not cooperate well with them.

When multiple interpreters are enabled, uWSGI will change the whole ThreadState (an internal Python structure) at every request. It is not so slow, but with some kind of app/extensions that could be overkill.

Given the rise of microservices, we have no plans to ever host more than one service in a given worker process, and I doubt you do either. Disabling this feature appears to have no negative impact, while also reducing the odds of compatibility issues that may waste development time or cause production outages.

die-on-term

die-on-term = true

This feature is best described by the official Things to Know document.

Till uWSGI 2.1, by default, sending the SIGTERM signal to uWSGI means “brutally reload the stack” while the convention is to shut an application down on SIGTERM. To shutdown uWSGI, use SIGINT or SIGQUIT instead. If you absolutely can not live with uWSGI being so disrespectful towards SIGTERM, by all means, enable the die-on-term option. Fortunately, this bad choice has been fixed in uWSGI 2.1

You should enable this option because it makes uWSGI behave the way any sane developer would expect. Without it, kill (which sends SIGTERM by default) and any other tool that sends SIGTERM, such as some system monitoring tools, will fail to stop uWSGI and will instead trigger a reload, confounding the operator of said tools.
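Any tooling that stops uWSGI has to choose its signal based on this setting. Here is a minimal sketch, assuming you know the master's PID and whether die-on-term is set: it sends SIGTERM when die-on-term is enabled and otherwise falls back to SIGINT, one of the shutdown signals the Things to Know doc recommends. The stop_uwsgi helper is our own name, not part of uWSGI.

```python
import os
import signal


def stop_uwsgi(master_pid: int, die_on_term: bool) -> signal.Signals:
    """Send the signal that shuts uWSGI down (rather than reloading it) and return it."""
    # Before uWSGI 2.1, SIGTERM without die-on-term means "reload", not "exit".
    sig = signal.SIGTERM if die_on_term else signal.SIGINT
    os.kill(master_pid, sig)
    return sig
```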

need-app

need-app = true

This parameter prevents uWSGI from starting if it is unable to find or load your application module. Without this option, uWSGI will ignore any syntax and import errors thrown at startup and will start an empty shell that will return 500s for all requests. This is especially problematic because monitoring systems may observe that uWSGI started successfully and think the application is available to service requests when, in fact, it is not.

uWSGI continues to start without your application loaded because it thinks you may load an application dynamically later. This is the default behavior because the dynamic loading of apps used to be common. Here’s a GitHub comment from Unbit, the developer of uWSGI, about this behavior:

…because in 2008-2009 it was the only supported way to configure apps (the webserver instructed the application server about the app to load). If you try to go back to that time (where even the concept of proxying was not so widely approved) you will understand why it was a pretty obvious decision for software aimed at shared hosting.

Now things have changed, but there are still dozens of customers (paying customers) that use this kind of setup, and by default we do not change default unless after a looooong deprecation phase.
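Without need-app, a check that merely confirms the uWSGI process is running can report success while every request returns a 500. As a complementary safeguard, here is a minimal sketch assuming your monitoring polls an HTTP path: a WSGI app exposing a hypothetical /healthz route (make_app and is_healthy are our own names). An empty uWSGI shell cannot answer it with a 2xx, so the monitor sees a failure instead of a false positive.

```python
def make_app():
    """Hypothetical WSGI app factory with a /healthz route for monitors to poll."""

    def app(environ, start_response):
        if environ.get("PATH_INFO") == "/healthz":
            status, body = "200 OK", b"ok"
        else:
            status, body = "404 Not Found", b"not found"  # real routes would go here
        start_response(status, [("Content-Type", "text/plain"),
                                ("Content-Length", str(len(body)))])
        return [body]

    return app


def is_healthy(status_line: str) -> bool:
    """Treat anything other than a 2xx response from /healthz as unhealthy."""
    return status_line.split(" ", 1)[0].startswith("2")
```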

Logging

disable-logging = true

By default, uWSGI has rather verbose logging. It is reasonable to disable uWSGI’s standard logging, especially if your application emits concise and meaningful logs.

log-4xx = true
log-5xx = true

Proceed with caution: If you do choose to disable uWSGI’s standard log output, we recommend you use the log-4xx and log-5xx parameters to re-enable uWSGI’s built-in logging for responses with HTTP status codes of 4xx or 5xx. This will ensure that critical errors are always logged, something that is very difficult for an application logger to ensure in the face of unhandled exceptions, unexpected signals, and segfaults in native code.

Worker Management

Worker Recycling

Worker recycling can prevent issues that become apparent over time, such as memory leaks or unintended state. In some circumstances it can also improve performance, because newer processes have fresh memory space.

uWSGI provides multiple methods for recycling workers. Assuming your app is relatively quick to reload, all three of the methods below should be effectively harmless and provide protection against different failure scenarios.

max-requests = 1000                  ; Restart workers after this many requests
max-worker-lifetime = 3600           ; Restart workers after this many seconds
reload-on-rss = 2048                 ; Restart workers after this much resident memory
worker-reload-mercy = 60             ; How long to wait before forcefully killing workers

This configuration will restart a worker process after any of the following events:

- 1000 requests have been handled

- The worker’s resident memory has reached 2 GB (reload-on-rss is specified in megabytes)

- 1 hour has passed
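Conceptually, recycling is an “any threshold crossed” check. The function below is a simplified model for reasoning about these three settings, not uWSGI’s implementation; RecyclePolicy and should_recycle are our own names, and the exact boundary comparisons (>= versus >) are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RecyclePolicy:
    max_requests: int = 1000         # max-requests
    max_worker_lifetime: int = 3600  # max-worker-lifetime, seconds
    reload_on_rss: int = 2048        # reload-on-rss, megabytes


def should_recycle(policy: RecyclePolicy, requests_handled: int,
                   age_seconds: float, rss_bytes: int) -> bool:
    """Return True if any one of the three recycling thresholds has been reached."""
    rss_mb = rss_bytes / (1024 * 1024)
    return (requests_handled >= policy.max_requests
            or age_seconds >= policy.max_worker_lifetime
            or rss_mb > policy.reload_on_rss)
```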

Dynamic Worker Scaling (cheaper)

When uWSGI’s cheaper subsystem is enabled, the master process will spawn workers in response to traffic increases and gradually shut workers down as traffic subsides.

There are various algorithms available to determine how many workers should be available at any given moment. The busyness algorithm attempts to always have spare workers available, which is useful when anticipating unexpected traffic surges.

cheaper-algo = busyness
processes = 500                      ; Maximum number of workers allowed
cheaper = 8                          ; Minimum number of workers allowed
cheaper-initial = 16                 ; Workers created at startup
cheaper-overload = 1                 ; Length of a cycle in seconds
cheaper-step = 16                    ; How many workers to spawn at a time
cheaper-busyness-multiplier = 30     ; How many cycles to wait before killing workers
cheaper-busyness-min = 20            ; Below this threshold, kill workers (if stable for multiplier cycles)
cheaper-busyness-max = 70            ; Above this threshold, spawn new workers
cheaper-busyness-backlog-alert = 16  ; Spawn emergency workers if more than this many requests are waiting in the queue
cheaper-busyness-backlog-step = 2    ; How many emergency workers to create if there are too many requests in the queue
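To build intuition for how these knobs interact, here is a deliberately simplified, single-cycle model of the busyness algorithm. It is not a port of uWSGI’s C implementation, and its rules are assumptions: one decision per cheaper-overload cycle, scaling up adds cheaper-step workers, a sustained quiet period removes one worker at a time, and a backlog alert adds cheaper-busyness-backlog-step workers, all clamped between cheaper and processes. BusynessConfig and next_worker_count are our own names.

```python
from dataclasses import dataclass


@dataclass
class BusynessConfig:
    processes: int = 500     # maximum workers
    cheaper: int = 8         # minimum workers
    step: int = 16           # cheaper-step
    multiplier: int = 30     # cheaper-busyness-multiplier
    busy_min: float = 20.0   # cheaper-busyness-min, percent
    busy_max: float = 70.0   # cheaper-busyness-max, percent
    backlog_alert: int = 16  # cheaper-busyness-backlog-alert
    backlog_step: int = 2    # cheaper-busyness-backlog-step


def next_worker_count(cfg, workers, busyness, backlog, idle_cycles):
    """Return (new_worker_count, new_idle_cycles) after one cycle of the simplified model."""
    if backlog > cfg.backlog_alert:
        target, idle_cycles = workers + cfg.backlog_step, 0  # emergency workers
    elif busyness > cfg.busy_max:
        target, idle_cycles = workers + cfg.step, 0          # scale up
    elif busyness < cfg.busy_min:
        idle_cycles += 1
        if idle_cycles >= cfg.multiplier:                    # quiet for long enough
            target, idle_cycles = workers - 1, 0
        else:
            target = workers
    else:
        target, idle_cycles = workers, 0                     # within the comfort band
    return max(cfg.cheaper, min(cfg.processes, target)), idle_cycles
```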

Hard Timeouts (harakiri)

harakiri = 60 ; Forcefully kill workers after 60 seconds

The --harakiri option will SIGKILL workers after a specified number of seconds. The entire worker process will need to be restarted, so consider other methods of timing out functions (threading, signal) if your service is very slow to spin up new workers or maintains a lot of state.

Without this feature, a stuck process could stay stuck forever. If the issue causing the hung process is common enough, you could arrive at a situation where no workers are available and your service becomes unavailable.

Harakiri can be set dynamically in Python code via the uwsgidecorators module, but this is in addition to, not instead of, the master --harakiri option, meaning the lesser of the two will be the effective timeout.
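As a minimal sketch of the per-function form: uwsgidecorators provides a harakiri(seconds) decorator, shown here with a no-op fallback so the module still imports in a plain interpreter. The generate_report function and its 30-second budget are hypothetical.

```python
try:
    from uwsgidecorators import harakiri
except ImportError:  # not running under uWSGI
    def harakiri(seconds):
        """No-op stand-in so the module imports (and can be tested) outside uWSGI."""
        def decorator(func):
            return func
        return decorator


@harakiri(30)  # hypothetical: a tighter limit than the master's harakiri = 60
def generate_report(rows):
    """Hypothetical slow function that should never run longer than 30 seconds."""
    return sum(rows)
```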

Allowing Workers to Receive Signals (py-call-osafterfork)

py-call-osafterfork = true

By default, workers are not able to receive OS signals. This flag allows them to receive signals such as the SIGALRM scheduled by signal.alarm, which is necessary if you intend to use the signal module in a worker process.

Due to the relatively drastic nature of killing and restarting workers, we tend to use signal.alarm(seconds) to attempt to gracefully time out requests before resorting to harakiri.
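A minimal sketch of that soft-timeout pattern follows, assuming py-call-osafterfork is enabled so the worker actually receives SIGALRM. RequestTimeout and alarm_timeout are our own names. Python only runs signal handlers in the main thread, and signal.alarm has one-second resolution, so this suits a coarse budget set somewhat below the harakiri value rather than fine-grained deadlines.

```python
import signal
from contextlib import contextmanager


class RequestTimeout(Exception):
    """Raised when a request exceeds its soft time budget."""


def _on_alarm(signum, frame):
    raise RequestTimeout(f"request exceeded its time budget (signal {signum})")


@contextmanager
def alarm_timeout(seconds: int):
    """Raise RequestTimeout in the main thread if the block runs longer than `seconds`."""
    previous = signal.signal(signal.SIGALRM, _on_alarm)
    signal.alarm(seconds)
    try:
        yield
    finally:
        signal.alarm(0)  # cancel any pending alarm
        # getsignal/signal return None for handlers not installed from Python
        signal.signal(signal.SIGALRM, previous if previous is not None else signal.SIG_DFL)


# Usage: keep the soft budget below harakiri = 60 so the graceful path wins.
# with alarm_timeout(50):
#     handle_request()  # hypothetical request handler
```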

This feature should be enabled by default because we expect processes to respond to signals that are sent to them. Without enabling this feature, the first developer to attempt to trap signals in a uWSGI-hosted service is going to be confused until they find this option. That could cause a delay of anywhere from 0 minutes to days, depending on how good someone is at Googling or asking coworkers for their opinions.

Process Labeling

By default, the process names of uWSGI workers are simply the command used to start them.

bgreen89 180976 180847 0 17:20 pts/27 00:00:00 uwsgi3.6 --module my_svc:service --http11-socket :29292 --ini etc/my_svc_uwsgi.ini --need-app
bgreen89 180977 180847 0 17:20 pts/27 00:00:00 uwsgi3.6 --module my_svc:service --http11-socket :29292 --ini etc/my_svc_uwsgi.ini --need-app
bgreen89 180978 180847 0 17:20 pts/27 00:00:00 uwsgi3.6 --module my_svc:service --http11-socket :29292 --ini etc/my_svc_uwsgi.ini --need-app

uWSGI provides some functionality which can help identify the workers:

auto-procname = true

bgreen89 188116 188115 4 17:21 pts/27 00:00:00 uWSGI master
bgreen89 188191 188116 0 17:21 pts/27 00:00:00 uWSGI worker 1
bgreen89 188192 188116 0 17:21 pts/27 00:00:00 uWSGI worker 2
bgreen89 188193 188116 0 17:21 pts/27 00:00:00 uWSGI worker 3

This is helpful, but it becomes ambiguous as soon as more than one uWSGI instance runs on the same machine. Adding a prefix fixes that:

procname-prefix = "mysvc " # note the space

Now we can clearly identify which processes belong to which service.

bgreen89 41120 138777 44 17:32 pts/27 00:00:00 mysvc uWSGI master
bgreen89 41172 41120 0 17:32 pts/27 00:00:00 mysvc uWSGI worker 1
bgreen89 41173 41120 0 17:32 pts/27 00:00:00 mysvc uWSGI worker 2
bgreen89 41174 41120 0 17:32 pts/27 00:00:00 mysvc uWSGI worker 3

It can be helpful to identify what each worker is doing without having to look through logs. uWSGI exposes a setprocname function via its Python API, which allows the service to set its process name dynamically and add application-specific context about each worker. This additional information can be invaluable during an outage, when someone is quickly trying to identify the troublesome resource.

bgreen89 41120 138777 44 17:32 pts/27 00:00:00 mysvc uWSGI master
bgreen89 41172 41120 0 17:32 pts/27 00:00:00 mysvc username /path/to/url uWSGI worker 1
bgreen89 41173 41120 0 17:32 pts/27 00:00:00 mysvc johndoe /index.html uWSGI worker 2
bgreen89 41174 41120 0 17:32 pts/27 00:00:00 mysvc janedoe /assets/data uWSGI worker 3
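A minimal sketch of that kind of labeling, written as WSGI middleware. It assumes the caller’s identity is available as REMOTE_USER in the WSGI environ (adjust for your authentication scheme) and calls uwsgi.setprocname and uwsgi.worker_id only when running under uWSGI; build_procname and ProcnameMiddleware are hypothetical names. The label persists until the worker’s next request, so an idle worker shows the last request it served.

```python
try:
    import uwsgi
except ImportError:  # plain interpreter: labeling becomes a no-op
    uwsgi = None


def build_procname(prefix: str, user: str, path: str, worker_id: int) -> str:
    """Format a process title like 'mysvc johndoe /index.html uWSGI worker 2'."""
    return f"{prefix}{user} {path} uWSGI worker {worker_id}"


class ProcnameMiddleware:
    """Relabel the current worker with who and what it is serving before each request."""

    def __init__(self, app, prefix="mysvc "):
        self.app = app
        self.prefix = prefix

    def __call__(self, environ, start_response):
        if uwsgi is not None:
            name = build_procname(self.prefix,
                                  environ.get("REMOTE_USER", "-"),
                                  environ.get("PATH_INFO", "/"),
                                  uwsgi.worker_id())
            uwsgi.setprocname(name)
        return self.app(environ, start_response)
```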

The complete config

[uwsgi]
strict = true
master = true
enable-threads = true
vacuum = true                        ; Delete sockets during shutdown
single-interpreter = true
die-on-term = true                   ; Shutdown when receiving SIGTERM (default is respawn)
need-app = true

disable-logging = true               ; Disable built-in logging
log-4xx = true                       ; but log 4xx's anyway
log-5xx = true                       ; and 5xx's

harakiri = 60                        ; forcefully kill workers after 60 seconds
py-call-osafterfork = true           ; allow workers to trap signals

max-requests = 1000                  ; Restart workers after this many requests
max-worker-lifetime = 3600           ; Restart workers after this many seconds
reload-on-rss = 2048                 ; Restart workers after this much resident memory
worker-reload-mercy = 60             ; How long to wait before forcefully killing workers

cheaper-algo = busyness
processes = 128                      ; Maximum number of workers allowed
cheaper = 8                          ; Minimum number of workers allowed
cheaper-initial = 16                 ; Workers created at startup
cheaper-overload = 1                 ; Length of a cycle in seconds
cheaper-step = 16                    ; How many workers to spawn at a time

cheaper-busyness-multiplier = 30     ; How many cycles to wait before killing workers
cheaper-busyness-min = 20            ; Below this threshold, kill workers (if stable for multiplier cycles)
cheaper-busyness-max = 70            ; Above this threshold, spawn new workers
cheaper-busyness-backlog-alert = 16  ; Spawn emergency workers if more than this many requests are waiting in the queue
cheaper-busyness-backlog-step = 2    ; How many emergency workers to create if there are too many requests in the queue
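Several of these values only make sense relative to one another, so it can pay to check them in CI before deploying. The sketch below parses the [uwsgi] section with Python’s configparser (interpolation disabled, because uWSGI’s own %-style magic variables would otherwise confuse it; strict=False, because uWSGI ini files may repeat keys) and applies a few rules of our own choosing: the basic safety flags are on, cheaper is below processes, cheaper-initial sits between them, and the busyness thresholds are ordered. These rules and the check_uwsgi_ini name are suggestions, not checks uWSGI itself documents.

```python
import configparser
from typing import List

REQUIRED_FLAGS = ("strict", "master", "need-app", "die-on-term")


def check_uwsgi_ini(text: str) -> List[str]:
    """Return a list of problems found in the [uwsgi] section (empty means OK)."""
    parser = configparser.ConfigParser(
        inline_comment_prefixes=(";",), strict=False, interpolation=None)
    parser.read_string(text)
    cfg = parser["uwsgi"]
    problems = []
    for flag in REQUIRED_FLAGS:
        if not cfg.getboolean(flag, fallback=False):
            problems.append(f"{flag} should be true")
    if "cheaper" in cfg:
        low = cfg.getint("cheaper")
        high = cfg.getint("processes", fallback=None)
        if high is None:
            problems.append("processes must be set when cheaper is used")
        else:
            initial = cfg.getint("cheaper-initial", fallback=low)
            if not low < high:
                problems.append("cheaper must be lower than processes")
            if not low <= initial <= high:
                problems.append("cheaper-initial must be between cheaper and processes")
        busy_min = cfg.getint("cheaper-busyness-min", fallback=0)
        busy_max = cfg.getint("cheaper-busyness-max", fallback=100)
        if busy_min >= busy_max:
            problems.append("cheaper-busyness-min must be below cheaper-busyness-max")
    return problems
```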

Additional uWSGI features

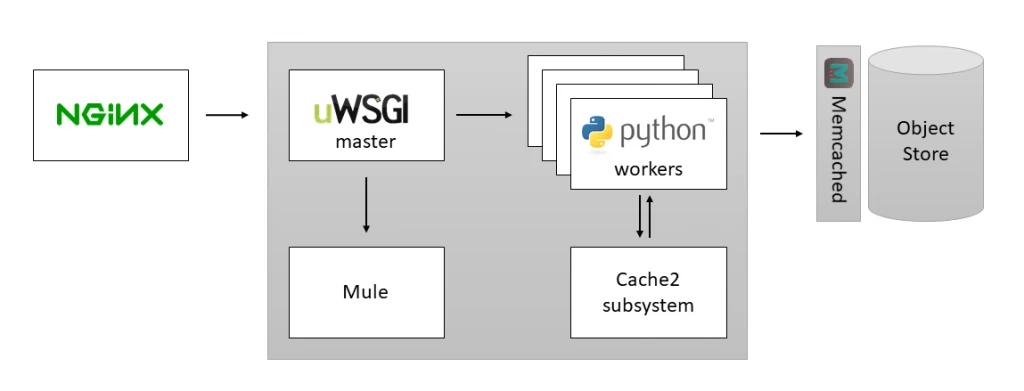

uWSGI provides numerous other features that solve common problems faced by service developers. While using these features locks your application into using uWSGI as your host, they can also reduce total system complexity when compared to external solutions.

Cron/Timer

The uWSGI master process can manage the periodic execution of arbitrary code. This is a helpful alternative to manually managing another process.

- Timers execute code regularly at an interval beginning on service startup.

- Crons execute code on a specified minute, hour, day, etc.

- A unique version of these functions is available to ensure long-running timers/crons do not start until the previous instance has finished.

import uwsgi

from . import do_some_work


def periodic_task(signal: int):
    print(f"Received signal {signal}")
    do_some_work()


# route uWSGI signal 99 to periodic_task, run on the first available worker
uwsgi.register_signal(99, "worker", periodic_task)

# raise signal 99 every 20 minutes
uwsgi.add_timer(99, 1200)

# or: raise signal 99 on the 20th minute of every hour, every day
# minute, hour, day, month, weekday
uwsgi.add_cron(99, 20, -1, -1, -1, -1)

These functions are also available as decorators from the uwsgidecorators module:

import uwsgidecorators


@uwsgidecorators.timer(1200)
def periodic_task(signal: int):
    print(f"Received signal {signal}")
    do_some_work()

Note that the uwsgi and uwsgidecorators modules are injected into the runtime when running your service with uWSGI. These imports will fail in an ordinary Python interpreter, so you may need to write defensive code:

try:
    import uwsgi
    import uwsgidecorators
except ImportError:
    UWSGI_ENABLED = False
    uwsgi = uwsgidecorators = None
else:
    UWSGI_ENABLED = True

if UWSGI_ENABLED:
    pass  # do uWSGI-only logic here

Also note that this module does not support one-shot timers; its timers are repeating events. Refer to the signal module for one-shot timers.

Locks

The uWSGI Python module exports lock and unlock functions (unfortunately without a context manager) to synchronize critical sections between workers.

import uwsgi


def critical_code():
    uwsgi.lock()
    try:
        do_some_important_stuff()
    finally:
        uwsgi.unlock()  # release the lock even if the critical section raises

Cache system

The uWSGI master process can provide an in-memory cache to share between your workers which is capable of storing simple key-value byte strings. We’ve used this to smooth out bursts of traffic across clusters by tracking a rolling window of requests based on elements from the decoded request body and asking an upstream reverse proxy to try a different host if the current host is swamped with requests.

To create a cache whose keys can be up to 20 bytes and whose values can be up to 8 bytes:

--cache2 name=mycache,items=150000,blocksize=8,keysize=20

Be careful: Keys or values that are too big for the cache will silently fail to insert.

Example Python API usage:

import uwsgi  # again, only works in the context of an active uwsgi process

uwsgi.cache_set(key, value, timeout)
uwsgi.cache_get(key)
uwsgi.cache_inc(key, amount)  # atomic increments!
uwsgi.cache_dec(key, amount)
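The rolling-window idea described above can be sketched as one-second buckets whose counters are incremented. This is an assumption-laden illustration: the key format, the bucket width, and the RollingCounter name are ours, and a plain dict stands in for the cache so the sketch runs outside uWSGI. Under uWSGI you would swap the dict operations for the shared cache calls above, keep bucket keys within the cache’s keysize, and rely on expiry to age out old buckets, which the dict stand-in never does.

```python
import time
from typing import Dict, Optional


class RollingCounter:
    """Count hits per key over the last `window` seconds, in one-second buckets.

    A plain dict stands in for the shared uWSGI cache so the sketch runs anywhere.
    """

    def __init__(self, window: int = 10):
        self.window = window
        self._buckets: Dict[str, int] = {}

    @staticmethod
    def _bucket_key(key: str, second: int) -> str:
        # e.g. "acct42:1700000000" (17 bytes); in the real cache, oversized keys silently fail
        return f"{key}:{second}"

    def hit(self, key: str, now: Optional[float] = None) -> None:
        second = int(time.time() if now is None else now)
        bucket = self._bucket_key(key, second)
        self._buckets[bucket] = self._buckets.get(bucket, 0) + 1  # cache_inc under uWSGI

    def count(self, key: str, now: Optional[float] = None) -> int:
        second = int(time.time() if now is None else now)
        return sum(self._buckets.get(self._bucket_key(key, s), 0)
                   for s in range(second - self.window + 1, second + 1))
```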

Mules

uWSGI mules are extra processes designated to handle tasks asynchronously from the workers serving requests.

You can start one with --mule and send tasks to it in your Python code:

from uwsgidecorators import timer


@timer(60, target="mule")  # execute this function every minute on an available mule
def do_some_work(signal: int) -> None:
    pass

A mstimer decorator also exists for running timers at millisecond granularity.

To designate a function within foo.py to be run continuously by a mule:

import uwsgi


def loop():
    while True:
        msg = uwsgi.mule_get_msg()  # blocks until a uwsgi worker sends a message
        print(f"Got a message: {msg}")


if __name__ == '__main__':
    loop()

Then add --mule=foo.py to your uWSGI start command.

You can send messages to mules with the uwsgi.mule_msg API. Note that messages must be bytes, not str.
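Because mule messages are raw bytes, structured tasks need an encoding. A minimal sketch, assuming JSON is acceptable for your payloads; encode_task, decode_task, and send_task are our own helper names, and send_task only calls uwsgi.mule_msg when running under uWSGI. On the mule side, decode_task would be applied to each message returned by uwsgi.mule_get_msg().

```python
import json

try:
    import uwsgi
except ImportError:  # plain interpreter
    uwsgi = None


def encode_task(name: str, **kwargs) -> bytes:
    """Serialize a task as UTF-8 JSON bytes, since mule messages must be bytes."""
    return json.dumps({"task": name, "args": kwargs}).encode("utf-8")


def decode_task(msg: bytes) -> dict:
    """Inverse of encode_task, for use inside the mule's receive loop."""
    return json.loads(msg.decode("utf-8"))


def send_task(name: str, **kwargs) -> bytes:
    """Encode a task and, under uWSGI, hand it to the mules; returns the encoded bytes."""
    msg = encode_task(name, **kwargs)
    if uwsgi is not None:
        uwsgi.mule_msg(msg)
    return msg
```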

Conclusion

uWSGI is in a unique position in terms of the wealth of features it provides, but also the unexpectedness of its defaults. uWSGI 2.1 might provide some respite, but until then, it has the interesting property of changing the behavior of your code out-of-the-box. Threads are disabled, signals are ignored or handled outside of specification, some C modules may not work, and your service can fail to load, but still start. Discovering these behavioral changes will waste developer time at best, and cause production outages at worst. Configuring uWSGI to avoid these issues is the responsible thing to do.

Once the above concerns have been controlled for, uWSGI’s impressive feature set can then be leveraged to build applications in a reliable and efficient way. Mules, timers, and its cache and logging subsystems provide functionality inside your service that would otherwise require additional external components. Leveraging the solutions provided by uWSGI allows developers to deploy these components in an atomic package, simplifying deployment and reducing total system complexity.