Bloomberg’s AI Researchers & Engineers Publish 3 Papers at SIGIR & ICTIR 2021

July 12, 2021

During the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR 2021) and the co-located 7th ACM SIGIR International Conference on the Theory of Information Retrieval (ICTIR 2021) this week, researchers and engineers from Bloomberg’s AI Group are showcasing their expertise in Information Retrieval (IR) by publishing 3 papers.

In addition, Bloomberg AI Research Scientist Shuo Zhang is one of the organizers of the Workshop on Simulation for Information Retrieval Evaluation (Sim4IR) on July 15, 2021, which aims to give researchers and practitioners a forum to develop simulation methodology and to promote the wider use of simulation for evaluation.

AI Research Scientist and Bloomberg Knowledge Graph Team Lead Ridho Reinanda will also be hosting a student session during the SIGIR Symposium on IR in Practice (SIRIP), formerly known as the SIGIR Industry Track, on July 14-15, 2021.

In these papers, the authors and their collaborators (including Professor Maarten de Rijke of the University of Amsterdam, a past recipient of the Bloomberg Data Science Research Grant) present contributions to fundamental information retrieval problems and to applications of NLP technology focused on narrative creation, web table retrieval, and the evaluation of task-oriented dialogue systems.

We asked the authors to summarize their research and explain why the results were notable in advancing the state of the art in the field of information retrieval:

Sunday, July 11, 2021

ICTIR 2021

Session 1C – Content Analysis (16:30 CEST / 10:30 AM EDT)

News Article Retrieval in Context for Event-centric Narrative Creation

Nikos Voskarides (Amazon/University of Amsterdam), Sabrina Sauer (University of Groningen), Maarten de Rijke (University of Amsterdam), Edgar Meij (Bloomberg)

Please summarize your research.

Edgar: We observe that journalists increasingly use automation to find relevant content when writing articles, especially about real-world events. Such content may come from social media, political speeches and other transcripts, or other news sources. In this paper, we propose a method that, given a current event and a partially written narrative about it, effectively retrieves relevant earlier news stories. The method combines several rankers: one that promotes recent content, a term-based one, and one derived from a transformer-based language model that promotes semantically similar matches.

How will this research help advance the state of the art in the field of information retrieval?

Because the task is new, no benchmarks exist for it. We therefore define a procedure to simulate incomplete narratives and relevant articles for an event, based on historical events and news stories. We find that state-of-the-art lexical and semantic rankers are not sufficient for this task, and we show that combining them with a ranker that orders articles reverse-chronologically attains the best performance.
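The post does not say how the individual rankers are combined. Purely as an illustration, the sketch below fuses a recency ranker, a toy term-overlap ranker (a stand-in for a real lexical ranker such as BM25), and a hypothetical precomputed semantic ranking using reciprocal rank fusion (RRF), a standard unsupervised fusion technique swapped in here as an assumption. The article IDs, dates, texts, and the constant `k = 60` are all made up for the example.

```python
from datetime import date

# Hypothetical article record: (article_id, publication_date, text).
# NOTE: RRF is an illustrative choice, not necessarily the paper's method.
Article = tuple[str, date, str]


def recency_ranking(articles: list[Article]) -> list[str]:
    """Rank article IDs in reverse chronological order (newest first)."""
    return [a[0] for a in sorted(articles, key=lambda a: a[1], reverse=True)]


def term_overlap_ranking(articles: list[Article], narrative: str) -> list[str]:
    """Toy term-based ranker: count narrative terms that occur in each article.

    A stand-in for a real lexical ranker such as BM25.
    """
    query_terms = set(narrative.lower().split())

    def overlap(a: Article) -> int:
        return len(query_terms & set(a[2].lower().split()))

    # sorted() is stable, so ties keep their input order.
    return [a[0] for a in sorted(articles, key=overlap, reverse=True)]


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse rankings: each ID scores the sum of 1 / (k + rank) over all rankings."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda d: scores[d], reverse=True)


# Hypothetical candidate articles for a partially written storm narrative.
articles = [
    ("a1", date(2019, 3, 1), "storm floods coastal towns"),
    ("a2", date(2021, 6, 5), "election results announced"),
    ("a3", date(2020, 9, 9), "storm warning issued for coastal region"),
]
narrative = "coastal storm damage"
# Placeholder for the output of a transformer-based semantic ranker.
semantic_ranking = ["a3", "a1", "a2"]

fused = reciprocal_rank_fusion([
    recency_ranking(articles),
    term_overlap_ranking(articles, narrative),
    semantic_ranking,
])
print(fused)  # ['a3', 'a1', 'a2']
```

Because RRF uses only each ranker's ordering, it avoids having to put dates, term counts, and neural similarity scores on a common scale. A learned combination would be an alternative when training labels are available.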

Wednesday, July 14, 2021

SIGIR 2021

Poster Session 3 (12:00-1:00 PM EDT and 9:00-10:00 PM EDT)

Simulating User Satisfaction for the Evaluation of Task-oriented Dialogue Systems

Weiwei Sun* (Shandong University), Shuo Zhang* (Bloomberg), Krisztian Balog (University of Stavanger), Zhaochun Ren (Shandong University), Pengjie Ren (Shandong University), Zhumin Chen (Shandong University) and Maarten de Rijke (University of Amsterdam).

(* equal contributions)

Please summarize your research.

Shuo: The primary goal of this research is to build human-like simulators for evaluating task-oriented dialogue systems, which aim to help users accomplish a particular task, like making a hotel reservation or booking a flight. Prior user simulators can only predict the next user action. We aim to build a more human-like user simulator that can also predict user satisfaction based on previous agent replies.

To overcome the lack of annotated data for building this kind of user simulator, we constructed a user satisfaction annotation dataset of 6,800 dialogues sampled from multiple domains: real-world e-commerce dialogues, task-oriented dialogues from Wizard-of-Oz experiments (human-human conversations), and movie recommendation dialogues. Every user utterance in these dialogues, as well as each dialogue as a whole, is labeled on a 5-level satisfaction scale.

Why is this research notable? How will it help advance the state of the art in the field of information retrieval?

User simulation is one of the most widely used tools for offline training and evaluation of dialogue systems. Unlike user simulators that are primarily used to train dialogue agents, we investigated whether a human-like user simulator could be built for the evaluation process, which aims to automatically measure the quality of a given dialogue system.

What counts as human-like is subjective, but user sentiment, emotion, and satisfaction are undoubtedly important aspects of it. For example, user satisfaction changes over the course of a dialogue, and low satisfaction (e.g., because the agent fails to understand the user’s intent) may lead the user to end the conversation.

Our work simulates user satisfaction to help evaluate task-oriented dialogue systems. User simulators built on our dataset are less expensive than human evaluation for estimating satisfaction, and more scalable than test collections. The resource can also be used in other ways, such as in user studies or to predict when a conversation should be handed off from a bot to a human because the bot keeps giving unsatisfactory replies.

Poster Session 3 (12:00-1:00 PM EDT and 9:00-10:00 PM EDT)

WTR: A Test Collection for Web Table Retrieval

Zhiyu Chen (Lehigh University), Shuo Zhang (Bloomberg), Brian D. Davison (Lehigh University)

Please summarize your research.

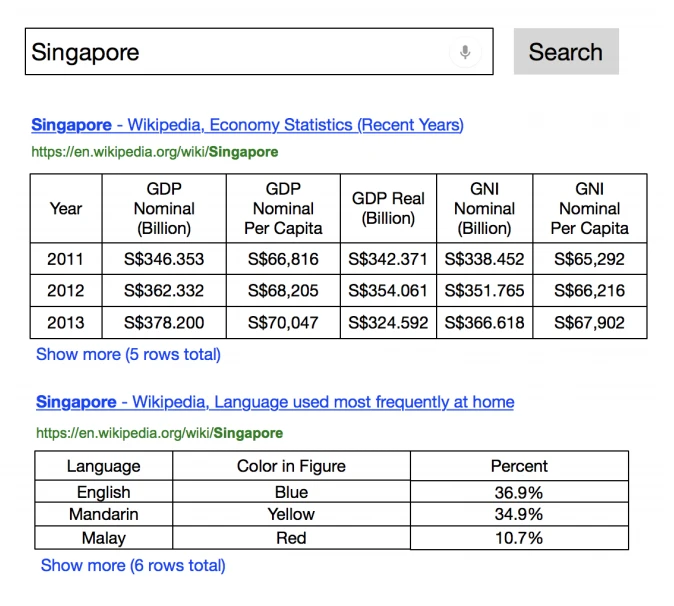

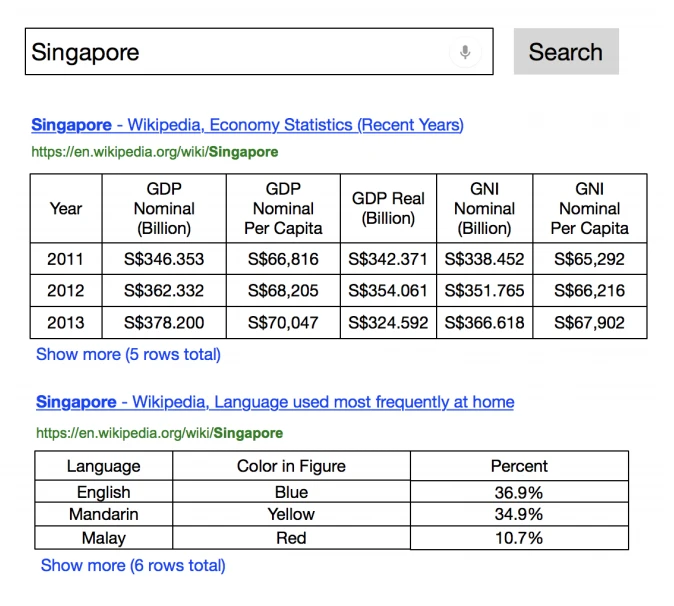

Shuo: Table retrieval, an important branch of information retrieval research, aims to return a ranked list of tables from a collection in response to a natural language or keyword query (see Figure 1 for an example). The area has gained increasing research attention in recent years, but as an emerging field it still needs new test collections. Because web tables come in many different formats, there has been no proper benchmark for retrieving them, so we built a test collection for the task of web table retrieval, based on the large-scale Web Table Corpora extracted from the Common Crawl.

Why is this resource notable? How will it help advance the state of the art in the field of information retrieval?

The first widely used ad hoc table retrieval benchmark, WikiTables, was created by me and my Ph.D. supervisor, Professor Krisztian Balog (cf. Ad Hoc Table Retrieval using Semantic Similarity). Many researchers have since evaluated their methods on this benchmark.

WikiTables is based primarily on Wikipedia tables. However, arbitrary web tables can be “dirtier” than Wikipedia tables and thus pose extra challenges for this task. It is unclear how state-of-the-art table retrieval models perform on such web tables. This research fills that gap and will help answer this question. Future research on table retrieval stands to benefit from this new benchmark in many ways (e.g., by more fairly estimating how well models generalize).

Apart from additional information such as table types, a web table usually comes with rich context information, such as the page title and surrounding paragraphs. We provide relevance judgments not only for query-table pairs but also for each table’s context with respect to the query, which previous test collections ignore. To facilitate future research with this benchmark, we describe how the dataset is pre-processed and report baseline results for both traditional and recently proposed table retrieval methods. Our experimental results show that making proper use of the context labels can benefit existing table retrieval methods.
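To make the role of context concrete, here is a toy sketch assuming a hypothetical `WebTable` record that stores a table’s caption, headers, and rows alongside its page title and surrounding text. It scores a table by the fraction of query terms matched in the table itself and in its context, mixed by a hypothetical `context_weight` parameter. This is not the WTR data format or any baseline from the paper; it only illustrates how context can change a ranking.

```python
from dataclasses import dataclass


@dataclass
class WebTable:
    """Hypothetical web-table record: table content plus page context."""
    table_id: str
    caption: str
    headers: list[str]
    rows: list[list[str]]
    page_title: str = ""
    surrounding_text: str = ""


def terms(text: str) -> set[str]:
    """Lowercased whitespace tokens (a deliberately naive tokenizer)."""
    return set(text.lower().split())


def table_terms(t: WebTable) -> set[str]:
    """Terms from the table itself: caption, headers, and cell values."""
    cells = " ".join(" ".join(row) for row in t.rows)
    return terms(" ".join([t.caption, " ".join(t.headers), cells]))


def context_terms(t: WebTable) -> set[str]:
    """Terms from the page context: title and surrounding paragraphs."""
    return terms(t.page_title + " " + t.surrounding_text)


def score(query: str, t: WebTable, context_weight: float = 0.5) -> float:
    """Mix the fraction of query terms matched in the table and in its context."""
    q = terms(query)
    if not q:
        return 0.0
    table_match = len(q & table_terms(t)) / len(q)
    context_match = len(q & context_terms(t)) / len(q)
    return (1 - context_weight) * table_match + context_weight * context_match


def rank(query: str, tables: list[WebTable], context_weight: float = 0.5) -> list[str]:
    """Return table IDs sorted by descending score (stable for ties)."""
    ordered = sorted(tables, key=lambda t: score(query, t, context_weight), reverse=True)
    return [t.table_id for t in ordered]


# Hypothetical example tables.
tables = [
    WebTable("t1", "", ["Year", "Value"], [["2019", "3.1"], ["2020", "2.4"]],
             page_title="Germany GDP growth",
             surrounding_text="Annual GDP growth rates"),
    WebTable("t2", "Germany population by city", ["City", "Population"],
             [["Berlin", "3.6M"]], page_title="Largest cities"),
]
query = "germany gdp growth"
print(rank(query, tables, context_weight=0.0))  # ['t2', 't1']
print(rank(query, tables, context_weight=0.5))  # ['t1', 't2']
```

With `context_weight=0.0` only the table content counts, so `t2` ranks first because its caption mentions Germany. Giving weight to context lets `t1`, whose page title and surrounding text describe GDP growth, move to the top.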