Bloomberg Researchers Present at the 2nd KDD Workshop on Anomaly Detection in Finance

August 06, 2019

Within the finance industry, detecting rare events, or anomalies, in data is key to solving business problems that can carry high monetary costs, such as financial crime detection and risk modeling. On Monday, August 5, 2019, Bloomberg researchers showcased their research on calibrating anomaly detectors and on textual outlier detection in financial reporting at the 2nd KDD Workshop on Anomaly Detection in Finance. The workshop is co-located with the 25th ACM SIGKDD Conference on Knowledge Discovery and Data Mining (KDD 2019), held this week in Anchorage, Alaska.

Through their work, Bloomberg researchers and their collaborators have highlighted techniques that provide more refined data analysis within the finance industry. Adrian Benton, Senior Research Scientist in Bloomberg’s AI Group, published “Calibration for Anomaly Detection,” while Machine Learning Engineer Leslie Barrett was the lead author on a paper titled “Textual Outlier Detection and Anomalies in Financial Reporting,” which summarizes research by a team of Bloomberg Law engineers. This paper was presented by Sidney Fletcher, one of the authors, during the conference.

“Bloomberg has ongoing investments that foster collaborations between academia and institutions, and this workshop provides an opportunity for participants to continue working together to develop solutions that will address existing business problems within finance,” explained Anju Kambadur, head of Bloomberg’s AI Group, who helped organize the Workshop on Anomaly Detection in Finance.

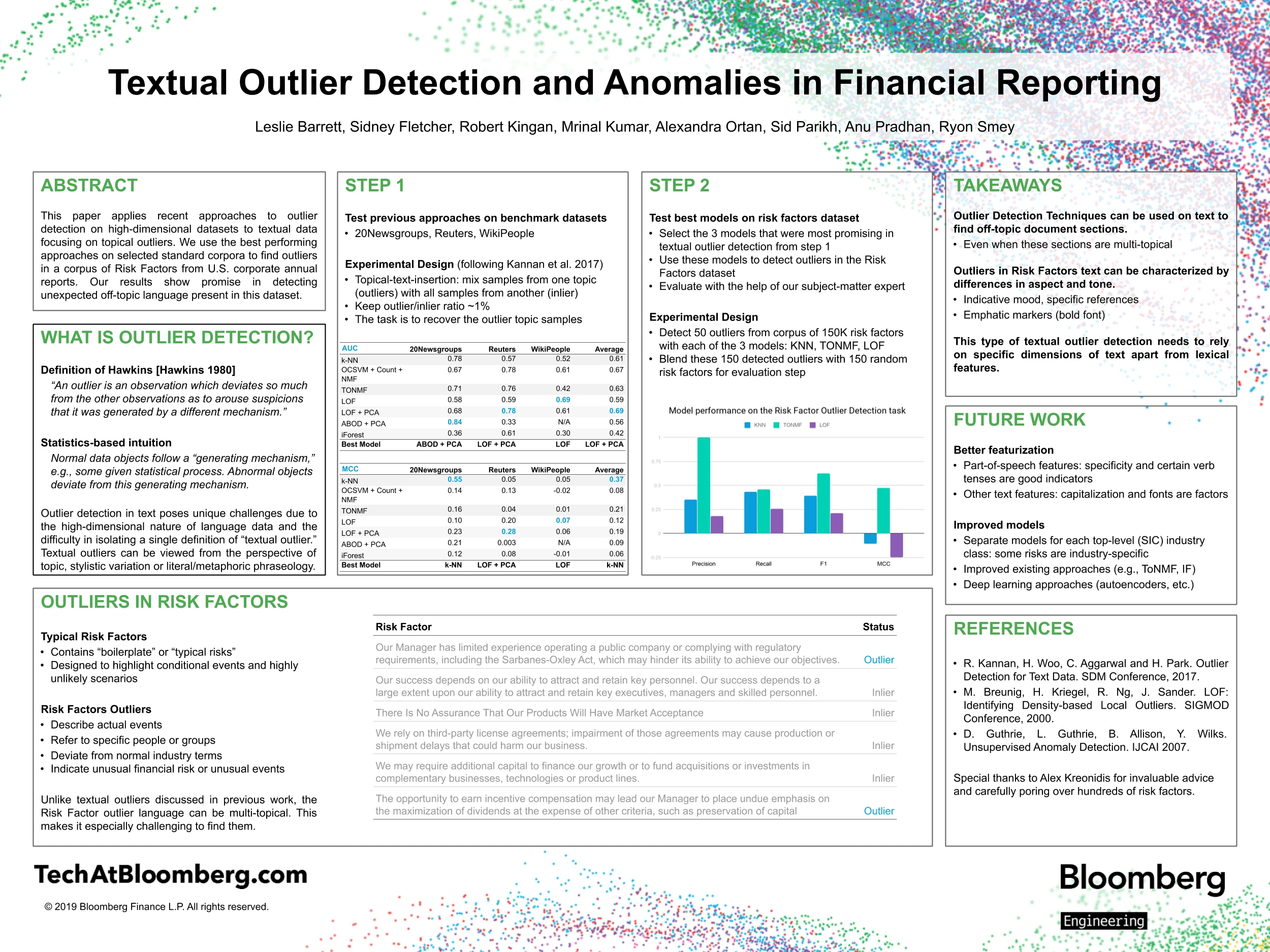

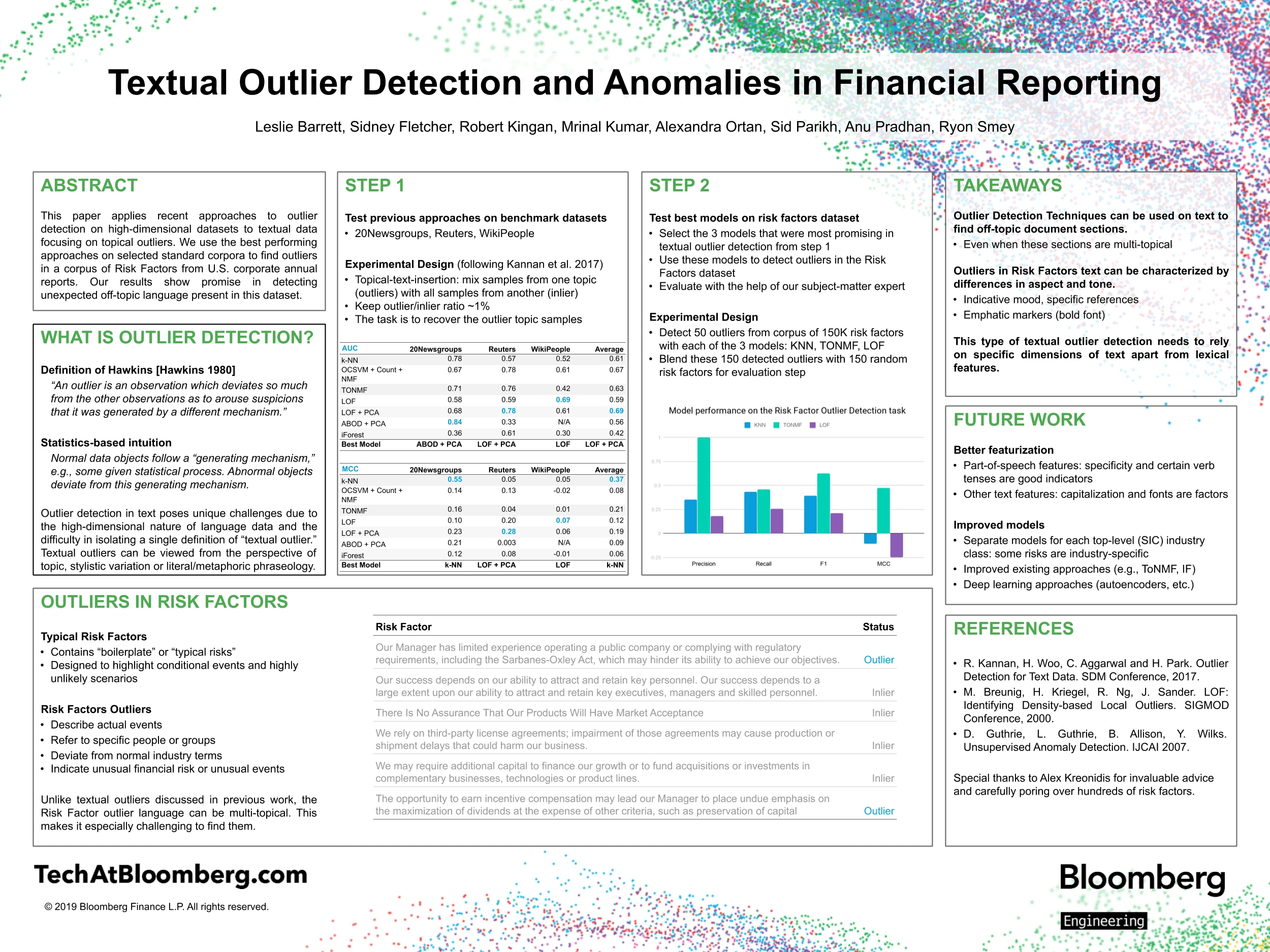

Textual Outlier Detection and Anomalies in Financial Reporting

It can be time-consuming for a financial analyst following a company to review the SEC filings associated with that entity every quarter. However, if the analyst received an alert whenever something unusual occurred, they could then conduct a more in-depth review of that document.

Unusual events described in the text are outliers — or observations that deviate from the normal expected descriptions of corporate risk. Such events may be associated with unexpected management changes, litigation or earnings decline. Outlier detection models are typically unsupervised, calculating distances or densities with respect to certain focal points in the data.

The high-dimensional nature of text makes distance calculations challenging, and outlier detection in text relies on a specific subset of models suited to this task. Furthermore, the concept of an outlier in text can be defined in different ways, including stylistic or factual outliers, requiring an understanding of a word’s meaning in relation to its surrounding text.

Much as credit card fraud detection identifies unusual spending behavior, outlier detection in risk factors looks for very specific language with unusual phraseology, noted Barrett. The “normal” risk factor language tends to be very vague, whereas specific language and references tend to indicate high-risk events.

To perform outlier detection, the text is first converted to a mathematical representation from which word co-occurrence distances can be calculated. In the general approach, a centroid is calculated, and words that lie very far from that centroid do not fit in. Those words are flagged as outliers.
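The centroid idea can be sketched in a few lines. The example below is a simplification and not the Bloomberg Law team's implementation: it scores whole sentences rather than individual words, represents each sentence as a plain bag-of-words count vector, and uses cosine distance. The four sentences and the function name `centroid_distances` are made up for illustration.

```python
import numpy as np

def centroid_distances(texts):
    """Cosine distance of each text's bag-of-words vector from the centroid."""
    vocab = sorted({word for text in texts for word in text.split()})
    index = {word: i for i, word in enumerate(vocab)}

    # One row per text, one column per vocabulary word (raw counts).
    X = np.zeros((len(texts), len(vocab)))
    for row, text in enumerate(texts):
        for word in text.split():
            X[row, index[word]] += 1.0

    centroid = X.mean(axis=0)
    cosine = (X @ centroid) / (
        np.linalg.norm(X, axis=1) * np.linalg.norm(centroid)
    )
    return 1.0 - cosine

# Hypothetical risk-factor sentences: three vague ones and one specific one.
texts = [
    "we may face risks that could affect results",
    "risks could affect our results and operations",
    "we may face risks that affect operations",
    "the ceo resigned after a federal subpoena",
]

distances = centroid_distances(texts)
outlier_index = int(np.argmax(distances))
print(texts[outlier_index])
```

The last sentence shares no vocabulary with the others, so it sits farthest from the centroid. A production system would need a richer representation than raw counts, since distances become less informative as the vocabulary grows.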

“In our research, we found the best model was based on non-negative matrix factorization, which is a fancy way of saying you’re trying to reconstruct a piece of data. Where you can’t do it, the ‘residuals,’ or parts that don’t fit, tend to be associated with anomalous language,” said Barrett. “We’re looking at these very closely and pulling them out as outliers – unusual words or phrases that can’t be factorized. This method turned out to be very robust at finding the instances of true outliers.”
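To make the reconstruction idea concrete, here is a minimal sketch of residual-based outlier scoring with non-negative matrix factorization. It assumes the standard multiplicative-update algorithm and a toy document-term count matrix; the article does not describe the team's actual model, rank, or features, so the function `nmf`, the term list, and the data are all hypothetical.

```python
import numpy as np

def nmf(X, rank, n_iter=200, eps=1e-9, seed=0):
    """Factor X ~ W @ H with non-negative W and H (multiplicative updates)."""
    rng = np.random.default_rng(seed)
    W = rng.random((X.shape[0], rank)) + 0.1
    H = rng.random((rank, X.shape[1])) + 0.1
    for _ in range(n_iter):
        H *= (W.T @ X) / (W.T @ W @ H + eps)
        W *= (X @ H.T) / (W @ H @ H.T + eps)
    return W, H

# Hypothetical document-term counts: rows are documents, columns are terms.
terms = ["may", "could", "risk", "subpoena", "resigned"]
X = np.array([
    [2, 1, 2, 0, 0],
    [4, 2, 4, 0, 0],
    [2, 1, 2, 0, 0],
    [6, 3, 6, 0, 0],
    [4, 2, 4, 0, 0],
    [0, 0, 0, 2, 2],   # the one document with unusual, specific language
], dtype=float)

W, H = nmf(X, rank=1)
residual = X - W @ H                      # the part that cannot be reconstructed
row_norms = np.linalg.norm(residual, axis=1)
outlier_row = int(np.argmax(row_norms))

# Terms that carry the largest unreconstructed mass in the flagged document.
top_terms = sorted(terms[j] for j in np.argsort(-residual[outlier_row])[:2])
print(outlier_row, top_terms)
```

With a single factor, the model spends its capacity on the dominant vague-language pattern, so the counts for "subpoena" and "resigned" are left almost entirely in the residual.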

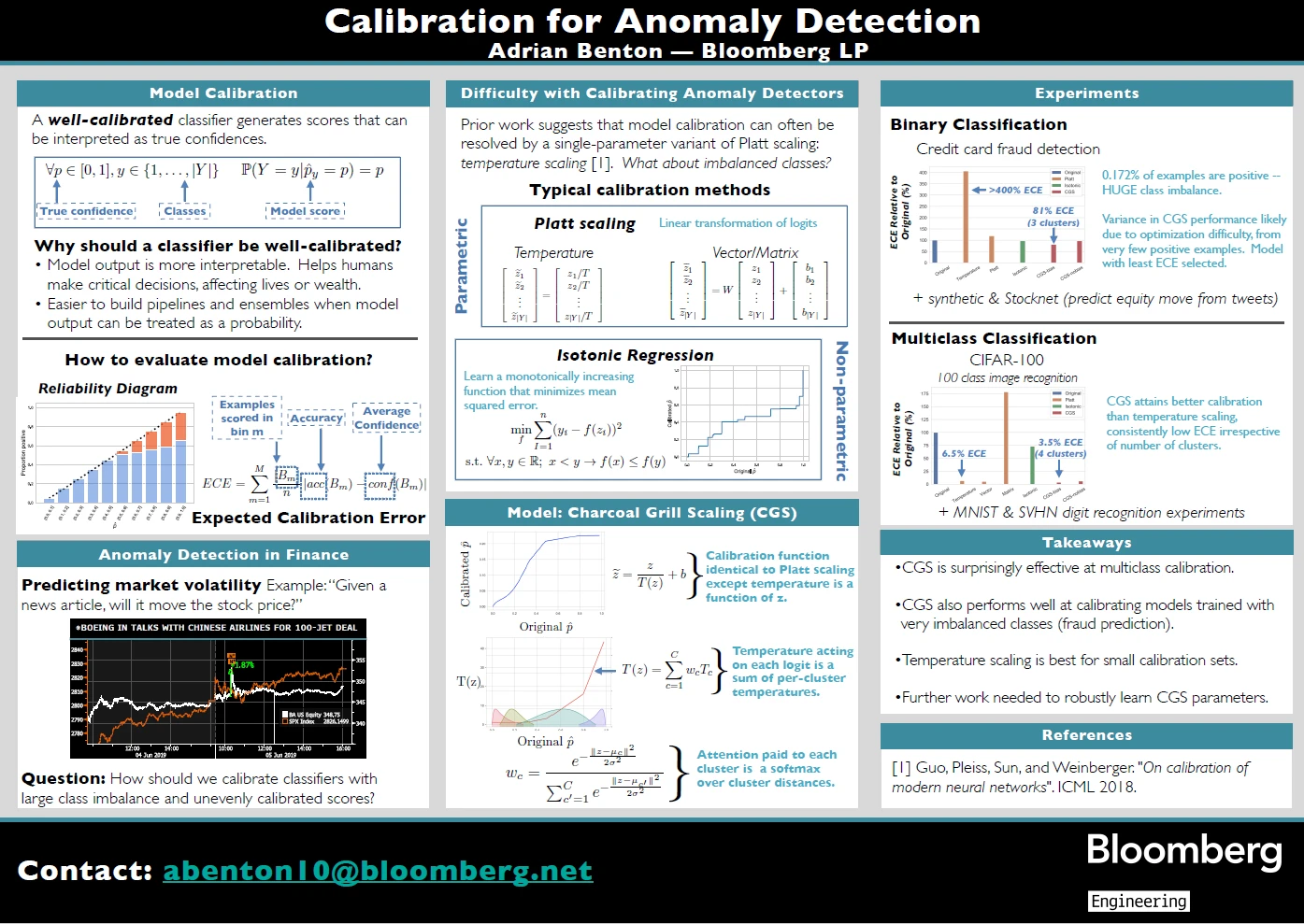

Calibration for Anomaly Detection

A well-calibrated machine learning model produces scores that reflect the true probability that certain events will occur. Any domain where a human must make a decision based on model scores can benefit from well-calibrated models, especially when the financial or human cost of making an ill-informed decision is great. Examples could include automated medical diagnosis, detecting credit card fraud, or predicting market volatility.

An input that a model scores at 0.9 might seem more likely to be a positive case than one scored at 0.4, but that 0.9 may not reflect a 90% probability unless the model is well-calibrated. Once a machine learning model is calibrated, its scores can be reliably interpreted as probabilities.
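One common way to check calibration is to group predictions by score and compare the average score in each group with the fraction of positives actually observed. The sketch below does this on made-up scores and labels; the helper name `reliability_table` and the data are assumptions for illustration, not taken from the paper.

```python
def reliability_table(scores, labels, n_bins=5):
    """Return (mean score, observed positive rate, count) for each non-empty bin."""
    bins = [[] for _ in range(n_bins)]
    for score, label in zip(scores, labels):
        idx = min(int(score * n_bins), n_bins - 1)  # keep a score of 1.0 in the last bin
        bins[idx].append((score, label))

    table = []
    for members in bins:
        if members:
            mean_score = sum(s for s, _ in members) / len(members)
            positive_rate = sum(y for _, y in members) / len(members)
            table.append((mean_score, positive_rate, len(members)))
    return table

# Hypothetical predictions: ten inputs scored 0.9 and ten scored 0.1.
scores = [0.9] * 10 + [0.1] * 10
labels = [1] * 6 + [0] * 4 + [0] * 9 + [1]

table = reliability_table(scores, labels)
for mean_score, positive_rate, count in table:
    print(f"mean score {mean_score:.2f}  observed rate {positive_rate:.2f}  n={count}")
```

In this toy data the inputs scored 0.1 turn out positive 10% of the time, so that region is well calibrated, while the inputs scored 0.9 are positive only 60% of the time, so the model is overconfident there.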

“Well-calibrated models help decision-makers take action based on these scores and also allow them to combine scores from many models,” said Benton. “Well-calibrated models can be used in an ensemble to produce a better classifier in a long pipeline.”

Models are typically trained on data with balanced classes, in which all labels are roughly equally likely. However, this research focuses on calibrating models trained on datasets with many more negative examples than positive ones.

Models can be calibrated in several ways, including temperature scaling. “While this is the simplest technique, it has been shown to be extremely effective across a range of datasets and models,” said Benton. “All you do is divide model scores by a fixed temperature – if the temperature is high, it’ll pull the model scores sharply to 50%, while if temperature is small, it’ll only temper model scores slightly.” For example, with a suitably chosen temperature, a model score of 0.1 would be raised to 0.15, and a score of 0.9 would be lowered to 0.85.
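The arithmetic behind that example can be reproduced with a short sketch. It assumes the common formulation for a binary classifier, in which the division is applied to the log-odds (logit) of the score before converting back to a probability. The temperature here is solved for directly so that 0.9 maps to 0.85; in practice it would be fitted on held-out data.

```python
import math

def logit(p):
    """Log-odds of a probability strictly between 0 and 1."""
    return math.log(p / (1.0 - p))

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def temperature_scale(p, temperature):
    """Divide the log-odds by the temperature, then map back to a probability."""
    return sigmoid(logit(p) / temperature)

# Assumption: pick the temperature that sends 0.9 to 0.85 (about 1.27).
temperature = logit(0.9) / logit(0.85)

low = temperature_scale(0.1, temperature)
high = temperature_scale(0.9, temperature)
print(f"T={temperature:.3f}  0.1 -> {low:.2f}  0.9 -> {high:.2f}")
```

Because the log-odds are symmetric around 0.5, the same temperature that lowers 0.9 to 0.85 raises 0.1 to 0.15, and a score of exactly 0.5 is left unchanged at any temperature.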

While temperature scaling works very well for standard benchmark datasets, classifiers trained as anomaly detectors require a different approach. Classifiers trained on very imbalanced classes tend to be well-calibrated when predicting negative examples, but are poorly calibrated when predicting anomalous classes.

The proposed model, called Charcoal Grill Scaling, is an extension of temperature scaling that offers more flexibility to calibrate score distributions generated by anomaly detectors. Rather than applying a single temperature to reduce model confidence for all predictions, it lets the temperature depend on the region the score falls in. This allows the calibration model to leave scores untouched where the classifier is already well calibrated, while reducing model confidence in regions where the classifier is poorly calibrated.
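The article does not give the functional form of the proposed method, so the following is only a hypothetical illustration of a region-dependent temperature, not the method from the paper. It assumes two regions split at a score of 0.5, with a temperature of 1.0 (no change) for low scores and 2.0 for high scores; the boundary, the temperatures, and the function names are all invented for this sketch.

```python
import math

def region_temperature(p, boundary=0.5, low_t=1.0, high_t=2.0):
    """Hypothetical rule: the temperature depends on which region the score is in."""
    return low_t if p < boundary else high_t

def region_scale(p, boundary=0.5, low_t=1.0, high_t=2.0):
    """Temperature-scale the log-odds using the temperature of the score's region."""
    temperature = region_temperature(p, boundary, low_t, high_t)
    log_odds = math.log(p / (1.0 - p))
    return 1.0 / (1.0 + math.exp(-log_odds / temperature))

negative_side = region_scale(0.1)   # region assumed well calibrated: left as is
anomaly_side = region_scale(0.9)    # region assumed overconfident: pulled toward 0.5
print(f"0.1 -> {negative_side:.2f}   0.9 -> {anomaly_side:.2f}")
```

Under these assumptions a score of 0.1 stays at 0.1, while a score of 0.9 is reduced to 0.75. Placing the boundary at 0.5 keeps the mapping continuous, because the log-odds are zero there regardless of temperature.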