Bloomberg AI Researchers Advance Paraphrase Generation & Chart Summarization in AAAI 2022 Papers

February 24, 2022

During the 36th AAAI Conference on Artificial Intelligence (AAAI 2022), which takes place this week (February 22-March 1, 2022), AI researchers and engineers from Bloomberg are showcasing their expertise in artificial intelligence (AI) and natural language processing (NLP) by publishing two papers: one as part of the Main Conference and another as part of the half-day AAAI-22 Workshop on Scientific Document Understanding (SDU@AAAI-22).

In addition, Anju Kambadur, Head of Bloomberg’s AI Engineering group, will be keynoting the half-day AAAI-22 Workshop on Knowledge Discovery from Unstructured Data in Financial Services (KDF 2022) at 10:15 AM PST on Tuesday, March 1, 2022. His keynote talk, “Search and Discovery in News and Research,” will look at various ways Bloomberg’s decade-long investment in NLP, information retrieval and search, and core machine learning (including deep learning) is enabling the application of autocompletion, query understanding, index enrichment, question answering, summarization, and relevance ranking so that the company’s clients across the global capital markets can discover insightful information from the complexity of unstructured data.

In the papers being published at AAAI 2022, the authors and their collaborators — among them past Bloomberg Data Science Ph.D. Fellow Hao Tan, a recipient of a Ph.D. in computer science from the University of North Carolina at Chapel Hill, and his advisor, Professor Mohit Bansal, who leads the MURGe-Lab (Multimodal Understanding, Reasoning, and Generation for Language Lab), one of the research labs in the UNC NLP group — present contributions to fundamental NLP problems in the areas of paraphrase generation and chart summarization.

We asked the authors of the papers to summarize their research and explain why the results were notable in advancing the state-of-the-art in the field of natural language processing:

Main Conference

Poster Session 2 (Thursday, February 24 @ 4:45-6:30 PM PST)

Oral Session 2 (Thursday, February 24 @ 6:30-7:45 PM PST)

Poster Session 7 (Saturday, February 26, 2022 @ 8:45-10:30 AM PST)

Novelty Controlled Paraphrase Generation with Retrieval Augmented Conditional Prompt Tuning

Jishnu Ray Chowdhury (University of Illinois Chicago), Yong Zhuang and Shuyi Wang (Bloomberg)

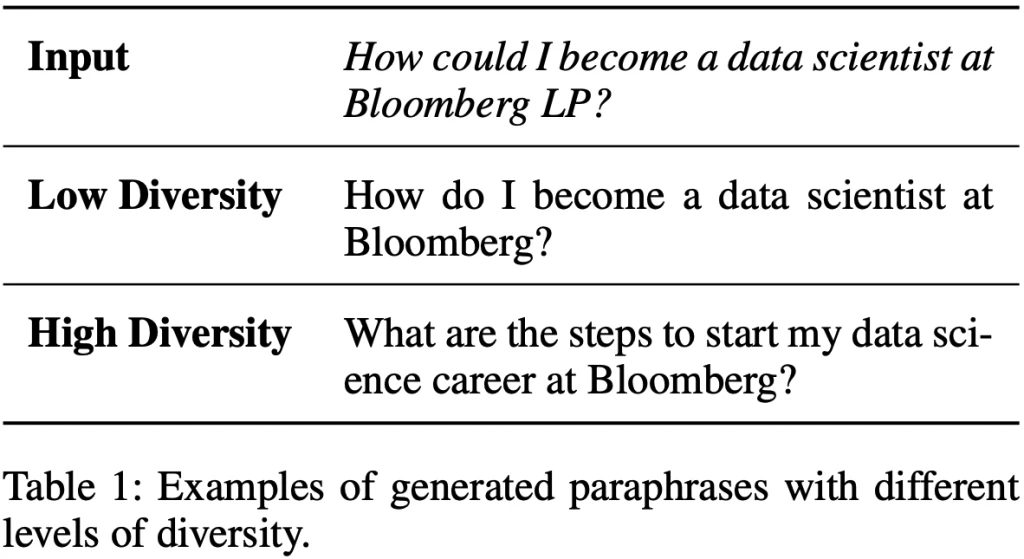

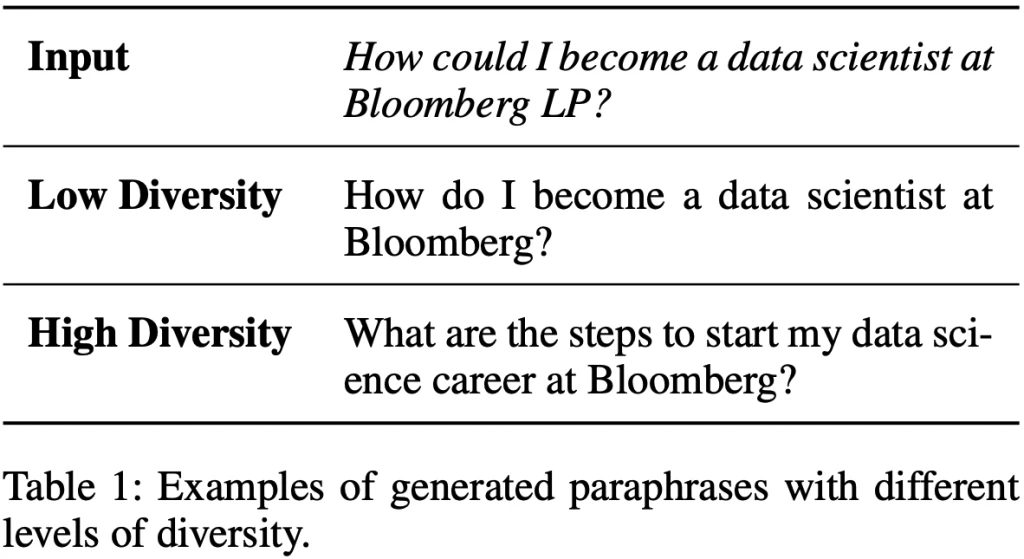

Yong: This work focuses on the task of paraphrase generation, which is a fundamental one in natural language processing. Our focus is on the diversity of generated paraphrase sentences, as high diversity in paraphrase generation improves robustness on many downstream applications by improving generalization. An example of this is natural language question answering, where starting from a seed set of questions used to train the question answering system, we can expand this set with diverse paraphrases. The net effect is a question answering system that is more robust to lexical and semantic diversity. Most existing works pay little attention to diversity when generating paraphrases. We propose a simple model-agnostic method that allows the user to control the level of novelty when generating paraphrases.

Paraphrase novelty refers to how different a generated sentence is from the reference one while still expressing the same meaning. Our goal is to let a user control that level of novelty in the generated paraphrases. As is now standard, we rely on large-scale pre-trained generative language models for generation. To this end, we use prompt tuning, and generation is based on the prompting template:

INPUT:<reference-sentence>;PARAPHRASE:_______
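As a rough illustration of the ideas above (not the authors' code), the sketch below fills in the prompting template and assigns a paraphrase to a novelty class. The novelty score used here, one minus the word-set overlap between the two sentences, and the 0.33/0.66 thresholds are assumptions made for this example only; the paper defines its own measure and classes.

```python
def build_prompt(reference: str) -> str:
    """Fill the template from the article: INPUT:<reference-sentence>;PARAPHRASE:"""
    return f"INPUT:{reference};PARAPHRASE:"


def novelty(reference: str, paraphrase: str) -> float:
    """Toy novelty score (an assumption): 1 - Jaccard overlap of word sets."""
    ref_words = set(reference.lower().split())
    par_words = set(paraphrase.lower().split())
    union = ref_words | par_words
    if not union:
        return 0.0
    return 1.0 - len(ref_words & par_words) / len(union)


def novelty_class(score: float, low: float = 0.33, high: float = 0.66) -> str:
    """Bucket a score into Low / Medium / High using hypothetical thresholds."""
    if score < low:
        return "Low"
    if score < high:
        return "Medium"
    return "High"


if __name__ == "__main__":
    reference = "how do i learn python quickly"
    candidate = "what is the fastest way to pick up python"
    print(build_prompt(reference))
    print(novelty_class(novelty(reference, candidate)))
```

A paraphrase that copies the reference word for word scores 0 and lands in the Low class, while one that shares almost no words with it lands in High.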

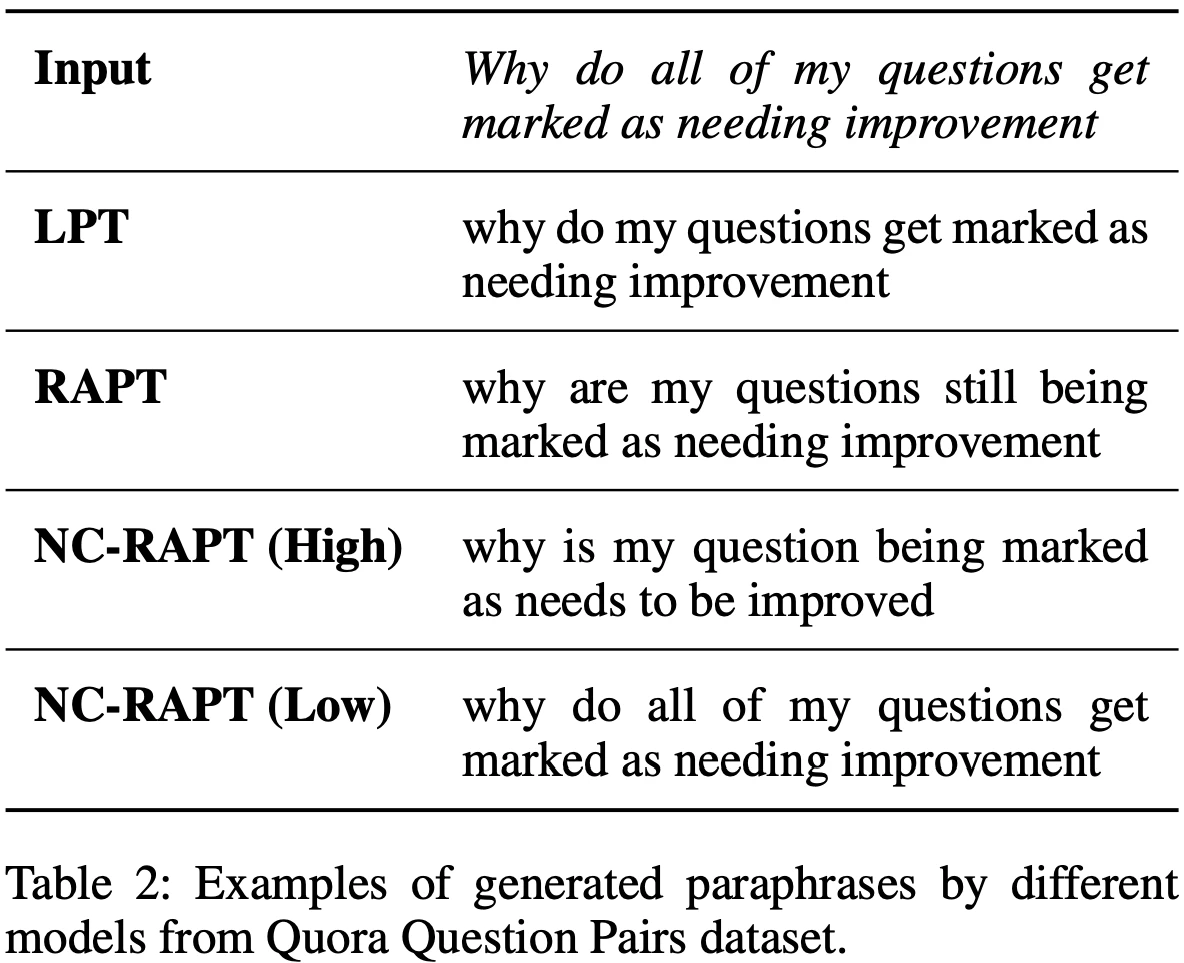

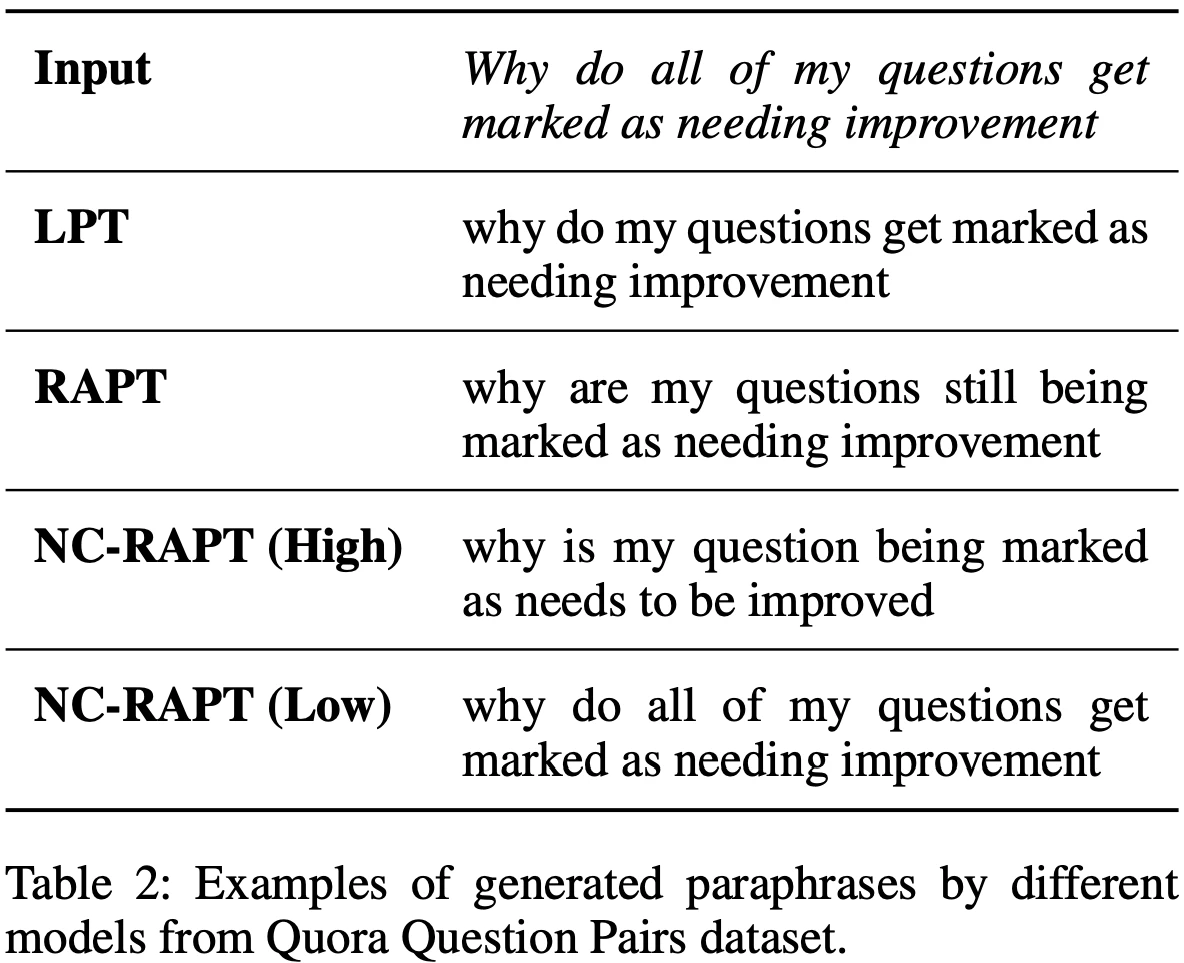

We learn different prefix (INPUT) and infix (PARAPHRASE) embeddings for different novelty classes, such as High, Medium, and Low. Users can then explicitly set the novelty level of the generated paraphrase by choosing which embeddings to use. In Table 2 below, we show some example generations produced by specifying the novelty level in our proposed method, Novelty Conditioned Retrieval Augmented Prompt Tuning (NC-RAPT). Using prompt tuning saves us from having to fine-tune the complete large-scale language model, which is an inefficient process. We also augment input prompts by retrieving semantically similar inputs from the training dataset. Extensive experimental results demonstrate the effectiveness of our proposed approach. To the best of our knowledge, this is one of the first works utilizing prompt tuning on the task of paraphrase generation.
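The combination of novelty-specific prompts and retrieval can be sketched roughly as follows. This is a toy under stated assumptions, not the NC-RAPT implementation: the learned prefix and infix embeddings are represented by plain text markers such as `INPUT[High]:`, the training pairs are invented, the similarity function is a bag-of-words cosine standing in for a semantic retriever, and the way retrieved examples are concatenated ahead of the query is a guess at one reasonable layout.

```python
import math
from collections import Counter

# Hypothetical training pairs: (input sentence, reference paraphrase).
TRAIN_PAIRS = [
    ("how can i learn python fast", "what is the quickest way to pick up python"),
    ("where is the nearest train station", "how do i get to the closest railway stop"),
    ("what is the best way to cook rice", "how should i prepare rice"),
]

# Text markers standing in for learned (prefix, infix) embeddings per class.
PROMPTS = {
    level: (f"INPUT[{level}]:", f"PARAPHRASE[{level}]:")
    for level in ("Low", "Medium", "High")
}


def cosine(a: str, b: str) -> float:
    """Bag-of-words cosine similarity (a stand-in for semantic similarity)."""
    ca, cb = Counter(a.lower().split()), Counter(b.lower().split())
    dot = sum(ca[w] * cb[w] for w in ca)
    norm = math.sqrt(sum(v * v for v in ca.values())) * math.sqrt(
        sum(v * v for v in cb.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, k: int = 1):
    """Return the k training pairs whose inputs are most similar to the query."""
    ranked = sorted(TRAIN_PAIRS, key=lambda pair: cosine(query, pair[0]), reverse=True)
    return ranked[:k]


def build_augmented_prompt(query: str, level: str, k: int = 1) -> str:
    """Prepend retrieved examples, then the query, using the chosen class markers."""
    prefix, infix = PROMPTS[level]
    examples = "".join(f"{prefix}{src};{infix}{tgt}\n" for src, tgt in retrieve(query, k))
    return f"{examples}{prefix}{query};{infix}"


if __name__ == "__main__":
    print(build_augmented_prompt("how do i learn python quickly", "High"))
```

Switching the `level` argument from `"High"` to `"Low"` changes only which markers are used. In the real method, that choice selects a different set of learned embeddings while the language model itself stays frozen.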

Why are these results notable?

Shuyi: The large number of trainable parameters is a pain point for large-scale language models, since they not only take a long time to train but also require large amounts of data. By leveraging prompt tuning, the number of trainable parameters is reduced by orders of magnitude. More importantly, we demonstrate that even with a much smaller number of parameters, it is possible to achieve strong results. Also, to the best of our knowledge, this is one of the first works to explore prompt tuning on the task of paraphrase generation.
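To give a feel for the "orders of magnitude" claim, here is a back-of-the-envelope calculation. Every number in it is an illustrative assumption rather than a figure from the paper: a frozen model with 774 million parameters, an embedding width of 1,280, and 20 tunable tokens for each prefix and infix prompt across three novelty classes.

```python
import math

# All values below are hypothetical, chosen only to illustrate the scale.
MODEL_PARAMS = 774_000_000  # parameters in the frozen language model
HIDDEN_SIZE = 1280          # width of one token embedding
PROMPT_TOKENS = 20          # tunable tokens per prompt
PROMPTS_PER_CLASS = 2       # one prefix prompt and one infix prompt
NOVELTY_CLASSES = 3         # High, Medium, Low

# Prompt tuning trains only the prompt embeddings; the model stays frozen.
prompt_params = PROMPT_TOKENS * HIDDEN_SIZE * PROMPTS_PER_CLASS * NOVELTY_CLASSES
ratio = MODEL_PARAMS / prompt_params
orders_of_magnitude = math.log10(ratio)

if __name__ == "__main__":
    print(f"trainable prompt parameters: {prompt_params:,}")
    print(f"full model / prompt parameters: {ratio:,.0f}x")
    print(f"orders of magnitude: {orders_of_magnitude:.1f}")
```

Under these assumptions, only 153,600 values are trained, between three and four orders of magnitude fewer than full fine-tuning would update.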

How does your work advance the state-of-the-art in the field of NLP?

Yong: While there have been many works utilizing large-scale language models (e.g., GPT models) on the task of paraphrase generation, existing works put less emphasis on the novelty of generated sentences. In addition, training large-scale language models is costly in terms of data and compute power. To mitigate these requirements, our work takes advantage of prompt tuning and demonstrates its effectiveness on the paraphrase generation task. Furthermore, we use a retrieval-based approach to further improve the performance of prompt tuning, which could be a paradigm shift for paraphrase generation or even for the broader research area of generation tasks.

Workshop on Scientific Document Understanding

March 1, 2022 @ 10:35-10:45 AM PST

Scientific Chart Summarization: Datasets and Improved Text Modeling

Hao Tan (UNC Chapel Hill), Chen-Tse Tsai, Yujie He (Bloomberg) and Mohit Bansal (UNC Chapel Hill)

Please summarize your research.

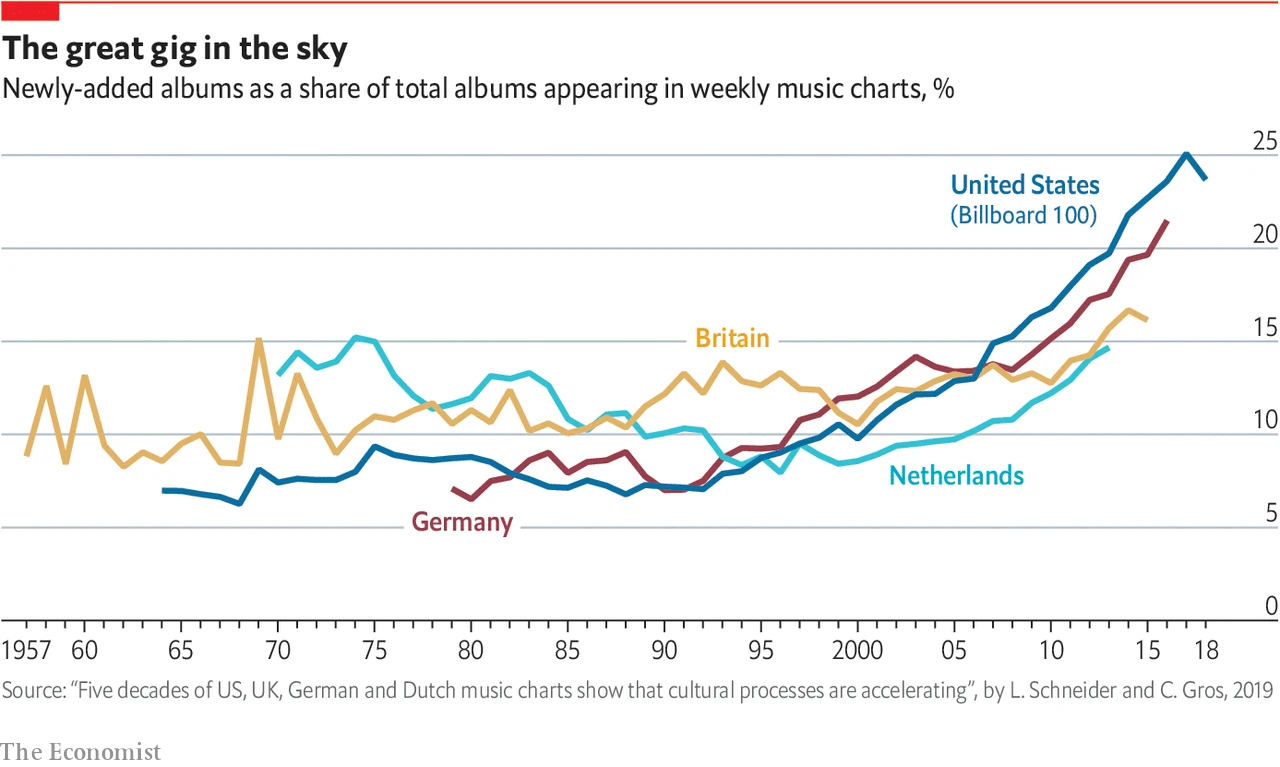

Chen-Tse: In this research paper, we explore the task of chart summarization, in which the goal is to generate sentences that describe the salient information in a chart image. Below is an example chart image, with a candidate summary: The U.S. became the largest provider of albums around 2005.

Charts usually convey the key messages in a multimodal document. Summarizing these charts makes them easier to understand and navigate, which is essential in an era where so much information is available. This is especially important when the chart illustrates something that is not conveyed in the text of a document, which often occurs in a magazine or newspaper, for example.

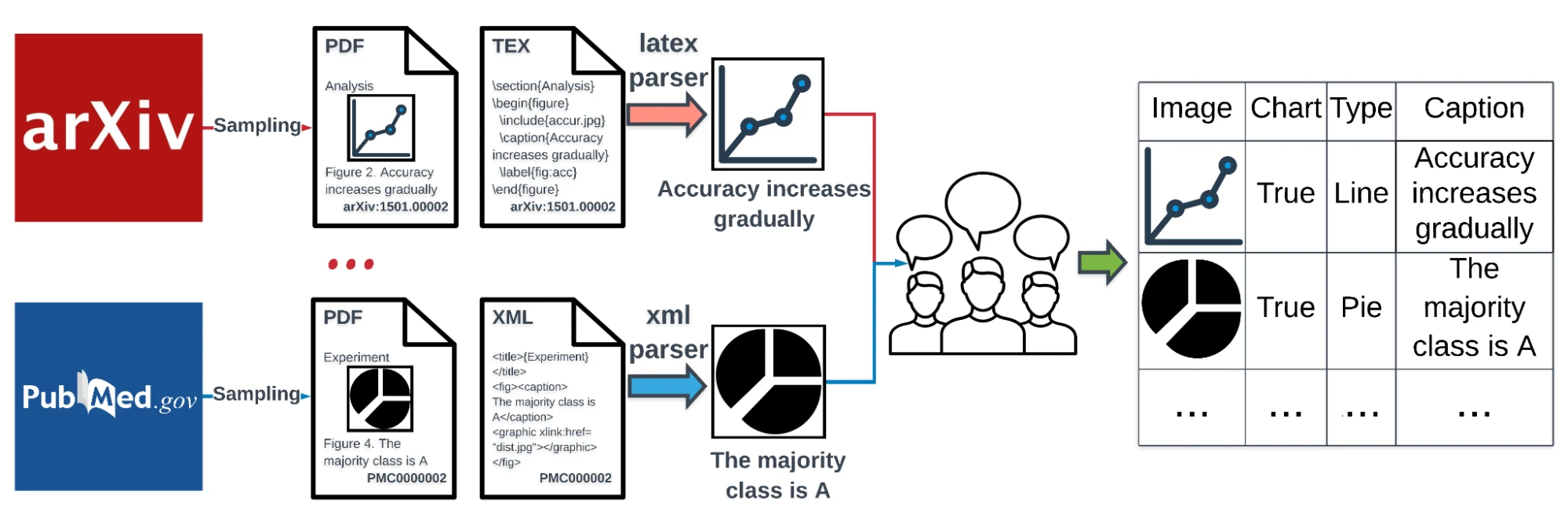

In order to study this chart summarization problem, we first create a dataset of single-chart images and their captions from research papers in PubMed Central (PMC) and arXiv. The overview of our dataset’s creation pipeline is shown in the following figure:

We propose a novel chart summarization model. Besides the standard image captioning component with ResNet and Long Short-Term Memory (LSTM), our model also employs an Optical Character Recognition (OCR) text representation component to capture both the text embedding and its position. The visual and textual representations are then connected to a large pre-trained language decoder via pre-embedding and cross-attention approaches, respectively. Experimental results show that the proposed model performs significantly better than an image captioning baseline: under the semi-supervised setting, our model achieves a BLEU score of 4.47 and a CIDEr score of 10.30, both of which outperform the baseline (BLEU score: 2.20; CIDEr score: 4.49).
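One way to picture an OCR text representation that captures "both the text embedding and its position" is sketched below. It is a simplified illustration, not the model from the paper: the `OcrToken` type, the character-based `toy_text_embedding`, and the choice to concatenate normalized bounding-box corners onto the text vector are all assumptions made for this example. A real system would use learned embeddings and feed the result into the decoder.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class OcrToken:
    """A hypothetical OCR result: recognized text plus a pixel bounding box."""
    text: str
    x0: float
    y0: float
    x1: float
    y1: float


def toy_text_embedding(text: str, dim: int = 4) -> List[float]:
    """Stand-in for a learned text embedding: folds character codes into dim slots."""
    vec = [0.0] * dim
    for i, ch in enumerate(text.lower()):
        vec[i % dim] += ord(ch) / 1000.0
    return vec


def position_features(tok: OcrToken, width: float, height: float) -> List[float]:
    """Normalize box corners to [0, 1] so they do not depend on image size."""
    return [tok.x0 / width, tok.y0 / height, tok.x1 / width, tok.y1 / height]


def ocr_representation(tokens: List[OcrToken], width: float, height: float) -> List[List[float]]:
    """Concatenate text and position features for every OCR token."""
    return [
        toy_text_embedding(tok.text) + position_features(tok, width, height)
        for tok in tokens
    ]


if __name__ == "__main__":
    tokens = [OcrToken("Year", 100, 380, 140, 400), OcrToken("Albums", 5, 150, 25, 250)]
    for tok, vec in zip(tokens, ocr_representation(tokens, width=400, height=400)):
        print(tok.text, [round(v, 3) for v in vec])
```

Keeping position alongside the text lets a downstream model distinguish, for example, an axis label at the bottom of the chart from a legend entry in a corner, even when both contain similar words.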

Why are these results notable?

Yujie: Charts are usually used to visually summarize important information that a document intends to convey. Summarizing the primary message in a chart is an important step towards understanding a multimodal document. Our proposed scientific chart summarization model opens up opportunities to 1) extract salient information from charts, 2) enable chart search using natural language, and 3) make charts and figures more accessible to blind or visually impaired readers.

How does your work advance the state-of-the-art in the field of NLP?

Yujie: The scientific chart dataset, proposed model, and comprehensive analysis will be helpful for future research in scientific document understanding, chart summarization, and multimodal learning.