Bloomberg’s AI Researchers & Engineers Publish 4 NLP Papers at NAACL-HLT 2021

June 07, 2021

During the 2021 Annual Conference of the North American Chapter of the Association for Computational Linguistics (NAACL-HLT 2021) this week, researchers and engineers from Bloomberg’s AI Group are showcasing their expertise in natural language processing (NLP) and computational linguistics by publishing 4 papers at the conference.

In these papers, the authors and their collaborators (who include Bloomberg Ph.D. Fellow Tianze Shi of Cornell University and computer science Ph.D. student Lisa Bauer of The University of North Carolina at Chapel Hill, who performed her research as an intern in our AI Group) present contributions both to fundamental NLP problems in the areas of syntactic parsing and neural language modeling and to applications of NLP technology focused on citation worthiness.

We asked the authors to summarize their research and explain why the results are notable in advancing the state of the art in the field of computational linguistics:

Tuesday, June 8, 2021

Session 7E: Machine Learning for NLP: Classification and Structured Prediction Models (9:00-10:20 AM PDT)

Diversity-Aware Batch Active Learning for Dependency Parsing

Tianze Shi (Cornell University), Adrian Benton, Igor Malioutov and Ozan İrsoy (Bloomberg)

Please summarize your research.

Ozan: Modern language technology relies on large amounts of human-annotated data to train accurate models for language understanding. Expensive data annotation is required whenever we develop systems for new languages or new domains of interest. This annotation cost is particularly burdensome for structured tasks like dependency parsing, a core component in many NLP pipelines that analyze syntactic structures from raw texts for further downstream processing. Annotating dependency parse trees requires high levels of linguistic expertise, extensive training of human annotators, and significant investment in terms of annotation time.

Active learning is a paradigm aimed at reducing the amount of human annotation effort needed to train machine learning models by selecting examples for annotation that will improve model accuracy the most. In order to reduce annotation task overhead, this iterative annotation process is often done in batches. One of the challenges in formulating an effective strategy for selecting informative examples in batch active learning is that, while individual sentences in a batch may be informative on their own, they may carry redundant information, so the batch as a whole may be less informative than its individual sentences suggest.

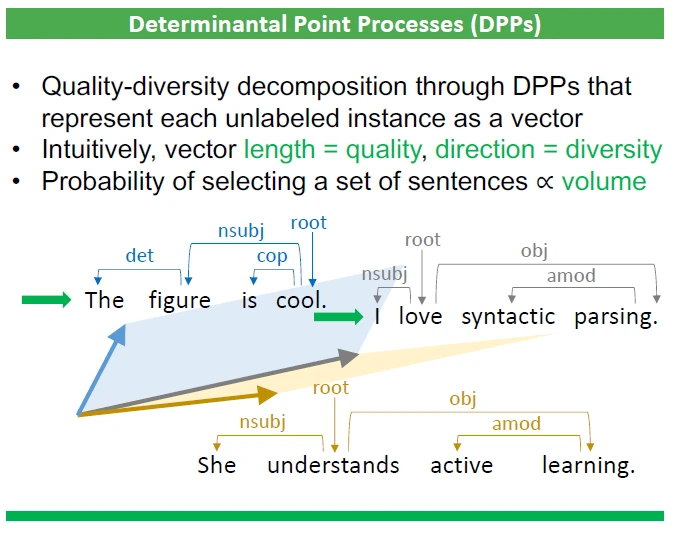

In this work, we propose using Determinantal Point Processes (DPPs), a class of probability distributions over subsets of elements, to select sentences that are both challenging to the parser and also diverse — structurally different from each other.
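To make the idea of selecting sentences that are both challenging and diverse concrete, here is a minimal sketch of greedy subset selection under a DPP-style kernel. It is an illustration under stated assumptions, not the procedure from the paper: the per-sentence `quality` scores (standing in for parser uncertainty), the `features` vectors (standing in for structural representations), the cosine similarity, and the greedy search are all hypothetical choices made for this example.

```python
import math

def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def determinant(matrix):
    """Determinant via Gaussian elimination with partial pivoting."""
    m = [row[:] for row in matrix]
    n = len(m)
    det = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if m[pivot][col] == 0.0:
            return 0.0
        if pivot != col:
            m[col], m[pivot] = m[pivot], m[col]
            det = -det
        det *= m[col][col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n):
                m[r][c] -= factor * m[col][c]
    return det

def greedy_dpp_select(quality, features, k):
    """Greedily grow a subset S that keeps det(L_S) as large as possible.

    L[i][j] = quality[i] * similarity(i, j) * quality[j], so the determinant
    rewards high-quality items and penalizes mutually similar ones.
    """
    n = len(quality)
    kernel = [[quality[i] * cosine(features[i], features[j]) * quality[j]
               for j in range(n)] for i in range(n)]
    selected = []
    for _ in range(min(k, n)):
        best_item, best_det = None, 0.0
        for i in range(n):
            if i in selected:
                continue
            idx = selected + [i]
            sub = [[kernel[a][b] for b in idx] for a in idx]
            d = determinant(sub)
            if d > best_det:
                best_item, best_det = i, d
        if best_item is None:  # every remaining item is fully redundant
            break
        selected.append(best_item)
    return selected

# Hypothetical toy data: items 0 and 1 are near-duplicates with high scores,
# item 2 scores lower but is structurally different.
quality = [1.0, 0.9, 0.5]
features = [[1.0, 0.0], [0.98, 0.2], [0.0, 1.0]]
print(greedy_dpp_select(quality, features, k=2))  # [0, 2]
```

In this toy run, a purely score-based strategy would pick items 0 and 1, whereas the determinant-based criterion skips the near-duplicate and picks item 2 instead.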

Why are these results notable? How do they advance the state-of-the-art in the field of natural language processing?

Ozan: We found that using DPPs to select examples for training the parser is indeed helpful. This approach outperforms standard active learning techniques, especially early in the training process, and it is also robust in settings where the entire dataset is highly duplicative. With our proposed DPP-based active learning method, we come within 2% labeled attachment score (a standard accuracy measure for dependency parsing) of training on the full training set, while using only 2% of it. This suggests that our method can significantly reduce annotation costs for bootstrapping dependency parsers for new domains of interest.
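For readers unfamiliar with the metric, labeled attachment score is the fraction of tokens whose predicted head and dependency label both match the gold annotation. The following is a minimal sketch; the function name and the `(head, label)` tuple representation are assumptions made for this example, and real evaluation scripts often add conventions (such as punctuation handling) that are not shown here.

```python
def labeled_attachment_score(gold, predicted):
    """Fraction of tokens with both the correct head and the correct label.

    Each argument is a list with one (head_index, relation_label) pair
    per token, in the same token order.
    """
    if len(gold) != len(predicted):
        raise ValueError("gold and predicted must cover the same tokens")
    if not gold:
        raise ValueError("cannot score an empty sequence")
    correct = sum(1 for g, p in zip(gold, predicted) if g == p)
    return correct / len(gold)

# "She reads books": heads are 1-based token indices, 0 marks the root.
gold = [(2, "nsubj"), (0, "root"), (2, "obj")]
predicted = [(2, "nsubj"), (0, "root"), (2, "nmod")]  # right head, wrong label
print(round(labeled_attachment_score(gold, predicted), 3))  # 0.667
```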

Beyond syntactic processing, this work stands to help with the development of downstream models of many other natural language understanding tasks that rely on accurate syntactic parsers — fundamental tasks like question answering, open-domain information extraction, and machine translation, among others.

Session 8E: Syntax: Tagging, Chunking, and Parsing (10:20-11:40 AM PDT)

Learning Syntax from Naturally-Occurring Bracketings

Tianze Shi (Cornell University), Ozan İrsoy, Igor Malioutov (Bloomberg), Lillian Lee (Cornell University)

Please summarize your research.

Igor: Constituency parsing — the analysis of sentences in terms of hierarchical structures — is a core NLP task. The current dominant paradigm is supervised parsing, where statistical parsers are trained on large quantities of annotated parse trees. This approach lends itself well to high accuracy, but requires costly human annotation, which could be a high barrier to entry for developing parsers for new domains and languages. Alternatively, unsupervised parsers can induce structures directly from unlabeled texts, removing the need for annotation completely, but these are more susceptible to error.

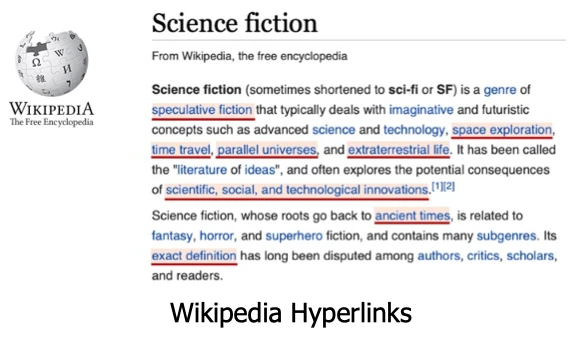

In this work, we explore the middle ground between supervised and unsupervised approaches: learning from distant supervision by leveraging annotation from other sources and tasks. Here are two examples:

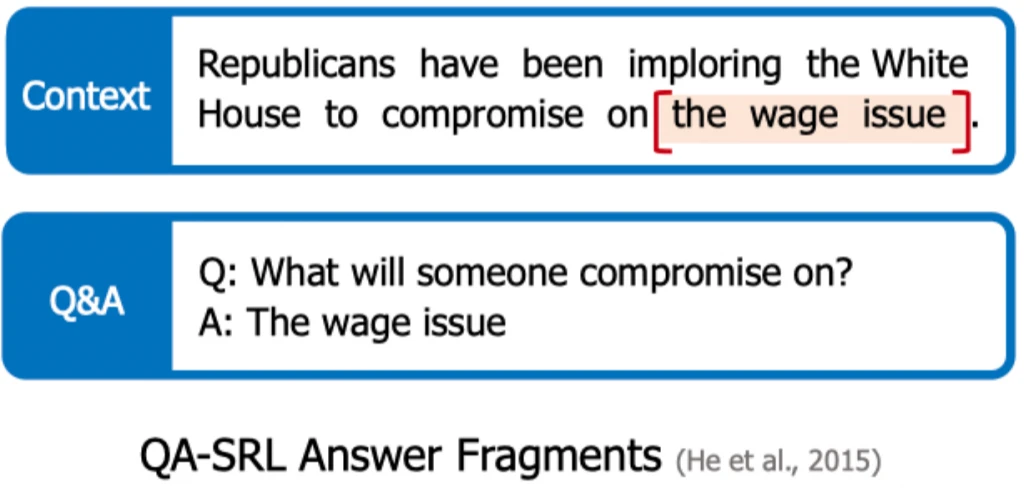

- Wikipedia pages are richly annotated with hyperlinks that point to other pages, and the corresponding anchor text fragments usually form valid syntactic phrases.

- Another source of signal we considered is answer fragments to semantically-oriented questions. When we give short answers to questions, those answers also tend to function as syntactic phrases.

To incorporate these alternative data sources, we modify the learning objective by training discriminative constituency parsers with structured ramp loss and propose two loss functions to directly penalize predictions in conflict with available partial bracketing data. The resulting approach has a simpler formulation than most unsupervised parsers.
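One way to picture a prediction "in conflict with" partial bracketing data is as a crossing bracket: a predicted constituent that overlaps a distantly supervised span without either containing the other. The sketch below only counts such conflicts; it is not the structured ramp loss or either of the loss functions from the paper, and the function names and half-open `(start, end)` span convention are assumptions made for illustration.

```python
def spans_cross(a, b):
    """True if half-open spans a and b overlap without one nesting in the other."""
    (i, j), (k, l) = a, b
    return i < k < j < l or k < i < l < j

def count_conflicts(predicted_spans, partial_brackets):
    """Number of (predicted span, observed bracket) pairs that cross."""
    return sum(
        1
        for p in predicted_spans
        for b in partial_brackets
        if spans_cross(p, b)
    )

# Tokens: 0 the, 1 stock, 2 market, 3 fell, 4 sharply
# Hypothetical hyperlink anchor text covering "the stock market".
brackets = [(0, 3)]

tree_a = [(0, 5), (0, 3), (1, 3), (3, 5)]  # keeps "the stock market" together
tree_b = [(0, 5), (0, 2), (2, 5), (2, 4)]  # splits it as "the stock | market fell ..."

print(count_conflicts(tree_a, brackets))  # 0
print(count_conflicts(tree_b, brackets))  # 2
```

A training signal built on this notion would prefer `tree_a`, since it is compatible with the observed bracket even though the bracket says nothing about the rest of the sentence.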

Why are these results notable? How do they advance the state-of-the-art in the field of NLP?

Igor: In our experiments, we found that our distantly-supervised parsers achieve accuracies competitive with the state-of-the-art on unsupervised parsing, despite training on smaller amounts of data or using out-of-domain data.

Our work suggests that weak supervision can be a fruitful middle ground between supervised and unsupervised approaches. It offers a pragmatic combination of low annotation cost for the target task at hand, well-defined optimization objective functions for underlying machine-learning models, and strong resulting accuracy.

We hope our work will motivate more research into the utility of other types of distant supervision signals, in both syntactic parsing and beyond. This work has the potential to drive advances in the development of downstream semantic analysis and reasoning models for tasks like question answering in financial and legal domains, among others.

Wednesday, June 9, 2021

Session 13A: NLP Applications (10:20-11:40 AM PDT)

On the Use of Context for Predicting Citation Worthiness of Sentences in Scholarly Articles

Rakesh Gosangi, Ravneet Arora, Mohsen Gheisarieha, Debanjan Mahata, Haimin (Raymond) Zhang (Bloomberg)

Please summarize your research.

Rakesh: The goal of citation worthiness is to figure out if a given sentence from a scholarly article requires a citation, as shown in Table 1. Providing accurate citations is very important to scientific writing because it helps readers understand how the research work relates to existing scientific literature. Understanding citation worthiness is useful for recommending citations to authors and also helps normalize the citation process.

![Table 1. Sentences with the [Cite] tag are the ones that provide citation to another work](https://assets.bbhub.io/image/v1/resize?width=auto&type=webp&url=https://assets.bbhub.io/image/v1/resize?width=auto&type=webp&url=https://assets.bbhub.io/company/sites/51/2021/06/Table-1-1024x278.png)

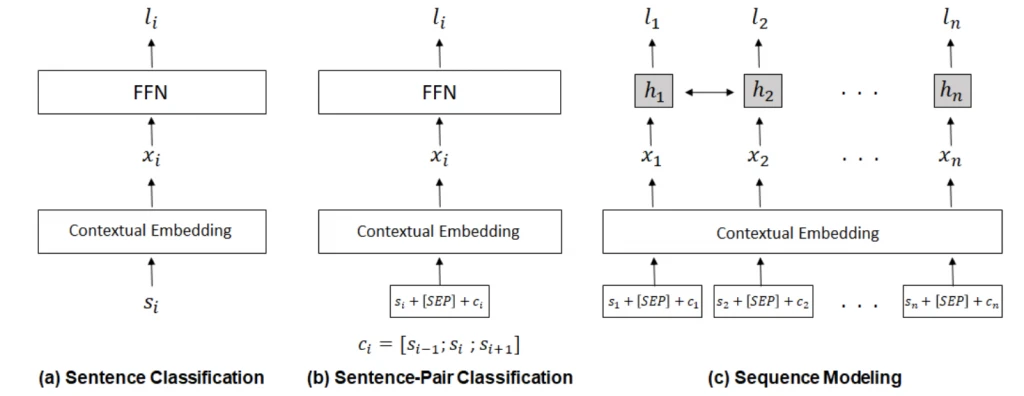

As shown in Table 1, sentences with the [Cite] tag are those that provide a citation to another work. In this paper, we specifically study how the context of the surrounding sentences influences the citation worthiness of a given sentence. We expect that incorporating information from context can help better predict which sentences need citations. As part of this work, we built a new dataset by processing scholarly papers published in the ACL Anthology during the last twenty years. We also proposed a new formulation and models that aid in learning better representations of context for predicting the citation worthiness of sentences, as shown in the accompanying figure. Our results demonstrate that context helps improve the prediction of citation worthiness, and we also obtain state-of-the-art performance for this research problem.
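As a rough illustration of what "incorporating context" can mean at the input level, the sketch below pairs each sentence with a fixed number of neighboring sentences on either side. This is only an assumed setup for illustration, not the formulation or models from the paper; the function name, the `window` parameter, and the `<pad>` token are hypothetical.

```python
def build_context_windows(sentences, window=1, pad="<pad>"):
    """Pair each sentence with `window` neighbors on each side.

    Returns a list of (left_context, target, right_context) tuples,
    padding at the start and end of the document.
    """
    sentences = list(sentences)
    examples = []
    for idx, target in enumerate(sentences):
        left = sentences[max(0, idx - window):idx]
        right = sentences[idx + 1:idx + 1 + window]
        left = [pad] * (window - len(left)) + left
        right = right + [pad] * (window - len(right))
        examples.append((left, target, right))
    return examples

paragraph = [
    "Dependency parsing is a core NLP task.",
    "Neural parsers have improved accuracy substantially.",
    "We evaluate on an English newswire corpus.",
]
for left, target, right in build_context_windows(paragraph, window=1):
    print(left, "|", target, "|", right)
```

A classifier would then label the target sentence as needing a citation or not, with the neighbors available as additional input; varying `window` is one simple way to ask how much context is enough.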

Why are these results notable?

Ravneet: The paper demonstrates the performance improvements achieved by incorporating context into the task of citation worthiness. In contrast to previous studies, experiments in this paper systematically examine how context influences the performance metrics, while also answering the important question of how much context is enough for the task. Results published in the paper show that performance on citation worthiness prediction improves overall by around 13% with the inclusion of context. In addition, since different sections of a scientific paper follow their own distinctive writing style and provide different information, the influence of context is also studied with respect to section-level performance.

We have backed up our results with a subjective analysis that reveals three groups of citation trends that benefit from the additional context. Our paper conducts experiments for sentences in scholarly articles, and we believe these findings are relevant to other domains like news, Wikipedia, and legal documents.

How does your work advance the state-of-the-art in the field of NLP?

Debanjan: Understanding the context of the narrative before citing an article in a scientific paper is quite natural for human authors. While there have been regular advances in generative approaches (e.g., GPT-3) that enable NLP models to generate human-like text and author essays, existing works do not look at how to generate sentences for referring to work relevant to scientific papers. Work in this area could help develop intelligent writing assistants for scholarly documents, as well as other domains such as news, where a claim needs a citation of related articles. Imagine a technology that can generate the related work section of the paper you are writing given the context of the portions you have already written. Our modeling approach and the dataset developed as part of our work are a first step towards this goal. Even before thinking about generating citation-worthy text, we need to first identify it, which we do in this work in order to advance the state of NLP in understanding scholarly documents.

Thursday, June 10, 2021

Deep Learning Inside Out (DeeLIO): The 2nd Workshop on Knowledge Extraction and Integration for Deep Learning Architectures

Poster Session B (10:15-11:15 AM PDT)

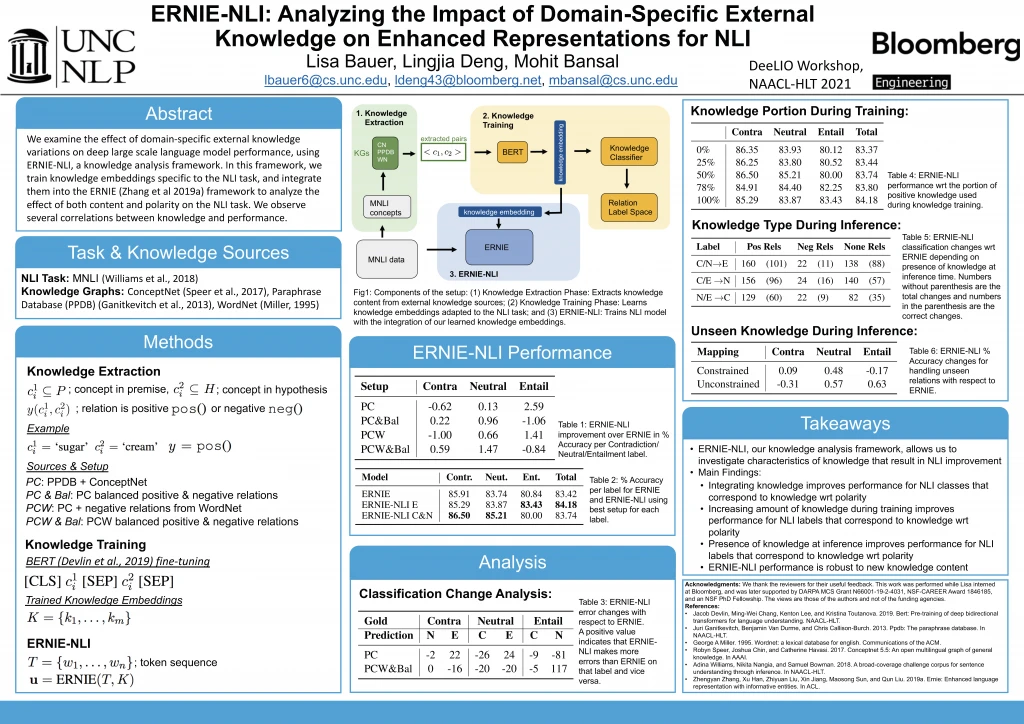

ERNIE-NLI: Analyzing the Impact of Domain-Specific External Knowledge on Enhanced Representations for NLI

Lisa Bauer (UNC Chapel Hill), Lingjia Deng (Bloomberg), Mohit Bansal (UNC Chapel Hill)

Please summarize your research.

Lingjia: This work focuses on Natural Language Inference (NLI), which aims to understand whether a hypothesis is entailed by, contradicts, or is neutral with respect to a premise. For example, given the premise “people cut their expenses for their golden years,” the hypothesis that “people decrease their expenses during retirement” is an entailment. On the other hand, the hypothesis that “people increase their expenses during retirement” is a contradiction. The NLI task requires us to understand the meaning of and relationship between words and phrases in the pair of sentences. Thus, language modeling is a very common and important approach for NLI.

Language models trained on large amounts of data have achieved good performance in the NLI task. However, when applying pre-trained language models to a new task, a new domain, or new data variations, these models do not always perform well and additional knowledge may be needed to guide them. In the example above, it is challenging to know that “golden years” entails “retirement” if we rely only on the context within these two sentences. Introducing appropriate external knowledge is important to the NLI task. Recently, the integration of external knowledge into language models has attracted researchers’ interest (e.g., Neural Natural Language Inference Models Enhanced with External Knowledge by Chen et al., 2018 and ERNIE: Enhanced Language Representation with Informative Entities by Zhang et al., 2019). The goal of this work is to investigate what external knowledge could improve the NLI task and how it could affect performance.

This work proposes a knowledge analysis framework that allows us to adapt external knowledge to the NLI task and to directly control the adapted knowledge input. We also present findings that show strong correlations between the adapted knowledge and downstream performance.

Why are these results notable?

Lingjia: Early work on NLI exploited different features, including logical rules, dependency parsers, and semantics. With the development of large annotated corpora, such as the Stanford Natural Language Inference (SNLI) Corpus and the Multi-Genre NLI (MultiNLI) Corpus, researchers have explored various neural models. Recent work started investigating the introduction of external knowledge to enhance NLI models. However, the external knowledge used was not trained specifically for the NLI task. In this work, we focus instead on converting knowledge relations from different knowledge sources to relationships that are tailored to the NLI task.

We then use this knowledge to illustrate the impact that both knowledge content and representation have on model performance. Such analysis helps explain the changes in the performance of neural models. We performed extensive analysis and experimentation to reveal that there are strong correlations between knowledge polarity and downstream performance.

How does your work advance the state-of-the-art in the field of NLP?

Lingjia: When enhanced with the corresponding external knowledge from various sources, our proposed NLI model achieves better performance on the various NLI categories. Compared to the state-of-the-art work that directly uses external knowledge that is not trained for the NLI task, the external knowledge we’re leveraging is tailored to the NLI task. Further, our NLI model allows us to analyze the impact of different external knowledge on different NLI categories. Even when the knowledge used at inference time shifts (e.g., new knowledge emerges), the model performs robustly.