4 NLP Papers from Bloomberg Researchers Published in “Findings of the ACL: EMNLP 2021” & Conference Workshop

November 09, 2021

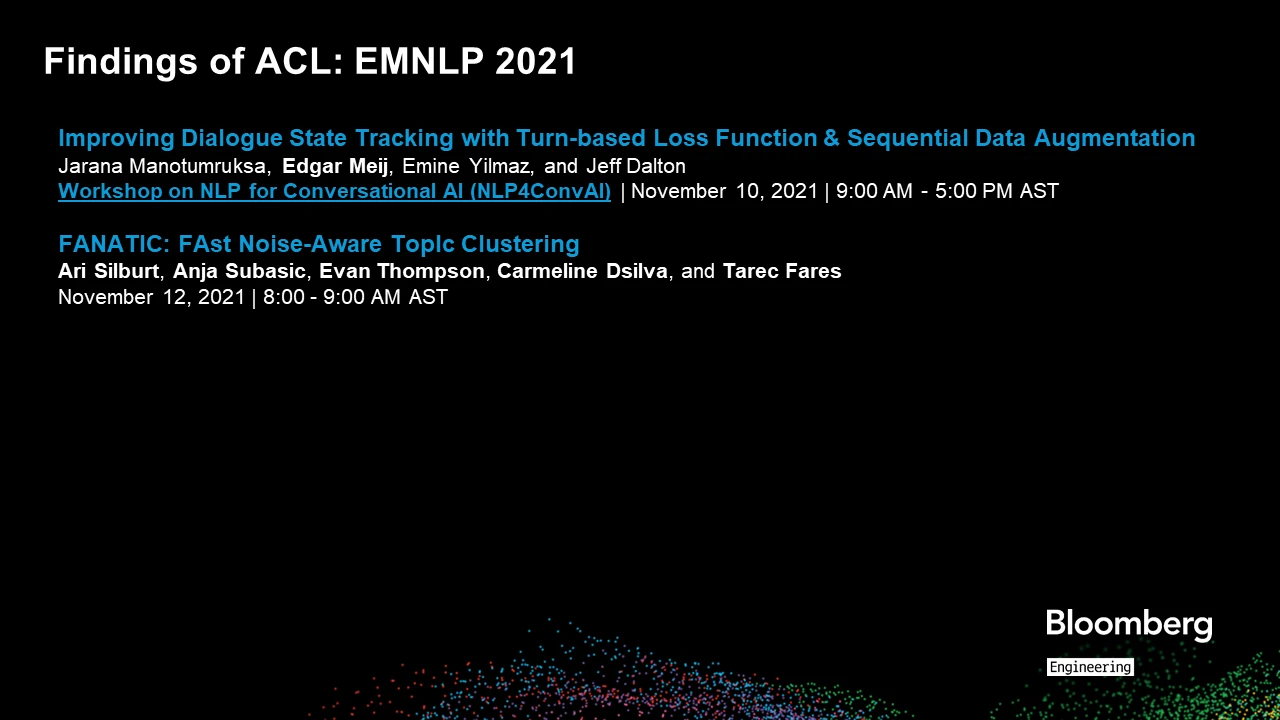

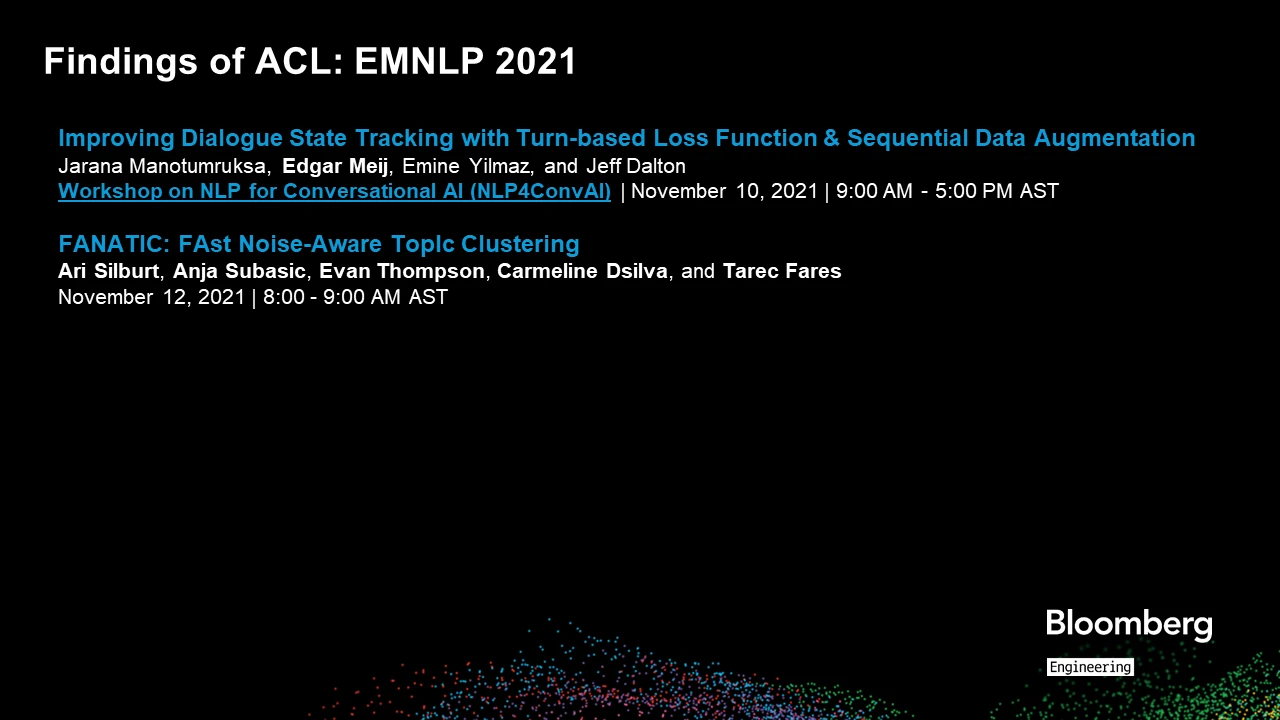

In addition to the four (4) papers published during the 2021 Conference on Empirical Methods in Natural Language Processing (EMNLP 2021) main conference this week, AI researchers and engineers from Bloomberg are also showcasing their expertise in natural language processing (NLP) and computational linguistics by publishing two (2) papers in Findings of the Association for Computational Linguistics: EMNLP 2021, and another two (2) papers featured at the co-located Workshop on Insights from Negative Results in NLP.

In addition, BLAW Machine Learning engineer Leslie Barrett and Bloomberg AI Research Scientist Daniel Preoţiuc-Pietro are two of the organizers of the Natural Legal Language Processing Workshop taking place at EMNLP 2021 on Wednesday, November 10, 2021. The goal of this workshop is to bring together researchers and practitioners from around the world who develop NLP techniques for legal data. The invited speakers are two senior legal scholars: John Armour, Professor of Law and Finance at Oxford University, and Sylvie Delacroix, Professor in Law and Ethics at the University of Birmingham.

Since this legal workshop debuted in 2019, the number of paper submissions has doubled, and 30 papers will be presented orally at the workshop. The papers will cover a wide range of topics, from new data sets for legal NLP and transformer models pre-trained on legal corpora to information retrieval, extraction, question answering, classification, parsing, and summarization for legal documents, as well as legal judgment prediction, legal dialogue, legal reasoning, and ethics.

We asked the authors of the Findings and workshop papers to summarize their research and explain why the results were notable in advancing the state-of-the-art in the field of computational linguistics:

Wednesday, November 10, 2021

Findings of the Association for Computational Linguistics: EMNLP 2021; also being presented during the Workshop on NLP for Conversational AI (NLP4ConvAI)

Improving Dialogue State Tracking with Turn-based Loss Function & Sequential Data Augmentation

Jarana Manotumruksa, Jeff Dalton, Edgar Meij, Emine Yilmaz

Please summarize your research.

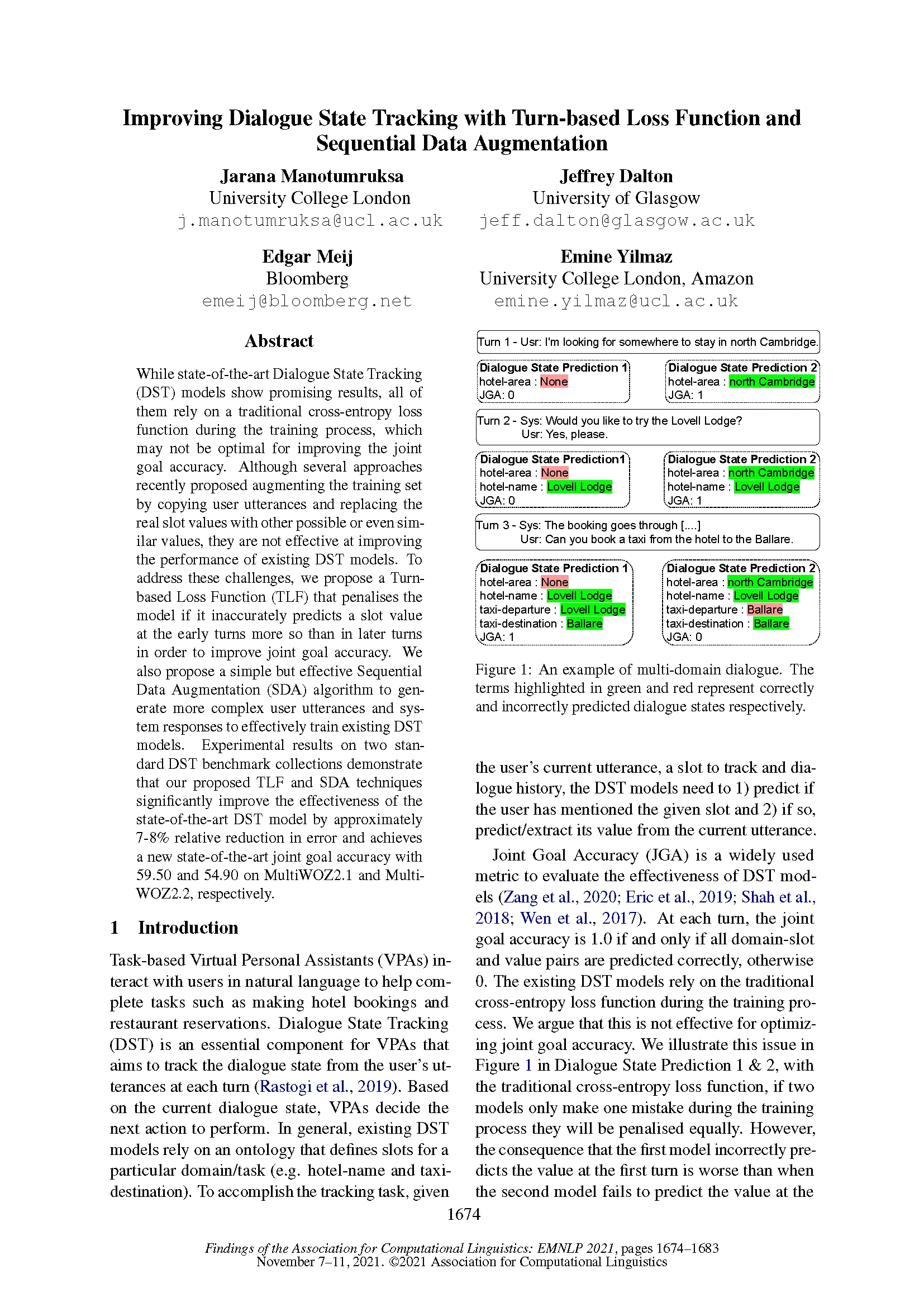

Edgar: We make two main contributions in this paper, both of which are related to improving conversational agents. Dialogue state tracking (DST) is a key component of any such stateful question answering, virtual personal assistant, or other interactive system. DST aims to keep track of what exactly a user is asking for, as well as where they are in the conversation. Based on this information, the model decides how to formulate a follow-up statement or question, or which action to perform.

DST is typically modelled as a slot-filling task, where the system needs to decide whether a user has mentioned a slot, as well as a value for it. For example, when using a conversational agent to book a flight, the model needs to know where the user is flying from, where they are headed, and when they plan to travel. One common way to evaluate such systems is by calculating “Joint Goal Accuracy” at every speaking turn: the score for a turn is 1 if, and only if, every slot and its value in the dialogue state are predicted correctly, and 0 otherwise. Because this metric gives no partial credit, the choice of loss function strongly affects the end performance of the model.
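To make the all-or-nothing nature of Joint Goal Accuracy concrete, here is a minimal Python sketch. It assumes each turn's dialogue state is a dictionary mapping slot names to values; the slot names and the dialogue itself are hypothetical and do not follow the MultiWOZ schema.

```python
def joint_goal_accuracy(predicted_states, gold_states):
    """Fraction of turns whose predicted dialogue state exactly matches the gold state.

    Each state is a dict mapping slot name -> value. A turn scores 1 only if every
    slot-value pair matches (no missing, extra, or wrong slots); otherwise it scores 0.
    """
    if len(predicted_states) != len(gold_states):
        raise ValueError("need one predicted state per gold turn")
    if not gold_states:
        return 0.0
    correct = sum(1 for pred, gold in zip(predicted_states, gold_states) if pred == gold)
    return correct / len(gold_states)


# Hypothetical flight-booking dialogue with three user turns.
gold = [
    {"from": "London"},
    {"from": "London", "to": "New York"},
    {"from": "London", "to": "New York", "date": "Friday"},
]
pred = [
    {"from": "London"},
    {"from": "London", "to": "Newark"},  # one wrong value, so this turn scores 0
    {"from": "London", "to": "New York", "date": "Friday"},
]
print(joint_goal_accuracy(pred, gold))  # two of three turns correct
```

Five of the six gold slot-value pairs in this example are predicted correctly, yet the metric credits only two of the three turns.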

We argue that using a traditional function such as cross-entropy loss leads to poor performance. Furthermore, we hypothesise that errors made in the first few turns of a conversation have a much bigger negative impact on user-perceived performance than errors later in the conversation. We therefore design a turn-based loss function (TLF) that penalises mistakes earlier in the conversation more than later ones.
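The summary does not spell out the exact form of the TLF, so the following is only an illustrative sketch of the general idea, not the paper's formula. It assumes a hypothetical geometric decay, where turn t gets weight decay**t (normalised so the weights sum to 1), applied to an ordinary per-turn cross-entropy; the function names are likewise made up for this example.

```python
import math


def turn_weights(num_turns, decay=0.9):
    """Hypothetical weighting: turn t gets weight decay**t, normalised to sum to 1."""
    raw = [decay ** t for t in range(num_turns)]
    total = sum(raw)
    return [w / total for w in raw]


def turn_weighted_loss(gold_probs_per_turn, decay=0.9):
    """Turn-weighted cross-entropy for one dialogue.

    gold_probs_per_turn[t] lists the probability the model assigns to the gold
    value of each slot at turn t (each list must be non-empty).
    """
    per_turn = [
        -sum(math.log(p) for p in probs) / len(probs)
        for probs in gold_probs_per_turn
    ]
    weights = turn_weights(len(per_turn), decay)
    return sum(w * loss for w, loss in zip(weights, per_turn))


# The same confident mistake (p = 0.1 on the gold value) made early vs. late.
early_mistake = [[0.1], [0.9], [0.9]]
late_mistake = [[0.9], [0.9], [0.1]]
print(turn_weighted_loss(early_mistake) > turn_weighted_loss(late_mistake))  # True
```

Setting decay=1.0 weights every turn equally, which reduces to averaging the per-turn cross-entropy.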

Our second contribution is a data augmentation method that aims to enrich the training dialogues to make them more complex. We hypothesise that such augmented dialogues can help the model better understand the intent, in addition to providing additional relevant context for the slots and their values.

Why is this research notable? How will it help advance the state-of-the-art in the field?

Using comprehensive experiments on multiple benchmark datasets, we find that both methods significantly improve over the existing state of the art, with up to an 8.26% relative reduction in error on the MultiWOZ2.2 test collection. A detailed analysis reveals that the proposed methods are robust to hyperparameter settings, choice of model, and specific domains.
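Because the gain is reported as a relative reduction in error, it may help to see how such a figure is computed. The sketch below assumes error is simply 1 minus accuracy (e.g., Joint Goal Accuracy), and the accuracies in the example are hypothetical, not numbers from the paper.

```python
def relative_error_reduction(baseline_accuracy, new_accuracy):
    """Share of the baseline's error that the new system removes."""
    baseline_error = 1.0 - baseline_accuracy
    if baseline_error == 0:
        raise ValueError("baseline has no error to reduce")
    new_error = 1.0 - new_accuracy
    return (baseline_error - new_error) / baseline_error


# Hypothetical accuracies, not results from the paper.
print(relative_error_reduction(0.50, 0.54))  # about 0.08, i.e. an 8% relative reduction
```

In this made-up example, a 4-point absolute gain in accuracy corresponds to an 8% relative reduction in error, since it removes 0.04 of the baseline's 0.50 error.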

Findings of the Association for Computational Linguistics: EMNLP 2021

FANATIC: FAst Noise-Aware TopIc Clustering

Ari Silburt, Anja Subasic, Evan Thompson, Carmeline Dsilva, Tarec Fares

Please summarize your research.

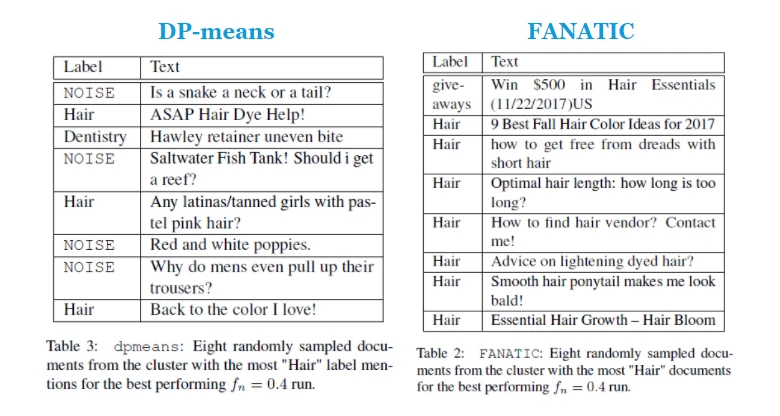

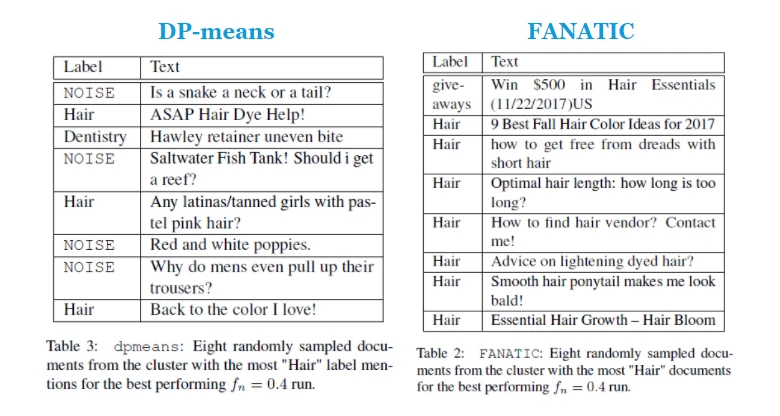

Ari: Data sources today, including product reviews, beliefs, values, etc., contain a wealth of information. However, this information is often embedded in a sea of what we call “topic noise”: data that is irrelevant to any desired topic and should be filtered out. Most current clustering algorithms struggle with topic noise. For example, the two tables in this section show eight randomly sampled documents from the cluster with the most “Hair” topic mentions for each of two clustering algorithms: DP-means (Kulis and Jordan, 2012) and FANATIC (our algorithm). The DP-means cluster contains a lot of topic noise, i.e., sample texts that are irrelevant to the desired topic. In contrast, the FANATIC cluster is much cleaner and more accurately describes the topic of “Hair.”

In this research paper, we present a new document clustering algorithm, FANATIC, which is more capable than other algorithms at identifying and filtering topic noise, while still maintaining high topic coverage in clusters. In addition, FANATIC scales competitively with existing clustering algorithms, making it the obvious choice when clustering documents in the presence of topic noise. FANATIC extends DP-means, a non-parametric k-means algorithm, with additional features tailored to improve the document clustering process with respect to topic noise.
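For readers unfamiliar with DP-means, the following Python sketch shows its core loop: a k-means variant that opens a new cluster whenever a point's squared distance to every existing centroid exceeds a threshold `lam`, so the number of clusters need not be fixed in advance. The final noise step, which relabels members of very small clusters as noise (-1), is a hypothetical stand-in added for illustration; it is not FANATIC's actual noise-aware procedure, and `min_size` is an assumed parameter.

```python
from collections import Counter


def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def centroid(points):
    return [sum(coords) / len(points) for coords in zip(*points)]


def dp_means(points, lam, max_iter=100):
    """DP-means: k-means that opens a new cluster whenever a point's squared
    distance to every existing centroid exceeds `lam`."""
    centroids = [centroid(points)]  # start with one cluster at the global mean
    labels = [0] * len(points)
    for _ in range(max_iter):
        changed = False
        for i, x in enumerate(points):
            dists = [sq_dist(x, c) for c in centroids]
            best = min(range(len(dists)), key=dists.__getitem__)
            if dists[best] > lam:
                centroids.append(list(x))  # open a new cluster at this point
                best = len(centroids) - 1
            if labels[i] != best:
                labels[i] = best
                changed = True
        # Drop empty clusters, relabel 0..k-1, and recompute centroids.
        used = sorted(set(labels))
        remap = {old: new for new, old in enumerate(used)}
        labels = [remap[label] for label in labels]
        centroids = [
            centroid([p for p, label in zip(points, labels) if label == k])
            for k in range(len(used))
        ]
        if not changed:
            break
    return labels, centroids


def mark_small_clusters_as_noise(labels, min_size):
    """Hypothetical noise step: relabel members of clusters smaller than min_size as -1."""
    sizes = Counter(labels)
    return [label if sizes[label] >= min_size else -1 for label in labels]


points = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (5.0, 5.0), (5.1, 5.0), (5.0, 5.1), (10.0, -10.0)]
labels, _ = dp_means(points, lam=1.0)
print(labels)                                   # [0, 0, 0, 1, 1, 1, 2]
print(mark_small_clusters_as_noise(labels, 2))  # [0, 0, 0, 1, 1, 1, -1]
```

Plain DP-means keeps the isolated point as its own cluster; only the added filtering step sets it aside as noise, which is the kind of separation the paragraph above argues should happen jointly with clustering.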

Why is this research notable? How will it help advance the state-of-the-art in the field?

The volume and diversity of data are greater than ever, and data increasingly needs to be refined and filtered for the task at hand to be useful. Chat and social media data, in particular, are rife with topic noise. Since most clustering algorithms (e.g., k-means, agglomerative clustering) have no mechanism for filtering topic noise, any impurities propagate into the results unless the user manually filters the data in a separate step before or after clustering, which adds risk. In contrast, FANATIC clusters documents and filters topic noise jointly, resulting in higher-quality clusters and, ultimately, better business insights and decisions.

Wednesday, November 10, 2021

Workshop on Insights from Negative Results in NLP (10 AM AST)

Comparing Euclidean and Hyperbolic Embeddings on the WordNet Nouns Hypernymy Graph

Sameer Bansal, Adrian Benton

Please summarize your research.

Adrian: Word embeddings are important building blocks in NLP: each word in our vocabulary is represented as a vector, a fixed-length list of numbers. Prior work has shown that if we treat word embeddings as points in a vector space, the distances between those points often capture notions of semantic and syntactic similarity. This means that words that are close together often have similar meanings (e.g., words describing professions cluster in one region of the space) or serve the same function in a sentence (e.g., adverbs cluster in one part of the space and nouns in another).
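As a toy illustration of "close vectors, similar words," the Python sketch below finds a word's nearest neighbor by Euclidean distance. The three-dimensional vectors are made up for this example; real embeddings are learned from text and usually have hundreds of dimensions.

```python
import math

# Toy 3-dimensional "embeddings" made up for illustration only.
TOY_EMBEDDINGS = {
    "doctor": [0.90, 0.10, 0.00],
    "nurse": [0.85, 0.15, 0.05],
    "quickly": [0.00, 0.10, 0.90],
    "slowly": [0.05, 0.00, 0.95],
}


def euclidean(u, v):
    """Straight-line distance between two vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def nearest_neighbor(word, embeddings):
    """Return the other vocabulary word whose vector is closest to `word`'s vector."""
    query = embeddings[word]
    candidates = (w for w in embeddings if w != word)
    return min(candidates, key=lambda w: euclidean(query, embeddings[w]))


print(nearest_neighbor("doctor", TOY_EMBEDDINGS))   # nurse
print(nearest_neighbor("quickly", TOY_EMBEDDINGS))  # slowly
```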

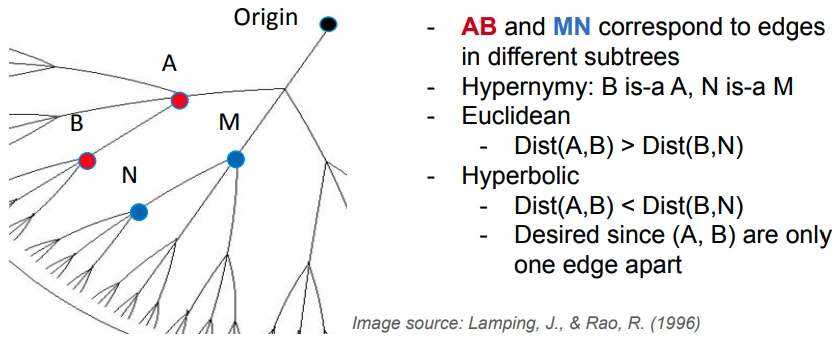

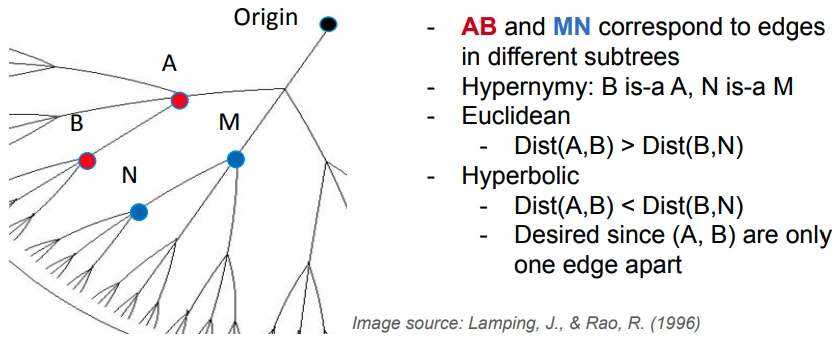

One particular type of relation between words is hypernymy, basically the “is-a” relation. For example, PLANT “is-a” kind of ORGANISM; therefore, ORGANISM is a hypernym of PLANT. English nouns can be arranged in a tree in which each word is connected by an edge to its direct hypernym (i.e., the noun hypernymy tree). The 2017 NeurIPS paper, “Poincaré Embeddings for Learning Hierarchical Representations,” proposed an elegant solution for embedding tree nodes (e.g., the nouns in the pictured hypernymy tree) such that distances between nodes in the embedding space are faithful to distances within the tree. The authors’ solution was to embed nodes in hyperbolic space.
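In the Poincaré paper's WordNet experiments, the embeddings are trained on the transitive closure of the noun hierarchy, i.e., every (word, ancestor) pair rather than only direct edges. The sketch below computes such a closure for a hypothetical miniature hierarchy; the word list is illustrative, not actual WordNet data.

```python
from collections import defaultdict


def transitive_closure(is_a_edges):
    """Return every (word, ancestor) pair implied by direct (child, parent) edges."""
    parents = defaultdict(set)
    for child, parent in is_a_edges:
        parents[child].add(parent)
    closure = set()
    for node in list(parents):
        stack, seen = list(parents[node]), set()
        while stack:
            ancestor = stack.pop()
            if ancestor in seen:
                continue
            seen.add(ancestor)
            closure.add((node, ancestor))
            stack.extend(parents.get(ancestor, ()))  # climb to the next level up
    return closure


# Hypothetical miniature hierarchy in the spirit of WordNet's noun tree.
direct = [
    ("plant", "organism"),
    ("tree", "plant"),
    ("oak", "tree"),
    ("animal", "organism"),
    ("dog", "animal"),
]
pairs = transitive_closure(direct)
print(len(pairs))                    # 9
print(("oak", "organism") in pairs)  # True
```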

For typical word embeddings, we measure the distance between points “as the crow flies,” i.e., along a straight line, which is how we normally think of distances. Hyperbolic space behaves differently: distances between points grow rapidly as the points move away from the origin toward the edge of the ball. This is perfect for embedding tree nodes, as the number of nodes in a tree can grow exponentially with its depth. We can therefore arrange all the leaves of the tree near the edge of a ball centered at the origin; even though the leaves appear close together, the hyperbolic distance between each pair of them is actually very large. The 2017 NeurIPS paper empirically demonstrates that embedding words in hyperbolic space yields far more compact representations than typical (Euclidean) embeddings across a wide variety of trees, including the nouns in the WordNet hypernymy tree.
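The Poincaré ball model used in that paper defines the distance between points u and v inside the unit ball as arcosh(1 + 2‖u − v‖² / ((1 − ‖u‖²)(1 − ‖v‖²))). The Python sketch below compares it with ordinary Euclidean distance for pairs of points placed at increasing distances from the origin; the particular points are arbitrary choices for illustration.

```python
import math


def euclidean(u, v):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def poincare_distance(u, v):
    """Distance in the Poincare ball model (points must have norm < 1)."""
    sq_u = sum(a * a for a in u)
    sq_v = sum(b * b for b in v)
    if sq_u >= 1.0 or sq_v >= 1.0:
        raise ValueError("points must lie strictly inside the unit ball")
    sq_diff = sum((a - b) ** 2 for a, b in zip(u, v))
    return math.acosh(1.0 + 2.0 * sq_diff / ((1.0 - sq_u) * (1.0 - sq_v)))


# Pairs of points at a right angle, placed closer to and farther from the origin.
for r in (0.1, 0.5, 0.9):
    u, v = (r, 0.0), (0.0, r)
    print(f"r={r}: euclidean={euclidean(u, v):.3f}, hyperbolic={poincare_distance(u, v):.3f}")
```

Near the origin the two distances differ by roughly a constant factor of 2, while for points near the edge of the ball the hyperbolic distance is about four times the Euclidean one, which is what leaves room to spread out many leaves.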

In our work, we simply replicate one experiment from “Poincaré Embeddings for Learning Hierarchical Representations,” embedding nouns in the WordNet nouns hypernymy tree in both hyperbolic and Euclidean (typical word embedding) space. While prior work found that Euclidean embeddings cannot represent this tree well even with 200 dimensions, we find that Euclidean embeddings with at least 50 dimensions represent it just as well as hyperbolic embeddings, and with at least 100 dimensions, they represent it even more faithfully. With help from the original authors, we identified one possible cause of the poor Euclidean baseline: an unnecessary restriction on how far these embeddings could lie from the origin.
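Reconstruction quality in this line of work is commonly summarised by a mean rank: for each observed edge (u, v), count how many nodes unrelated to u are closer to u than v is. The Python sketch below is a simplified version of that idea (our own minimal reading, not the original codebase's evaluation), using hypothetical node names and 2-D embeddings; any distance function, such as a Poincaré distance, can be passed as `dist`.

```python
import math


def euclidean(u, v):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def mean_rank(embeddings, edges, dist=euclidean):
    """Simplified reconstruction mean rank (1.0 is perfect).

    For each observed edge (u, v), count the nodes w that are NOT related to u
    (in either direction) yet lie closer to u than v does; the edge's rank is
    1 + that count.
    """
    related = set(edges) | {(v, u) for u, v in edges}
    ranks = []
    for u, v in edges:
        d_uv = dist(embeddings[u], embeddings[v])
        closer = sum(
            1
            for w in embeddings
            if w != u
            and (u, w) not in related
            and dist(embeddings[u], embeddings[w]) < d_uv
        )
        ranks.append(1 + closer)
    return sum(ranks) / len(ranks)


edges = [("b", "a"), ("c", "a")]  # hypothetical is-a pairs: b is-a a, c is-a a
good = {"a": [0.0, 0.0], "b": [0.1, 0.0], "c": [0.0, 0.1], "d": [5.0, 5.0]}
bad = dict(good, d=[0.05, 0.0])  # unrelated node d squeezed next to b
print(mean_rank(good, edges))  # 1.0
print(mean_rank(bad, edges))   # 1.5
```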

Why is this research notable? How will it help advance the state-of-the-art in the field?

The original results on embedding the nouns hypernymy tree were striking: Euclidean embeddings came nowhere near the reconstruction performance of hyperbolic embeddings. We were very excited after reading this paper, but immediately encountered problems replicating those results. We published our work because we believe it is important for others to have a clear picture of where hyperbolic embeddings could be useful before diving into this body of research.

Replicability is a fundamental precondition for making progress in science. Our work calls into question the efficacy of hyperbolic embeddings relative to standard word embeddings, at least when enough dimensions are allowed for learning the standard embeddings. We still find that hyperbolic embeddings are far more effective at embedding trees with very few dimensions, which agrees with the original paper’s thesis.

Note that our work was made possible by the original authors releasing a codebase that enabled us to replicate their experiments. Without it, reproducing their experiments would have been much more difficult.

Wednesday, November 10, 2021

Workshop on Insights from Negative Results in NLP (3 PM AST)

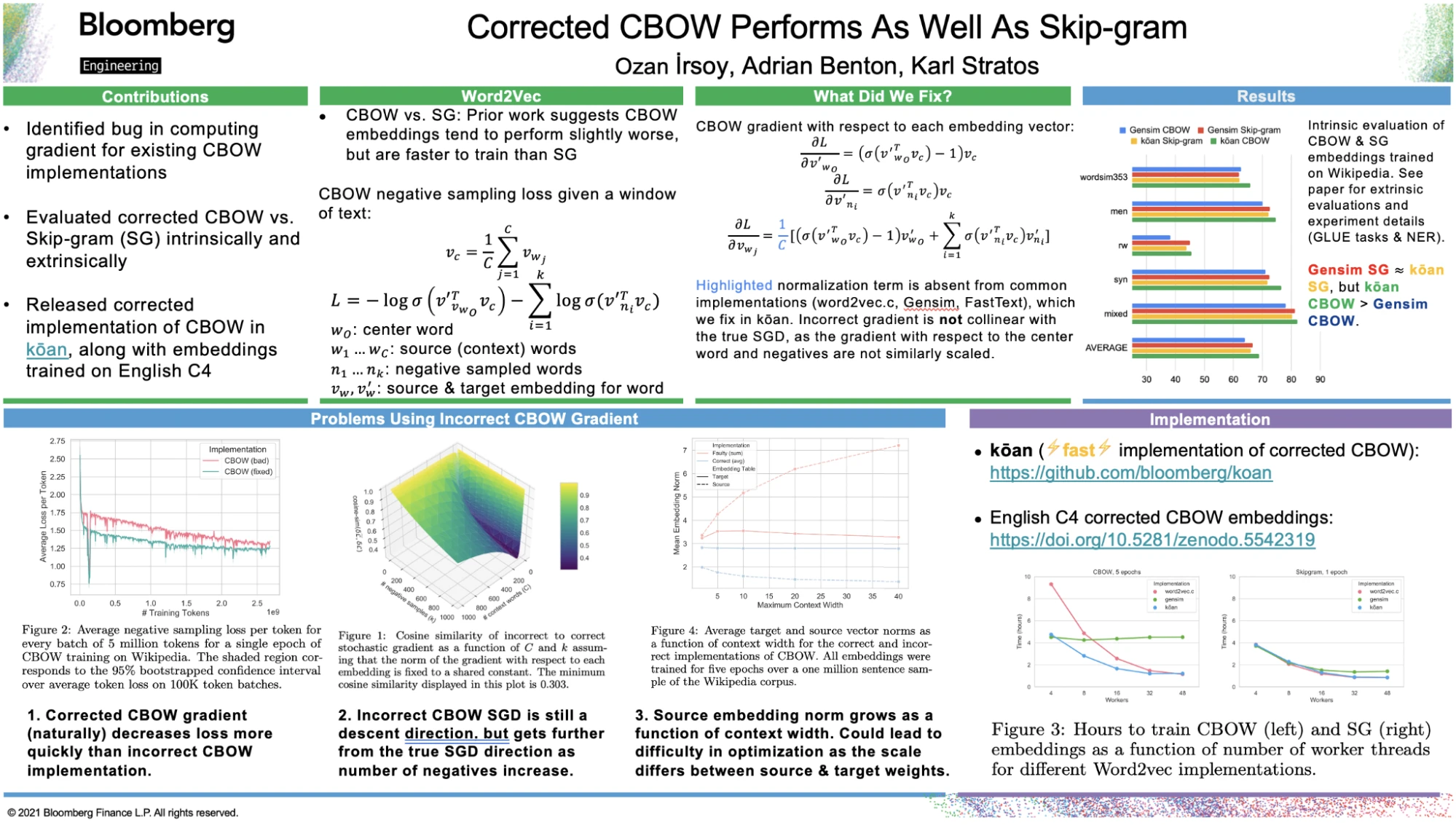

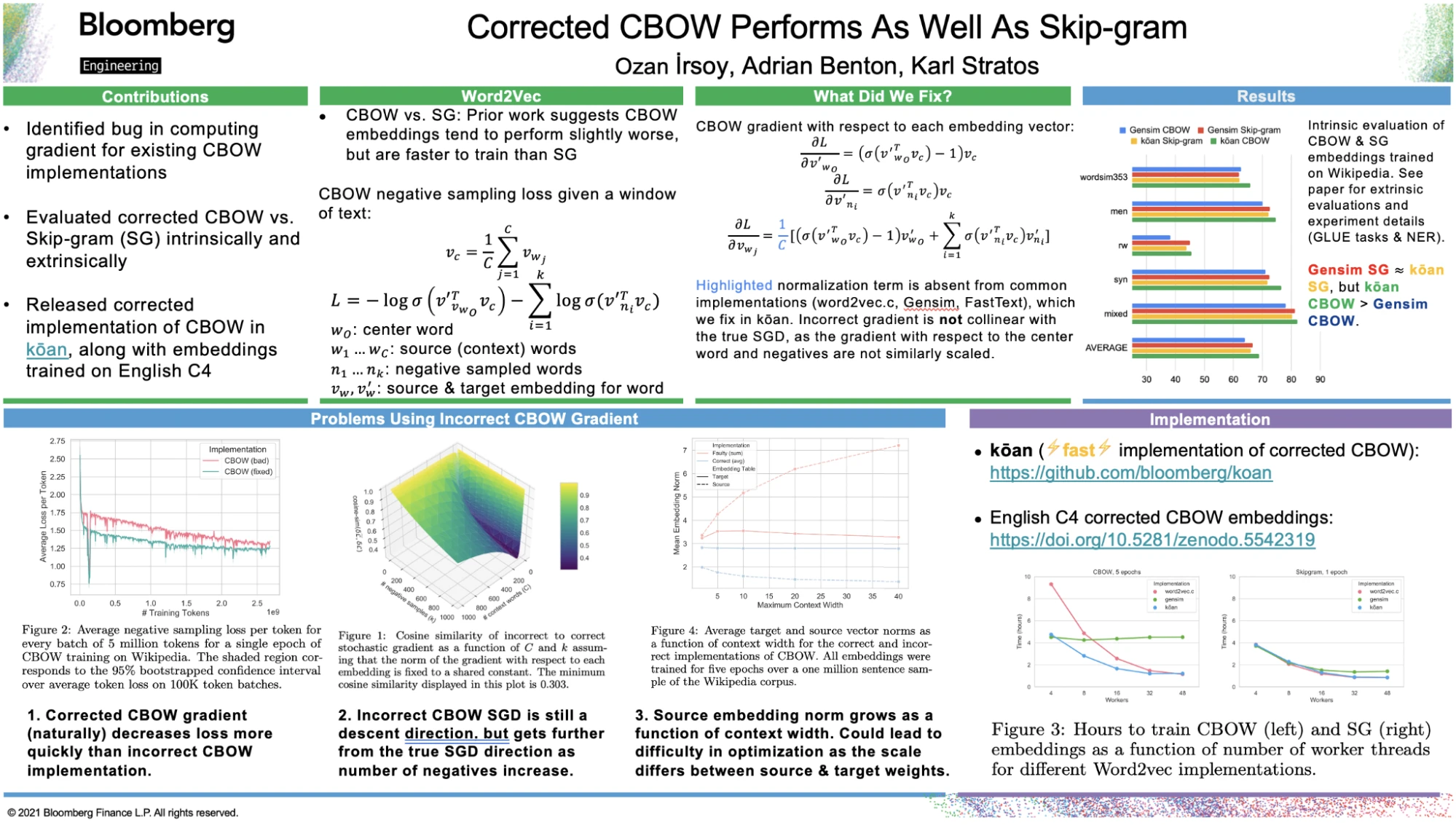

Corrected CBOW Performs as well as Skip-gram

Ozan İrsoy, Adrian Benton, Karl Stratos

Please summarize your research.

Ozan: Word2vec is a commonly used algorithm for learning vector representations of words, called word embeddings. Word embeddings, a workhorse in NLP, are typically fed as input to models solving a variety of NLP tasks, including named entity recognition, relation extraction, and sentiment analysis, among many others. Popular implementations of Word2vec come in two flavors: continuous bag-of-words (CBOW) and Skip-gram. While CBOW embeddings are faster to train than Skip-gram embeddings, prior work has found that they tend to perform worse.
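The two flavors differ in what they predict. The Python sketch below generates training examples for each from a tiny sentence, using a fixed window and ignoring word2vec's dynamic window sizes, subsampling, and negative sampling. CBOW yields one example per position (predict the center word from its whole context), whereas Skip-gram yields one example per (center, context) pair, which helps explain why CBOW is faster to train.

```python
def cbow_examples(tokens, window):
    """CBOW: one (context words -> center word) example per position."""
    examples = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        if context:
            examples.append((context, center))
    return examples


def skipgram_examples(tokens, window):
    """Skip-gram: one (center word -> single context word) example per context word."""
    return [
        (center, ctx)
        for context, center in cbow_examples(tokens, window)
        for ctx in context
    ]


tokens = "the cat sat on the mat".split()
print(len(cbow_examples(tokens, window=1)))      # 6
print(len(skipgram_examples(tokens, window=1)))  # 10
print(cbow_examples(tokens, window=1)[1])        # (['the', 'sat'], 'cat')
```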

In this workshop paper, our main contribution is reporting a subtle bug in existing CBOW implementations. The bug appears in how the gradient is computed with respect to a subset of CBOW parameters. Although this bug is minor (just a missing division term in the gradient computation), we found that fixing it improves the training process, reaching lower training loss while training on less text. This results in CBOW embeddings that achieve intrinsic and downstream performance on par with Skip-gram. We also consider other repercussions of the bug, such as the parameter configurations under which it is most harmful, and we monitor statistics related to CBOW parameters as training unfolds.
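The following Python sketch shows our reading of where a missing division can arise, assuming word2vec-style negative sampling. In CBOW the hidden vector h is the average of the C context vectors, so by the chain rule each context vector should receive (1/C) times the gradient with respect to h; dropping that factor scales every context-vector update by C. The function and argument names are hypothetical, and this is a toy, not the kōan or word2vec.c code.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cbow_context_grads(context_vecs, target_out, negative_outs, normalize=True):
    """Negative-sampling loss and its gradient w.r.t. each CBOW context (input) vector.

    h    = mean of the C context vectors
    loss = -log sigmoid(target_out . h) - sum_k log sigmoid(-negative_k . h)
    Since h averages the context vectors, d loss / d context_c = (1/C) * d loss / d h.
    normalize=False drops that 1/C factor, mimicking the missing division.
    """
    c = len(context_vecs)
    dim = len(target_out)
    h = [sum(vec[d] for vec in context_vecs) / c for d in range(dim)]

    s_pos = sigmoid(dot(target_out, h))
    loss = -math.log(s_pos)
    grad_h = [(s_pos - 1.0) * t for t in target_out]
    for neg in negative_outs:
        s_neg = sigmoid(dot(neg, h))
        loss -= math.log(1.0 - s_neg)  # -log sigmoid(-x) == -log(1 - sigmoid(x))
        grad_h = [g + s_neg * n for g, n in zip(grad_h, neg)]

    scale = 1.0 / c if normalize else 1.0
    return loss, [[scale * g for g in grad_h] for _ in range(c)]


context = [[0.1, 0.2], [0.3, -0.1], [0.0, 0.4]]
loss, fixed = cbow_context_grads(context, [0.5, -0.2], [[0.1, 0.3]])
_, buggy = cbow_context_grads(context, [0.5, -0.2], [[0.1, 0.3]], normalize=False)
# The unnormalised update is C = 3 times larger for every context vector.
print(buggy[0][0] / fixed[0][0])
```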

Why is this research notable? How will it help advance the state-of-the-art in the field?

While this bug is subtle, CBOW and Skip-gram are heavily used throughout the NLP community. By fixing it, we hope to enable the training of strong Word2vec embeddings on large corpora, since CBOW is faster to train than Skip-gram. We release kōan, our corrected CBOW implementation, as well as CBOW embeddings trained on the English Colossal Clean Crawled Corpus (C4), over a terabyte of web text.

Although it is typical to rely on automatic differentiation libraries to compute gradients, implementing the gradient computation by hand is necessary for training these embeddings efficiently. Following best practices for verifying manually computed gradients (e.g., finite-difference checks) should be obligatory, and doing so would have caught this bug before the original word2vec.c code was released in 2013. Our work serves as a cautionary tale about ensuring quality in released research code: machine learning bugs can be very subtle and pass unnoticed by the community for many years.
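A finite-difference check of the kind mentioned above takes only a few lines. The Python sketch below compares an analytic gradient against central differences on a miniature, hypothetical CBOW-style loss in which scalar context values are averaged before scoring; the weight, tolerance, and step size are arbitrary choices. The version that forgets to divide by the number of context values fails the check.

```python
import math


def numerical_grad(f, x, eps=1e-6):
    """Central-difference estimate of the gradient of scalar function f at x."""
    grad = []
    for i in range(len(x)):
        x_plus, x_minus = list(x), list(x)
        x_plus[i] += eps
        x_minus[i] -= eps
        grad.append((f(x_plus) - f(x_minus)) / (2.0 * eps))
    return grad


def gradient_check(f, analytic_grad, x, tol=1e-5):
    """True if the analytic gradient matches finite differences within tol."""
    numeric = numerical_grad(f, x)
    analytic = analytic_grad(x)
    return all(
        abs(a - n) <= tol * max(1.0, abs(a), abs(n)) for a, n in zip(analytic, numeric)
    )


# Miniature CBOW-style loss: scalar "context vectors" x are averaged into h,
# then scored against a fixed, hypothetical output weight W.
W = 0.7


def loss(x):
    h = sum(x) / len(x)
    return -math.log(1.0 / (1.0 + math.exp(-W * h)))


def grad_correct(x):
    h = sum(x) / len(x)
    s = 1.0 / (1.0 + math.exp(-W * h))
    return [(s - 1.0) * W / len(x) for _ in x]  # includes the 1/C from averaging


def grad_missing_division(x):
    h = sum(x) / len(x)
    s = 1.0 / (1.0 + math.exp(-W * h))
    return [(s - 1.0) * W for _ in x]  # forgets to divide by C


x0 = [0.2, -0.5, 0.9]
print(gradient_check(loss, grad_correct, x0))           # True
print(gradient_check(loss, grad_missing_division, x0))  # False
```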